texticide

v1.1.0

Identify and nuke text fragments using regular expressions.

Texticide.

Texticide.js is a JavaScript library that identifies all fragments of a given text that match a given regular expression and provides extra functionality for editing out those fragments. Texticide.js has zero dependencies and works standalone.

Installation.

CDN.

Deliver the package via CDN:

- jsdelivr:

https://cdn.jsdelivr.net/npm/texticide

- unpkg:

https://unpkg.com/texticide

NPM.

To install with NPM run:

npm install texticide

Embedded.

Include the file texticide.min.js in your project directory. Then:

Browser:

<script src="path/to/texticide.min.js"></script>

Node:

const {Diction, Sanitizer} = require("path/to/texticide.min.js");

ES6 Module.

The build directory contains an ES6 module version of the library. The default import of the module is the Texticide object itself:

import Texticide from "texticide.min.mjs"; // Use this in Deno

import {Diction, Sanitizer} from "texticide.min.mjs";

RequireJS.

requirejs(["path/to/texticide.min.js"], function(Texticide) {

const {Diction, Sanitizer} = Texticide;

// Do your stuff here

});

Usage.

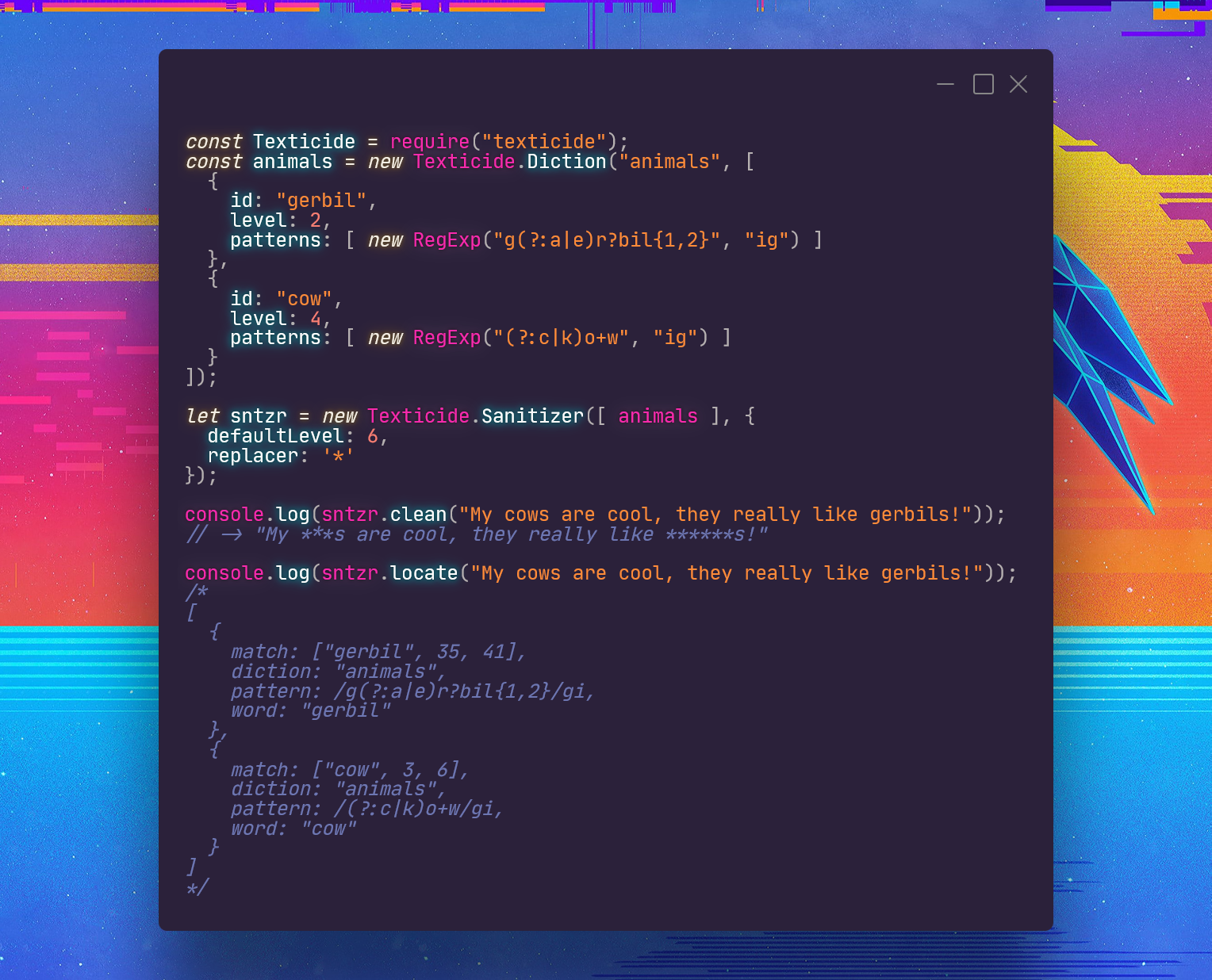

The Texticide object exposes two main classes, Diction and Sanitizer. The Diction class is used to build a set of recognizable words. A word here has an id (identifier), a level (for filtering) and patterns (an array of regular expressions). The Sanitizer provides the functionality to locate and clean those words in a piece of text.
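To make the shape of a word concrete, here is a minimal sketch in plain JavaScript that does not use Texticide at all. The word object and the findWord helper are hypothetical names chosen for illustration; the sketch only shows why a word carries an array of patterns, and it is not how the library is implemented.

```javascript
// Hypothetical word object in the shape described above (id, level, patterns).
const word = {
  id: "cat",
  level: 5,
  patterns: [/(c|k)at/gi, /kitt(y|en)/gi],
};

// Hypothetical helper: collect every match of every pattern of a word.
function findWord(word, text) {
  const hits = [];
  for (const pattern of word.patterns) {
    // matchAll requires the "g" flag and iterates over a copy of the regex,
    // so pattern.lastIndex is left untouched between calls.
    for (const m of text.matchAll(pattern)) {
      hits.push({
        word: word.id,
        text: m[0],
        start: m.index,
        end: m.index + m[0].length,
      });
    }
  }
  // Report the matches in the order they appear in the text.
  return hits.sort((a, b) => a.start - b.start);
}

const hits = findWord(word, "My Kat chased a kitten");
console.log(hits);
```

Both "Kat" and "kitten" are reported under the single id "cat", even though no single simple expression covers both spellings.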

Example.

Let's say we have an array of animal-related sentences in a file (examples.json). We want to send it to Mars, and it turns out Martians don't like animals from Earth. So we have to edit out any and all instances of 'animal' words from the sentences.

[

"Such cute gerbils!",

"Stupid cat, scratching the upholstery",

"Alright peter rabbit!",

"My cows are cool. They really like chickens",

"My dog is real cool",

"Your cat is a bitch",

"Get the fuck outta here pig!",

"A dog wandered into our garden one day",

"For I will consider my Cat Jeoffry",

"You’ve read of several kinds of Cat"

]

First we need to build our Diction instance, which defines what our Sanitizer can recognize. The first parameter is the name of the instance. This is useful for scoping: the name is reported in the diction property of every match returned by Sanitizer.locate. The second parameter is an array of object literals (words) that contain id, level and patterns properties. Levels are explained in the "Levels in texticide" section. patterns is an array because a word can have multiple patterns that may not fit into a single regular expression.

const Texticide = require("texticide");

let animals = new Texticide.Diction("Animals", [

{

id: "gerbil",

level: 2,

patterns: [ new RegExp("g(a|e)r?bil{1,2}", "ig") ]

},

{

id: "cat",

level: 5,

patterns: [ new RegExp("(c|k)at", "ig") ]

},

{

id: "rabbit",

level: 4,

patterns: [ new RegExp("r(a|e)b{1,3}it{1,2}", "ig") ]

},

{

id: "cow",

level: 4,

patterns: [ new RegExp("(c|k)o+w", "ig") ]

},

{

id: "dog",

level: 4,

patterns: [ new RegExp("dog", "ig") ]

},

{

id: "pig",

level: 3,

patterns: [ new RegExp("p(i|y)g+", "ig") ]

}

]);

Next we need to build our Sanitizer. The constructor takes two parameters: an array of Diction instances (its dictionary) and a config object literal. The dictionary tells the Sanitizer which words to recognize, which allows one to build multiple sanitizers, each with a different dictionary. The config object has two optional properties, defaultLevel and replacer. The defaultLevel tells the Sanitizer to only hit words with a level equal to or lower than defaultLevel. The replacer can be a function or a string, and it tells Sanitizer.clean how to erase found matches. If a string is given, a character is picked at random from the string and substituted in place of each letter in the match:

| Text | replacer | Result |
|---|---|---|
| I love dogs. | '+' | I love ++++. |
| Sadly bacon comes from pigs. | '123' | Sadly bacon comes from 312. |
| I am allergic to cats. | '&-%!' | I am allergic to &!%-. |

If a function is used, it is called with the found text and its return value is injected in place.

const replacer = x => x.length;

// I am allergic to any cat. => I am allergic to any 3.

// Sadly bacon comes from piggs. => Sadly bacon comes from 5.

// I love dogs. => I love 4.

Now let us build a Sanitizer:

let sntzr = new Texticide.Sanitizer([animals], {

defaultLevel: 4,

replacer: "*"

});

The sntzr has two methods, locate and clean. locate returns an array of matches and clean returns a cleaned-up string:

const examples = require("./examples.json");

examples.forEach(( sentence ) => {

sntzr.locate(sentence).forEach(find => console.log(sentence, "=>", find));

});

Sanitizer.locate returns an array of matches. Each match is an object literal with the following properties: (1) match: an array with the actual text found and its start and end positions inside the input string. (2) word: the id of the word that produced the match. (3) pattern: the regex that produced the match. (4) diction: the name of the Diction in which the word was declared. Output:

Such cute gerbils! => {

match: [ 'gerbil', 10, 16 ],

diction: 'Animals',

pattern: /g(a|e)r?bil{1,2}/gi,

word: 'gerbil'

}

Get the fuck outta here pig! => {

match: [ 'pig', 24, 27 ],

diction: 'Animals',

pattern: /p(i|y)g+/gi,

word: 'pig'

}

Sanitizer.clean replaces every identified match in the given text, using replacer for the replacement:

const examples = require("./examples.json");

examples.forEach(( sentence ) => {

console.log(sntzr.clean(sentence));

});

Giving us the output:

Such cute ******s!

Stupid cat, scratching the upholstery

Alright peter rabbit!

My cows are cool. They really like chickens

My dog is real cool

Your cat is a bitch

Get the fuck outta here ***!

A dog wandered into our garden one day

For I will consider my Cat Jeoffry

You’ve read of several kinds of Cat

Both Sanitizer.locate and Sanitizer.clean take two extra arguments. The second argument explicitly sets the level to be used, and the third argument is an array of ids of words to be ignored. For example:

const examples = require("./examples.json");

examples.forEach(( sentence ) => {

console.log(sntzr.clean(sentence, 6, ["cow"]));

});

Output:

Such cute ******s! << hits all words, as they are all below 6 >>

Stupid ***, scratching the upholstery

Alright peter ******!

My cows are cool. << ignores cows as it is included in the exclude set (third arg of sntzr.clean) >>

My *** is real cool

Your *** is a bitch

Get the fuck outta here ***!

A *** wandered into our garden one day

For I will consider my *** Jeoffry

You’ve read of several kinds of ***

Levels in texticide.

You might have noticed words taking a level attribute in their definition. A level provides a simple form of filtering. It is used within the Sanitizer.locate and Sanitizer.clean functions to filter out matches. For example, the word dog has a level of 4, gerbil a level of 2 and cat a level of 5. If we pass a second parameter of 4 to Sanitizer.locate or Sanitizer.clean, it will ignore all words with levels higher than 4 (level > filter). Therefore, cat will be ignored, as it has a level of 5. For example:

const examples = require("./examples.json");

examples.forEach(( sentence ) => {

console.log(sntzr.clean(sentence, 4));

});

// Such cute ******s!

// Stupid cat, scratching the upholstery

// Alright peter ******!

// My **** are cool.

// My *** is real cool

// Your cat is a bitch

// Get the fuck outta here ***!

// A *** wandered into our garden one day

// For I will consider my Cat Jeoffry

// You’ve read of several kinds of Cat

Therefore, to match any and all words use a filter of Infinity, and to match none use a filter of -Infinity.
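The filtering rule can be sketched in a few lines of plain JavaScript. This is only an illustration under stated assumptions: selectWords is a hypothetical helper, not part of Texticide, and the words array is a cut-down stand-in for the Animals diction. It applies the rule described above (keep a word when its level is at or below the filter) together with the exclude list accepted as the third argument.

```javascript
// Hypothetical stand-ins for some of the words defined in the example.
const words = [
  { id: "gerbil", level: 2 },
  { id: "cat", level: 5 },
  { id: "dog", level: 4 },
];

// Hypothetical helper: keep the ids of words whose level is at or below
// the filter and whose id is not in the exclude list.
function selectWords(words, level, exclude = []) {
  return words
    .filter((w) => w.level <= level && !exclude.includes(w.id))
    .map((w) => w.id);
}

console.log(selectWords(words, 4));          // [ 'gerbil', 'dog' ]
console.log(selectWords(words, Infinity));   // [ 'gerbil', 'cat', 'dog' ]
console.log(selectWords(words, -Infinity));  // []
console.log(selectWords(words, 6, ["dog"])); // [ 'gerbil', 'cat' ]
```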

You can also pre-define the filtering levels for both Sanitizer.locate and Sanitizer.clean functions while building the Sanitizer like this:

let sntzr = new Texticide.Sanitizer([animals], {

defaultLevel: 4,

});

const examples = require("./examples.json");

examples.forEach(( sentence ) => {

console.log(sntzr.clean(sentence)); // no level passed, so defaultLevel (4) applies

});

// Such cute ******s!

// Stupid cat, scratching the upholstery

// Alright peter ******!

// My **** are cool.

// My *** is real cool

// Your cat is a bitch

// Get the fuck outta here ***!

// A *** wandered into our garden one day

// For I will consider my Cat Jeoffry

// You’ve read of several kinds of Cat

Extras.

- Feedback would be much appreciated.

- For any issues, go here.

- I would really appreciate it if you dropped me a star 🌟