sec2go

v1.1.0


**SecSecGo provides an alternative way to find quarterly earnings, annual reports and more via a GraphQL API hosted in the cloud or locally.**


Set up your own SecSecGo API:


- Easy way to retrieve SEC filings for any US-based company
- No more filtering through index files, effectively reducing the system
- Simplifies EDGAR querying by use of a single API endpoint, eliminating multiple data requests
- Has automated SEC EDGAR data to DB import functionality

Things you can do with SecSecGo:

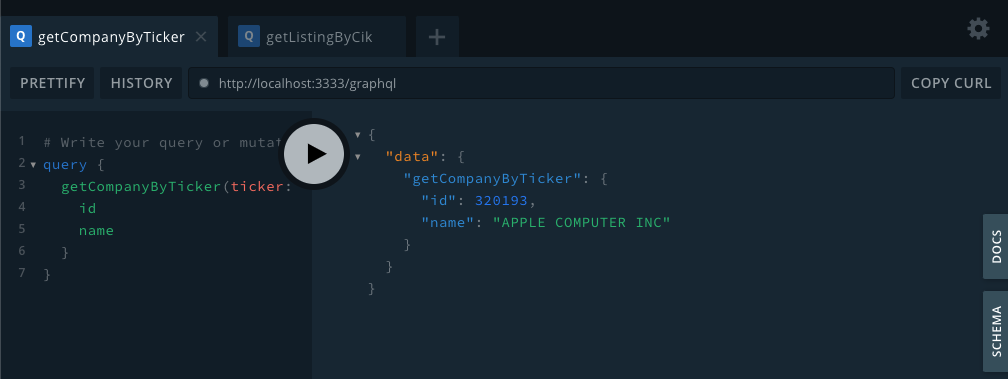

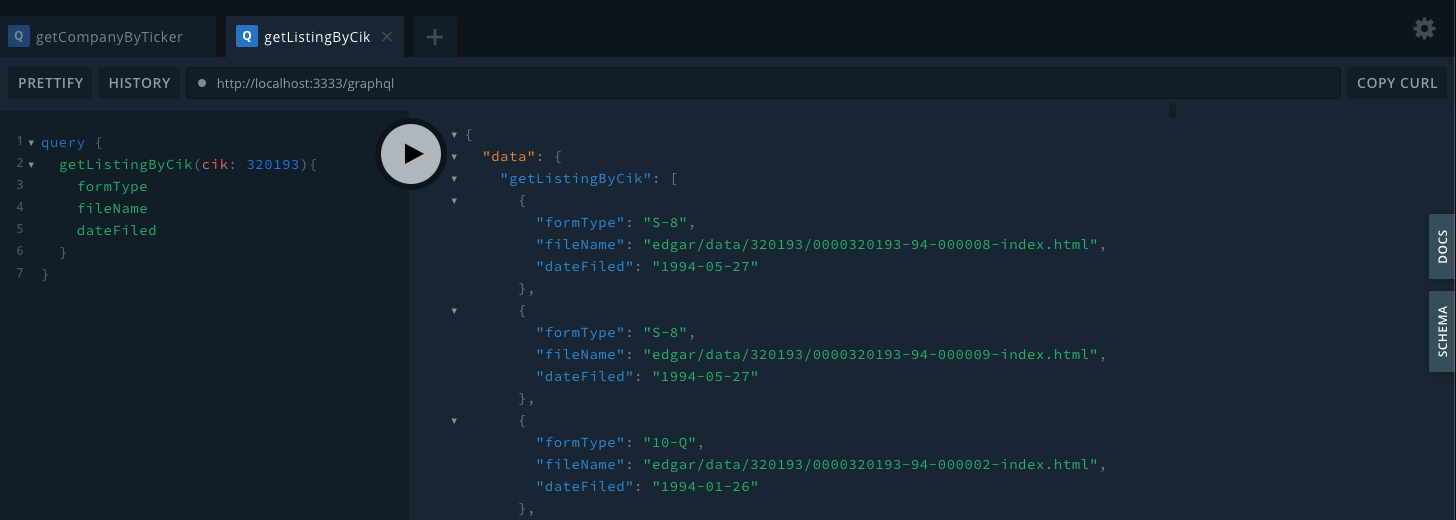

- [x] Find a company by trading symbol and list available filings

- [x] View specific filing in HTML format
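As a rough illustration of the first item, a client could send a GraphQL query over HTTP along these lines. The query and its field names (`company`, `symbol`, `filings`, `formType`, `dateFiled`) are hypothetical placeholders, not the actual SecSecGo schema, and `buildGraphQLRequest` and `runQuery` are illustrative helpers; only the `/graphql` path comes from the server setup in this README. The sketch assumes Node 18+ for the global `fetch`.

```javascript
// HYPOTHETICAL query: these field names are illustrative assumptions,
// not the real SecSecGo schema. Inspect the schema served at /graphql.
const EXAMPLE_QUERY = `
  query FilingsBySymbol($symbol: String!) {
    company(symbol: $symbol) {
      name
      filings { formType dateFiled }
    }
  }
`;

// Builds the options for a standard GraphQL-over-HTTP POST request.
function buildGraphQLRequest(query, variables = {}) {
  return {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ query, variables }),
  };
}

// Sends the query and returns the `data` payload, throwing on any error.
async function runQuery(endpoint, query, variables) {
  const response = await fetch(endpoint, buildGraphQLRequest(query, variables));
  if (!response.ok) {
    throw new Error(`GraphQL request failed: ${response.status}`);
  }
  const { data, errors } = await response.json();
  if (errors) {
    throw new Error(errors.map((e) => e.message).join('; '));
  }
  return data;
}
```

With a server running locally, a call might look like `runQuery('http://localhost:' + PORT + '/graphql', EXAMPLE_QUERY, { symbol: 'AAPL' })`, once the query is replaced with one that matches the real schema.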

How did I go about solving this challenge?

I started by looking into how filing data is recorded in EDGAR. I located the full index files for each quarter of the period covered, along with how they are mapped by year and quarter.
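The year and quarter mapping can be pictured with a small sketch. It assumes the `full-index/<year>/QTR<n>/` directory layout on sec.gov and the `master.idx` file name. `listQuarterlyIndexUrls` is a hypothetical helper that enumerates the paths directly, purely to show the layout. It is not how SecSecGo discovers them.

```javascript
// Base of EDGAR's quarterly full-index directory tree (assumed layout).
const FULL_INDEX_BASE = 'https://www.sec.gov/Archives/edgar/full-index';

// Hypothetical helper: lists one index URL per quarter for every year in
// the inclusive range [startYear, endYear].
function listQuarterlyIndexUrls(startYear, endYear, fileName = 'master.idx') {
  const urls = [];
  for (let year = startYear; year <= endYear; year++) {
    for (let quarter = 1; quarter <= 4; quarter++) {
      urls.push(`${FULL_INDEX_BASE}/${year}/QTR${quarter}/${fileName}`);
    }
  }
  return urls;
}
```

Each full year contributes four index files, so the total grows linearly with the number of years covered.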

I opted for a scan-and-retrieve approach rather than hard-coding known paths, in case an API endpoint changes. Once the list of URLs is retrieved, the next step is to fetch a total of 112 indexes, at roughly 25 MB per index. Sec.gov has a rate limit of 10 requests per second and will ban an IP address for 10 minutes if you exceed it. I've created a concurrent connection limiter to prevent a ban.
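A concurrent connection limiter of this kind could look roughly like the sketch below. It is not the limiter shipped in sec2go: `createLimiter` is a hypothetical helper that caps how many promise-returning tasks are in flight at once. A concurrency cap alone does not strictly bound requests per second, so a real crawler may also want a delay between requests.

```javascript
// Hypothetical helper: returns a `limit(task)` function that runs at most
// `maxConcurrent` tasks at a time and queues the rest in FIFO order.
function createLimiter(maxConcurrent) {
  let active = 0;
  const queue = [];

  const next = () => {
    if (active >= maxConcurrent || queue.length === 0) return;
    active++;
    const { task, resolve, reject } = queue.shift();
    Promise.resolve()
      .then(task) // start the task
      .then(resolve, reject) // forward its outcome to the caller
      .finally(() => {
        active--; // free the slot, then start the next queued task
        next();
      });
  };

  return (task) =>
    new Promise((resolve, reject) => {
      queue.push({ task, resolve, reject });
      next();
    });
}
```

Usage would be along the lines of `const limit = createLimiter(10);` followed by `await Promise.all(urls.map((url) => limit(() => fetch(url))))`, so that no more than ten downloads are open at any moment.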

Extracting form data was easy; retaining it wasn't. Each index can exceed 300,000 records, so writing the output to a dictionary keyed by CIK, with an array of filings under each key, isn't ideal. Heap memory ran out after extracting only 30% of the indexes. Holding every filing in memory on the main execution thread until all filings have been extracted isn't an option.

I resolved the issue by extending the ETL pipeline with an async function that commits the data to the DB. Running 10 ETL pipelines concurrently speeds up the process, which takes only 12 minutes to populate a locally hosted PostgreSQL DB.
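The idea of committing data as it is extracted, instead of accumulating it in memory, can be sketched as follows. This is not the sec2go pipeline itself: `parseIndexLine`, `loadIndex` and the `saveBatch` callback are hypothetical names. The pipe-delimited row layout (`CIK|Company Name|Form Type|Date Filed|Filename`) assumes EDGAR's `master.idx` format, and the default batch size is arbitrary.

```javascript
// Parses one pipe-delimited index row; returns null for header, separator,
// or otherwise malformed lines (assumes the master.idx column layout).
function parseIndexLine(line) {
  const parts = line.split('|').map((part) => part.trim());
  if (parts.length !== 5 || !/^\d+$/.test(parts[0])) return null;
  const [cik, company, formType, dateFiled, filename] = parts;
  return { cik, company, formType, dateFiled, filename };
}

// Streams lines through the parser and hands records to `saveBatch` in
// fixed-size chunks, so at most `batchSize` records are held in memory.
async function loadIndex(lines, saveBatch, batchSize = 1000) {
  let batch = [];
  let total = 0;
  for await (const line of lines) {
    const record = parseIndexLine(line);
    if (!record) continue;
    batch.push(record);
    if (batch.length >= batchSize) {
      await saveBatch(batch); // e.g. a bulk INSERT into the database
      total += batch.length;
      batch = [];
    }
  }
  if (batch.length > 0) {
    await saveBatch(batch); // flush the final partial batch
    total += batch.length;
  }
  return total;
}
```

Because `lines` can be any sync or async iterable, the same function could consume a `readline` interface over a downloaded index file, and `saveBatch` is where the asynchronous database commit would go.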

Environment Variables Required

POSTGRES_USERNAME

POSTGRES_PASSWORD

POSTGRES_DB

POSTGRES_HOST

PORT

Installation

```shell
$ npm i sec2go
```

Crawler Setup

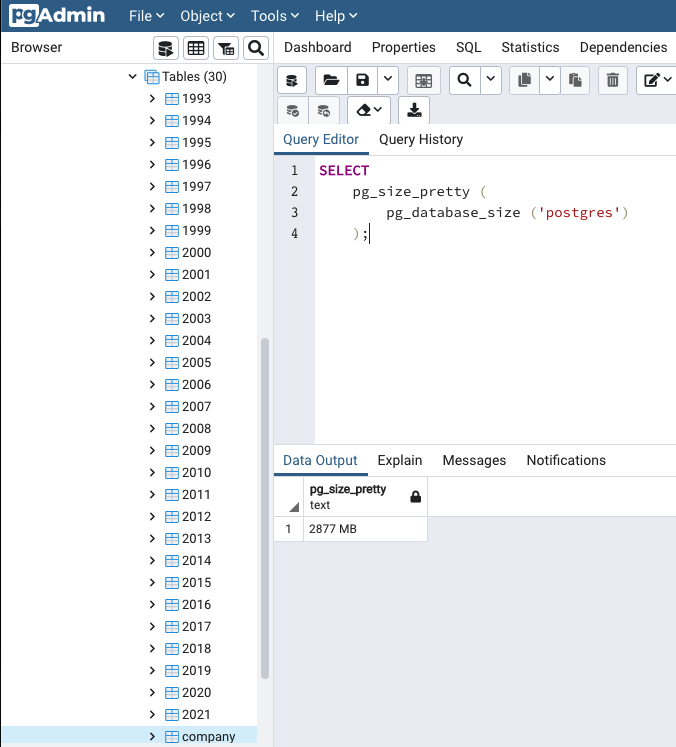

The crawler component is used to retrieve, process and save the SEC EDGAR full index. This process takes around 12 minutes to complete and requires a MySQL or PostgreSQL DB and at least 2.7 GB of disk space.

```javascript
const { Crawler } = require('sec2go');

const crawler = new Crawler();
```

GraphQL API Setup

```javascript
const { PORT } = process.env;

// Build the GraphQL type definitions and resolvers from the sec2go ORM
const { Orm } = require('sec2go');
const { typeDefs, resolvers } = new Orm();

// Express app with JSON body parsing
const app = require('express')();
const bodyParser = require('body-parser');
app.use(bodyParser.json());

// Mount Apollo Server on the Express app (served at /graphql)
const { ApolloServer } = require('apollo-server-express');
const server = new ApolloServer({ typeDefs, resolvers });
server.applyMiddleware({ app });

app.listen({ port: PORT }, () => {
  console.log(`🚀 GraphQL Server ready at localhost:${PORT}/graphql`);
});
```

Technical Documentation

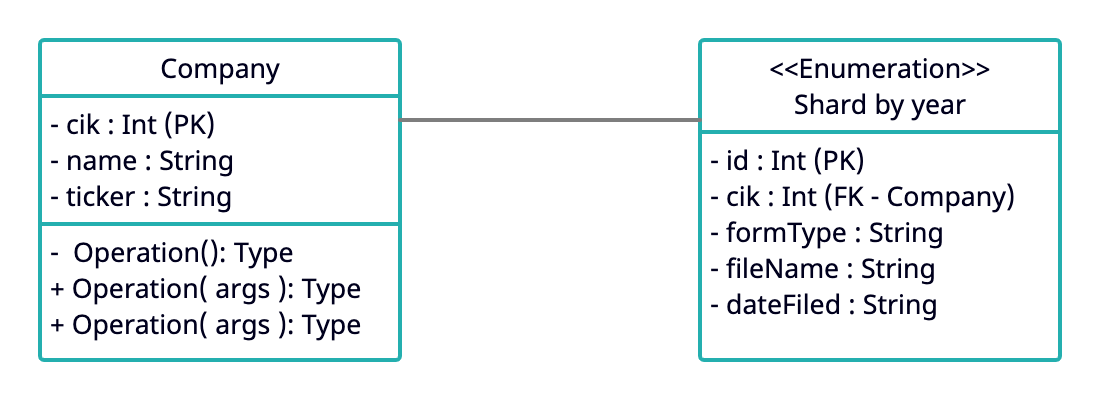

UML Class Diagram

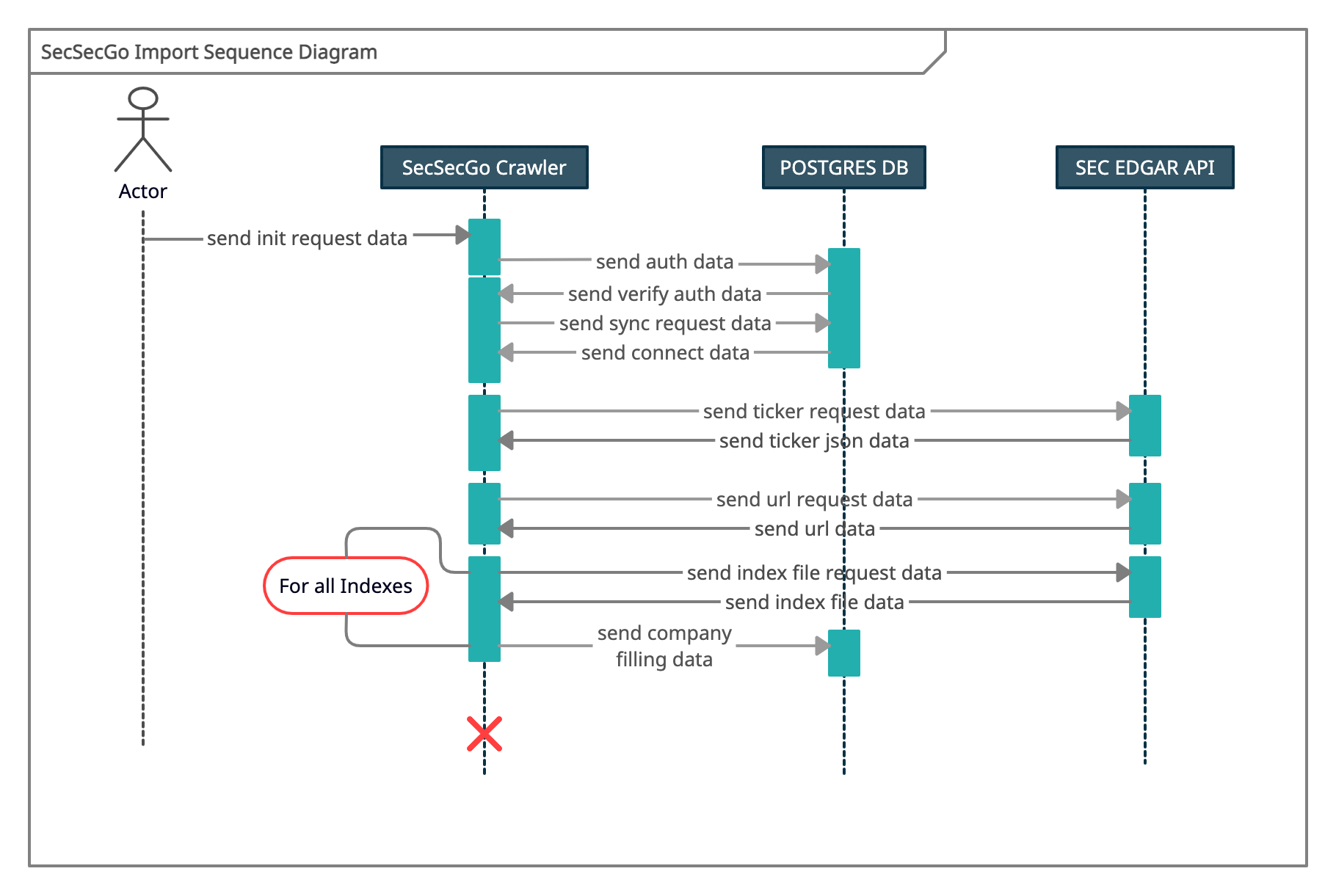

Sequence Diagram

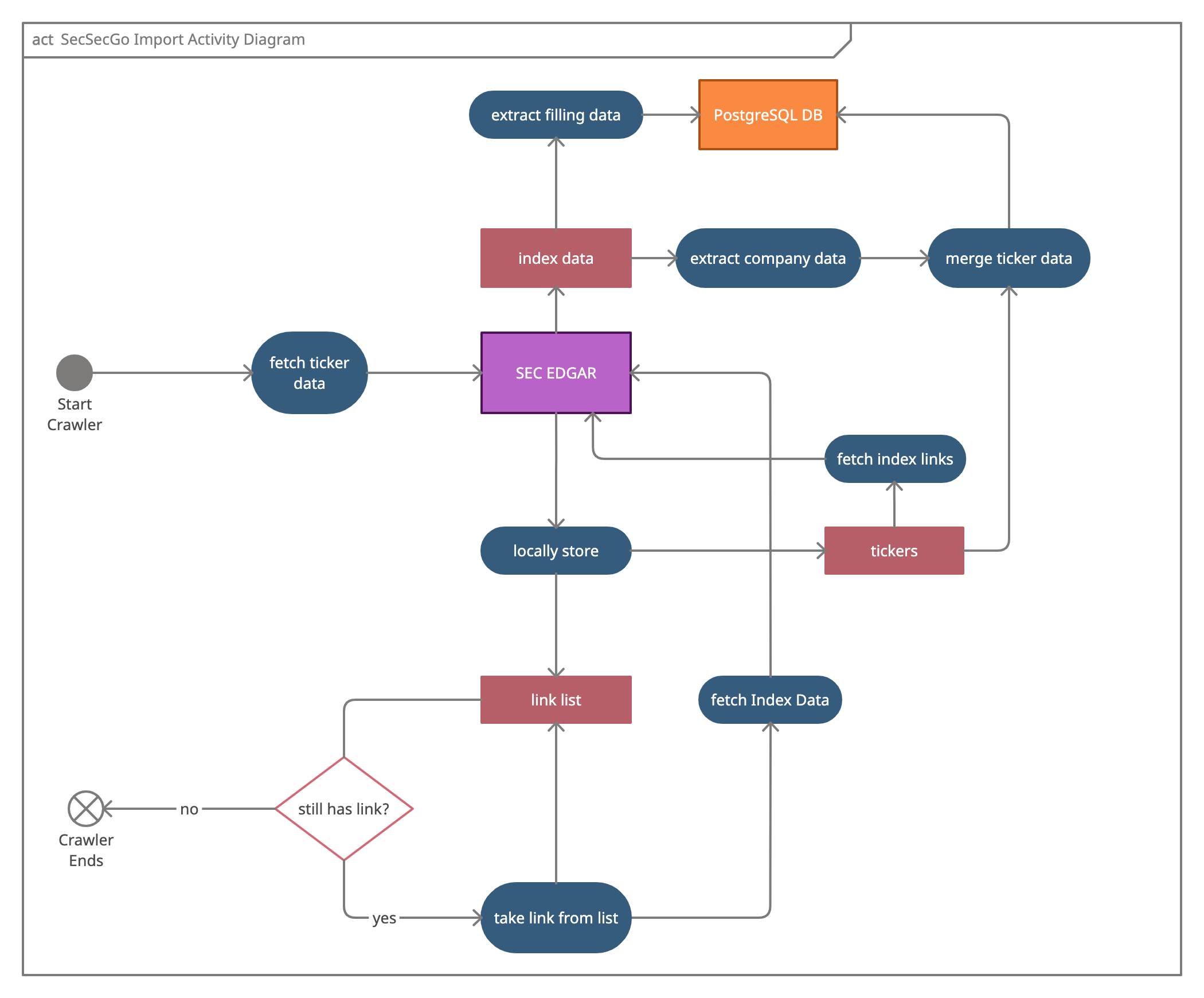

UML Activity Diagram

API Screenshots