reverse-proxy-rate-limiter

v0.1.2

Reverse proxy written in Node.js that limits incoming requests based on their origin and the number of active concurrent requests.

reverse-proxy-rate-limiter

reverse-proxy-rate-limiter is a reverse proxy written in Node.js that protects the service behind it from being overloaded. It limits incoming requests based on their origin and the number of active concurrent requests while ensuring that the service’s capacity is fully utilized.

Use case

Web services are often used by very different types of clients. Imagine a service that stores presentations: there could be users loading presentations in their browsers, and perhaps a search service that wants to index the content of the presentations. In this scenario, requests from a user who wants to present are much more important than requests from the search service. The search service can wait and come back later if it could not retrieve a presentation, but the user cannot.

The reverse-proxy-rate-limiter helps manage such a situation. Standing between the clients and the service that provides presentations, it ensures that the search service does not consume capacity that is needed for serving requests from users. The capacity required for serving user traffic is computed dynamically. If users stopped requesting presentations from this service, the search service would automatically be allowed to consume all of the service’s capacity.

Rate-limiting concept

Buckets

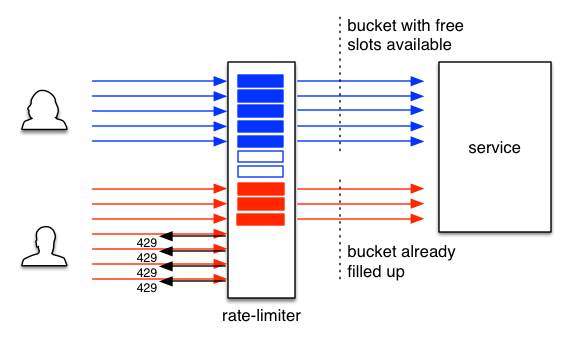

The reverse-proxy-rate-limiter prioritizes requests from different clients by assigning them to different buckets (shown as red and blue slots within the rate-limiter in the figure above) based on HTTP headers. A bucket is essentially a set of limitation rules that are applied to the traffic mapped to it. Based on those rules and on the number of active requests, both in the bucket and in the service overall, a request is either forwarded to the service or rejected (indicated by the 429 status code in the figure). Buckets can expand beyond their designated capacity if other buckets are not fully consuming theirs. For example, if the blue client above stopped sending requests, the red client would eventually be able to fill most of the slots, so that none of its requests would be rejected.
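The bucket behaviour described above can be sketched roughly as follows. This is a simplified illustration and not the library's actual algorithm: the per-bucket `capacity`, the `bufferSlots` value, the function names and the admission rule itself are all assumptions made for the example.

```javascript
// Simplified bucket admission sketch (illustrative only, not the library's code).
// `capacity` and `bufferSlots` are hypothetical names used for this example.
function createState(maxRequests, bufferSlots, capacities) {
  const buckets = {};
  for (const [name, capacity] of Object.entries(capacities)) {
    buckets[name] = { capacity, active: 0 };
  }
  return { maxRequests, bufferSlots, buckets };
}

// Number of requests currently in flight across all buckets.
function totalActive(state) {
  return Object.values(state.buckets).reduce((sum, b) => sum + b.active, 0);
}

// Returns true (and counts the request as active) if it may be forwarded.
function admit(state, bucketName) {
  const bucket = state.buckets[bucketName];
  const free = state.maxRequests - totalActive(state);
  if (free <= 0) return false;                          // service is full
  const withinShare = bucket.active < bucket.capacity;  // bucket has not used up its own share
  const canBorrow = free > state.bufferSlots;           // spare slots beyond the buffer remain
  if (!withinShare && !canBorrow) return false;
  bucket.active += 1;
  return true;
}

// Call this when a forwarded request has finished.
function release(state, bucketName) {
  const bucket = state.buckets[bucketName];
  if (bucket.active > 0) bucket.active -= 1;
}

// 10 slots in total, 2 kept as a buffer, 5 designated slots per client.
const state = createState(10, 2, { red: 5, blue: 5 });
let redAdmitted = 0;
for (let i = 0; i < 10; i++) {
  if (admit(state, 'red')) redAdmitted += 1;
}
console.log(redAdmitted);          // 8: red grows past its 5 slots while blue is idle
console.log(admit(state, 'blue')); // true: blue is still within its own share
```

In this sketch the red client can fill most of the slots while blue is idle, yet a blue request that arrives later is still admitted.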

Concurrent Active Requests

Many rate-limiting solutions reject requests based on the number of incoming requests. This is not an effective measure if the service is handling requests that take different amounts of time to be processed. 100 requests/second might be fine if the requests are processed within 10ms, but not so much if they take 1000ms each.

Instead of this approach, the reverse-proxy-rate-limiter limits incoming traffic based on the number of requests that are already handled concurrently by the backend service.
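To make the numbers above concrete, the average number of requests in flight can be estimated as arrival rate times processing time (Little's law). The helper below is only an illustration of that arithmetic; `averageConcurrency` is a name made up for this example and is not part of the rate-limiter.

```javascript
// Average in-flight requests = arrival rate * average processing time (Little's law).
function averageConcurrency(requestsPerSecond, processingMs) {
  return (requestsPerSecond * processingMs) / 1000;
}

console.log(averageConcurrency(100, 10));   // 1
console.log(averageConcurrency(100, 1000)); // 100
```

The same request rate therefore keeps either 1 or 100 requests active at once, which is why the number of concurrent requests is the more meaningful quantity to limit.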

Getting started

reverse-proxy-rate-limiter can be installed in a few seconds. The steps shown in our screencast are as follows.

First, clone the rate-limiter GitHub repository:

    $ git clone git@github.com:prezi/reverse-proxy-rate-limiter.git

Then install the required npm packages:

    $ cd reverse-proxy-rate-limiter
    $ npm install

You can start the rate-limiter with a sample settings file that can be found in the examples directory:

    $ cat examples/settings.sample.json
    {
      "serviceName": "authservice",
      "listenPort": 7000,
      "forwardPort": 7001,
      "forwardHost": "localhost",
      "configRefreshInterval": 60000,
      "configEndpoint": "file:./examples/limits-config.sample.json"
    }

The key points are that the rate-limiter listens on port 7000 and forwards HTTP requests to localhost:7001.

In this case, the limits configuration is read from a file, examples/limits-config.sample.json:

    $ cat examples/limits-config.sample.json
    {
      "version": 1,
      "max_requests": 2,
      "buffer_ratio": 0,
      "buckets": [
        {
          "name": "default"
        }
      ]
    }

No special rules are defined, but the rate-limiter won't allow more than 2 requests to be served simultaneously.

Let's start the rate-limiter:

    $ node start-rate-limiter.js -c examples/settings.sample.json

Next, start a sample backend service that listens on port 7001 and replies with JSON containing all the headers it received from the rate-limiter:

    $ node examples/mock-server.js

We can now send an HTTP request to the rate-limiter:

    $ curl localhost:7000/test/
    Hello ratelimiter!
    /test/
    {
      "accept": "*/*",
      "host": "localhost:7000",
      "user-agent": "curl/7.30.0",
      "connection": "close",
      "x-ratelimiter-bucket": "default"
    }

x-ratelimiter-bucket is a special header that the rate-limiter adds to the forwarded request to give the backend service some information about the traffic.
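The contents of examples/mock-server.js are not reproduced here. As a rough idea of what such a mock backend could look like, here is a minimal sketch that echoes the request path and headers in the shape of the output above and delays answers on the /sleep5secs/ path. The names `renderBody` and `createMockServer` and the `MOCK_PORT` environment variable are assumptions made for this example, not the project's actual code.

```javascript
const http = require('http');

// Builds the plain-text body: greeting, request path, then the received headers as JSON.
function renderBody(url, headers) {
  return 'Hello ratelimiter!\n' + url + '\n' + JSON.stringify(headers, null, 2) + '\n';
}

// Creates (but does not start) a server that echoes path and headers.
function createMockServer() {
  return http.createServer((req, res) => {
    // Slow endpoint: wait 5 seconds before answering.
    const delayMs = req.url.startsWith('/sleep5secs') ? 5000 : 0;
    setTimeout(() => {
      res.writeHead(200, { 'Content-Type': 'text/plain' });
      res.end(renderBody(req.url, req.headers));
    }, delayMs);
  });
}

// Listen only when a port is given, e.g. `MOCK_PORT=7001 node mock.js`
// (MOCK_PORT is a hypothetical variable used by this sketch only).
if (process.env.MOCK_PORT) {
  createMockServer().listen(Number(process.env.MOCK_PORT));
}
```

A backend written this way can read `req.headers['x-ratelimiter-bucket']` to see which bucket a forwarded request was assigned to.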

Let's overload the service so that the rate-limiter starts rejecting requests. This is easy: the mock server does not answer requests to the /sleep5secs/ URL for 5 seconds, so we can easily have more than 2 requests in flight at once:

    $ curl localhost:7000/sleep5secs/ &
    [1] 25948
    $ curl localhost:7000/sleep5secs/ &
    [2] 25954
    $ curl localhost:7000/sleep5secs/ &
    [3] 25960
    Request has been rejected by the rate limiter[3] + 25960 done
    $ curl localhost:7000/sleep5secs/ &
    [3] 25966
    Request has been rejected by the rate limiter[3] + 25966 done
    $ curl localhost:7000/sleep5secs/ &
    [3] 25972
    Request has been rejected by the rate limiter[3] + 25972 done

As you can see, the rate-limiter did not let the third request through to the service while two requests were still being served. This is the gist of how the rate-limiter protects your service.
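The same experiment can be scripted instead of typed by hand. The sketch below fires several requests at once and tallies the returned status codes; `getStatus`, `tally` and `fireConcurrently` are helper names invented for this example, and it assumes the rate-limiter and the mock server from the steps above are already running.

```javascript
const http = require('http');

// Sends one GET request and resolves with the HTTP status code.
function getStatus(url) {
  return new Promise((resolve, reject) => {
    http.get(url, (res) => {
      res.resume(); // discard the body, only the status matters here
      res.on('end', () => resolve(res.statusCode));
    }).on('error', reject);
  });
}

// Counts how often each status code occurred.
function tally(statusCodes) {
  const counts = {};
  for (const code of statusCodes) {
    counts[code] = (counts[code] || 0) + 1;
  }
  return counts;
}

// Fires `count` requests at once and tallies the status codes.
async function fireConcurrently(url, count) {
  const requests = Array.from({ length: count }, () => getStatus(url));
  return tally(await Promise.all(requests));
}

// Usage: node overload.js http://localhost:7000/sleep5secs/ 3
if (process.argv[2]) {
  fireConcurrently(process.argv[2], Number(process.argv[3] || 3))
    .then((counts) => console.log(counts))
    .catch((err) => console.error(err.message));
}
```

With the sample limits configuration, requests beyond the second concurrent one should show up under the rejection status, which is 429 with the default handler.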

Configuration

There are two types of configuration in the context of the reverse-proxy-rate-limiter. One configures the reverse proxy itself, the other one configures the buckets and their limits. To avoid confusion, we refer to the former as “settings” and the latter as “limits configuration”.

Settings

There are 5 levels of sources for the settings (all but the first one optional). From lowest to highest priority:

- Default settings values hard-coded in lib/reverse-proxy-rate-limiter/settings.js
- $PWD/config/default.json if it exists
- $PWD/config/$NODE_ENV.json if it exists
- The second parameter to lib/reverse-proxy-rate-limiter/settings.js#load is an optional function which gets called with a ConfigBuilder instance as its only argument. It can make add{Obj,File,Dir} calls on it to add any custom config sources.
- The first parameter to lib/reverse-proxy-rate-limiter/settings.js#load is an optional string which is the path to a settings file. If called from lib/reverse-proxy-rate-limiter/settings.js#init (used by start-rate-limiter.js), the command-line argument --config (or just -c) is passed in here.
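The effect of this priority order can be illustrated with a small sketch in which later sources override earlier ones. It does not use the project's ConfigBuilder: `mergeSettings`, the shallow merge and the sample values are assumptions made for the example, and missing optional sources are represented by `undefined`.

```javascript
// Later sources override earlier ones, mirroring the lowest-to-highest order above.
// This is a shallow merge; the real implementation may merge differently.
function mergeSettings(sources) {
  return sources.reduce((merged, source) => Object.assign(merged, source || {}), {});
}

const hardCodedDefaults = { listenPort: 7000, forwardHost: 'localhost' }; // hypothetical values
const envFile = { forwardPort: 7001 }; // stands in for $PWD/config/$NODE_ENV.json
const cliFile = { listenPort: 8000 };  // stands in for the file passed via --config

// Order: defaults, default.json (absent), $NODE_ENV.json, custom sources (absent), --config file.
const settings = mergeSettings([hardCodedDefaults, undefined, envFile, undefined, cliFile]);
console.log(settings);
// { listenPort: 8000, forwardHost: 'localhost', forwardPort: 7001 }
```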

Limits Configuration

The limits configuration is periodically loaded by the reverse-proxy-rate-limiter from a file or from the backend service behind the rate-limiter. The exact path or URL is determined in the settings (it defaults to <listenHost>:<listenPort>/rate-limiter). An example limits configuration can be found in examples/limits-config.sample.json.
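Periodic loading from a file endpoint could look roughly like the sketch below. It is not the project's loader: `parseEndpoint`, `loadLimitsFromFile` and `watchLimits` are names invented for the example, only the file: form of the endpoint seen in the sample settings is handled, and keeping the previous limits when a reload fails is an assumption.

```javascript
const fs = require('fs');

// Splits an endpoint such as "file:./limits.json" into its kind and location.
function parseEndpoint(endpoint) {
  if (endpoint.startsWith('file:')) {
    return { kind: 'file', location: endpoint.slice('file:'.length) };
  }
  return { kind: 'url', location: endpoint };
}

// Reads and parses a limits file; returns null if it is missing or invalid.
function loadLimitsFromFile(path) {
  try {
    return JSON.parse(fs.readFileSync(path, 'utf8'));
  } catch (err) {
    return null;
  }
}

// Reloads the limits every `intervalMs` and passes each valid result to `onUpdate`.
// A failed reload is skipped, so the caller keeps its previous limits.
function watchLimits(endpoint, intervalMs, onUpdate) {
  const { kind, location } = parseEndpoint(endpoint);
  if (kind !== 'file') throw new Error('this sketch only handles file: endpoints');
  const reload = () => {
    const limits = loadLimitsFromFile(location);
    if (limits) onUpdate(limits);
  };
  reload();
  return setInterval(reload, intervalMs); // pass the result to clearInterval to stop
}
```

With the sample settings this would be called as `watchLimits('file:./examples/limits-config.sample.json', 60000, applyLimits)`, where `applyLimits` is whatever callback installs the new limits.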

Events

The rate-limiter can be run directly (node start-rate-limiter.js -c settings.sample.json) or within another project that depends on it. In the latter case, you can listen to events - to implement monitoring, for example - like this:

    var rateLimiter = require("reverse-proxy-rate-limiter");
    var rl = rateLimiter.createRateLimiter(settings);

    rl.proxyEvent.on('rejected', function(req, errorCode, reason) {
        console.log('Rejected: ', reason);
    });

The following events are emitted:

- forwarded, params: req - when an incoming request was forwarded to the backend service
- served, params: req, res - when a forwarded request was successfully served to the client
- failed, params: err, req, res - when the request failed between the rate-limiter and the backend service
- rejected, params: req, errorCode, reason - when an incoming request was rejected
- rejectRequest, params: req, res, errorCode, reason - allows custom handling of a rejected request. It falls back to a default handler that returns a 429 response if the event is not handled.
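As a minimal monitoring sketch, the events listed above can be counted with a few listeners. The helper `attachCounters` is a name made up for this example, and a plain Node.js EventEmitter stands in for `rl.proxyEvent` so that the snippet runs without a configured rate-limiter; it only assumes that `rl.proxyEvent` offers the `on` method shown earlier.

```javascript
const { EventEmitter } = require('events');

// Subscribes to the documented event names and keeps a running count of each.
function attachCounters(emitter) {
  const counts = { forwarded: 0, served: 0, failed: 0, rejected: 0 };
  for (const name of Object.keys(counts)) {
    emitter.on(name, () => { counts[name] += 1; });
  }
  return counts;
}

// Stand-in emitter for demonstration; in a real setup pass rl.proxyEvent instead.
const fakeProxyEvent = new EventEmitter();
const counts = attachCounters(fakeProxyEvent);
fakeProxyEvent.emit('forwarded', {});
fakeProxyEvent.emit('rejected', {}, 429, 'example reason');
console.log(counts);
// { forwarded: 1, served: 0, failed: 0, rejected: 1 }
```

The counts could then be reported to whatever monitoring system is in use.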

Naught integration

The reverse-proxy-rate-limiter can be wrapped with naught so that it supports zero-downtime deployment and automatic restarts if the Node.js process dies.

Contribution

Pull requests are very welcome. For discussions, please head over to the mailing list.

We have a JSHint configuration in place that can help with polishing your code.

License

reverse-proxy-rate-limiter is available under the Apache License, Version 2.0.