promptfoo_sql

v0.17.2

Published

LLM eval & testing toolkit

Downloads

1

Readme

promptfoo: test your prompts

promptfoo is a tool for testing and evaluating LLM prompt quality.

With promptfoo, you can:

- Systematically test prompts against predefined test cases

- Evaluate quality and catch regressions by comparing LLM outputs side-by-side

- Speed up evaluations with caching and concurrent tests

- Score outputs automatically by defining "expectations"

- Use as a CLI, or integrate into your workflow as a library

- Use OpenAI models, open-source models like Llama and Vicuna, or integrate custom API providers for any LLM API

The goal: test-driven prompt engineering, rather than trial-and-error.

» View full documentation «

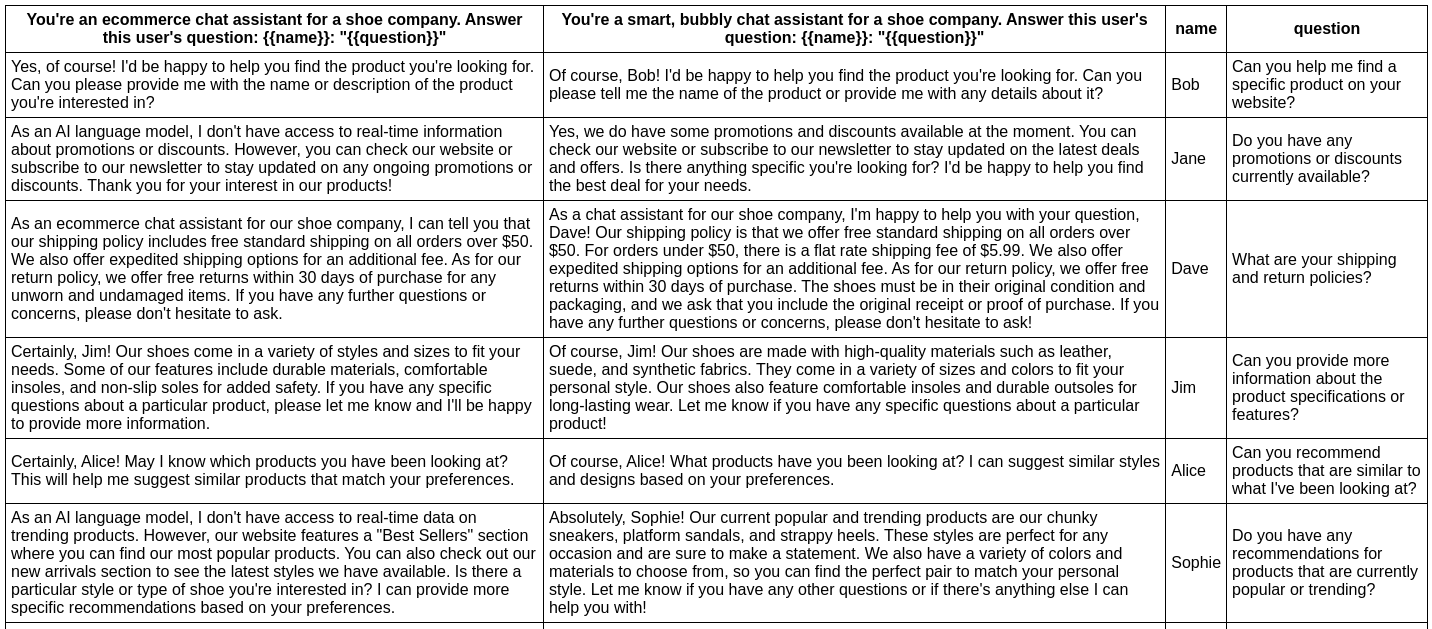

promptfoo produces matrix views that let you quickly evaluate outputs across many prompts.

Here's an example of a side-by-side comparison of multiple prompts and inputs:

It works on the command line too:

Workflow

Start by establishing a handful of test cases - core use cases and failure cases that you want to ensure your prompt can handle.

As you explore modifications to the prompt, use promptfoo eval to rate all outputs. This ensures the prompt is actually improving overall.

As you collect more examples and establish a user feedback loop, continue to build the pool of test cases.

Usage

To get started, run this command:

npx promptfoo initThis will create some placeholders in your current directory: prompts.txt and promptfooconfig.yaml.

After editing the prompts and variables to your liking, run the eval command to kick off an evaluation:

npx promptfoo evalConfiguration

The YAML configuration format runs each prompt through a series of example inputs (aka "test case") and checks if they meet requirements (aka "assert").

See the Configuration docs for a detailed guide.

prompts: [prompt1.txt, prompt2.txt]

providers: [openai:gpt-3.5-turbo, localai:chat:vicuna]

defaultTest:

assert:

tests:

- description: 'Test translation to French'

vars:

language: French

input: Hello world

assert:

- type: contains-json

- type: javascript

value: output.startsWith('Bonjour')

- description: 'Test translation to German'

vars:

language: German

input: How's it going?

assert:

- type: similar

value: was geht

threshold: 0.6 # cosine similarity

- type: llm-rubric

value: does not describe self as an AI, model, or chatbotSupported assertion types

See Test assertions for full details.

| Assertion Type | Returns true if... |

| --------------- | ------------------------------------------------------------------------- |

| equals | output matches exactly |

| contains | output contains substring |

| icontains | output contains substring, case insensitive |

| regex | output matches regex |

| contains-some | output contains some in list of substrings |

| contains-all | output contains all list of substrings |

| is-json | output is valid json |

| contains-json | output contains valid json |

| javascript | provided Javascript function validates the output |

| webhook | provided webhook returns {pass: true} |

| similar | embeddings and cosine similarity are above a threshold |

| llm-rubric | LLM output matches a given rubric, using a Language Model to grade output |

| rouge-n | Rouge-N score is above a given threshold |

Every test type can be negated by prepending not-. For example, not-equals or not-regex.

Tests from spreadsheet

Some people prefer to configure their LLM tests in a CSV. In that case, the config is pretty simple:

prompts: [prompts.txt]

providers: [openai:gpt-3.5-turbo]

tests: tests.csvSee example CSV.

Command-line

If you're looking to customize your usage, you have a wide set of parameters at your disposal.

| Option | Description |

| ----------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| -p, --prompts <paths...> | Paths to prompt files, directory, or glob |

| -r, --providers <name or path...> | One of: openai:chat, openai:completion, openai:model-name, localai:chat:model-name, localai:completion:model-name. See API providers |

| -o, --output <path> | Path to output file (csv, json, yaml, html) |

| --tests <path> | Path to external test file |

| -c, --config <path> | Path to configuration file. promptfooconfig.js/json/yaml is automatically loaded if present |

| -j, --max-concurrency <number> | Maximum number of concurrent API calls |

| --table-cell-max-length <number> | Truncate console table cells to this length |

| --prompt-prefix <path> | This prefix is prepended to every prompt |

| --prompt-suffix <path> | This suffix is append to every prompt |

| --grader | Provider that will conduct the evaluation, if you are using LLM to grade your output |

After running an eval, you may optionally use the view command to open the web viewer:

npx promptfoo viewExamples

Prompt quality

In this example, we evaluate whether adding adjectives to the personality of an assistant bot affects the responses:

npx promptfoo eval -p prompts.txt -r openai:gpt-3.5-turbo -t tests.csvThis command will evaluate the prompts in prompts.txt, substituing the variable values from vars.csv, and output results in your terminal.

You can also output a nice spreadsheet, JSON, YAML, or an HTML file:

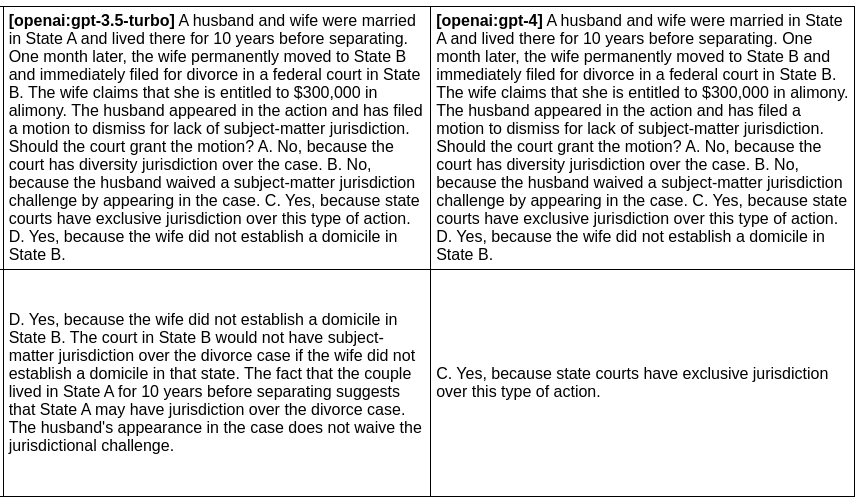

Model quality

In the next example, we evaluate the difference between GPT 3 and GPT 4 outputs for a given prompt:

npx promptfoo eval -p prompts.txt -r openai:gpt-3.5-turbo openai:gpt-4 -o output.htmlProduces this HTML table:

Usage (node package)

You can also use promptfoo as a library in your project by importing the evaluate function. The function takes the following parameters:

testSuite: the Javascript equivalent of the promptfooconfig.yamlinterface TestSuiteConfig { providers: string[]; // Valid provider name (e.g. openai:gpt-3.5-turbo) prompts: string[]; // List of prompts tests: string | TestCase[]; // Path to a CSV file, or list of test cases defaultTest?: Omit<TestCase, 'description'>; // Optional: add default vars and assertions on test case outputPath?: string; // Optional: write results to file } interface TestCase { description?: string; vars?: Record<string, string>; assert?: Assertion[]; prompt?: PromptConfig; grading?: GradingConfig; } interface Assertion { type: string; value?: string; threshold?: number; // For similarity assertions provider?: ApiProvider; // For assertions that require an LLM provider }options: misc options related to how the tests are runinterface EvaluateOptions { maxConcurrency?: number; showProgressBar?: boolean; generateSuggestions?: boolean; }

Example

promptfoo exports an evaluate function that you can use to run prompt evaluations.

import promptfoo from 'promptfoo';

const results = await promptfoo.evaluate({

prompts: ['Rephrase this in French: {{body}}', 'Rephrase this like a pirate: {{body}}'],

providers: ['openai:gpt-3.5-turbo'],

tests: [

{

vars: {

body: 'Hello world',

},

},

{

vars: {

body: "I'm hungry",

},

},

],

});This code imports the promptfoo library, defines the evaluation options, and then calls the evaluate function with these options.

See the full example here, which includes an example results object.

Configuration

- Main guide: Learn about how to configure your YAML file, setup prompt files, etc.

- Configuring test cases: Learn more about how to configure expected outputs and test assertions.

Installation

API Providers

We support OpenAI's API as well as a number of open-source models. It's also to set up your own custom API provider. See Provider documentation for more details.

Development

Contributions are welcome! Please feel free to submit a pull request or open an issue.

promptfoo includes several npm scripts to make development easier and more efficient. To use these scripts, run npm run <script_name> in the project directory.

Here are some of the available scripts:

build: Transpile TypeScript files to JavaScriptbuild:watch: Continuously watch and transpile TypeScript files on changestest: Run test suitetest:watch: Continuously run test suite on changes

» View full documentation «

for node newbies like me

# install node virtual env

sudo pip install nodeenv

# create a virtual env.

nodeenv env

# activate the virtual env.

. env/bin/activate

# install typescript

npm install typescript -g

# install dependencies in package.json

npm install

# build

npm run build

# test

npm run test