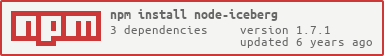

node-iceberg

v1.7.1


nodejs lightweight scraper/crawler


A lightweight Node.js tree-based scraper/crawler. No more callbacks! Just async/await.

Installation

Download and install the stable release via the npm package manager:

npm install node-iceberg --save

Or clone the latest code directly from git:

git clone https://github.com/manuasir/node-iceberg.git

cd node-iceberg

npm install

Usage

This package lets you extract filtered DOM elements from URLs through customized iterators. It works in two main modes:

- Scraper mode: Get elements using customized selectors.
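In scraper mode, a selector such as `{ element: 'a', attrib: 'href', values: [...] }` (as in the Blogspot example that follows) narrows the extracted attribute values to the ones you care about. The sketch below illustrates only that filtering step, assuming `values` behaves as a plain substring match on the attribute value. It is not node-iceberg's implementation: `filterByValues` and the sample hrefs are hypothetical.

```javascript
// Hypothetical helper (not part of node-iceberg): keeps only attribute values
// (e.g. hrefs) that contain at least one of the given fragments. An omitted or
// empty list keeps everything, mirroring the optional `values` field.
function filterByValues(attribValues, values) {
  if (!values || values.length === 0) return attribValues.slice()
  return attribValues.filter((v) => values.some((fragment) => v.includes(fragment)))
}

// Hypothetical hrefs, as if already extracted from <a> elements on one page
const hrefs = [
  'http://www.mediafire.com/file/abc',
  'http://someblog.blogspot.com/2017/01/post.html',
  'https://mega.nz/#!xyz',
  'http://adf.ly/1a2b3c'
]

const kept = filterByValues(hrefs, ['mediafire', 'mega', 'adf.ly'])
console.log(kept)
```

Only the blog-internal link is dropped here; with `values` omitted, all four hrefs would be kept.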

// Example: collect download links from a Blogspot blog. Run this inside an async function.

const Iceberg = require('node-iceberg')

const getThisDomains = ['mediafire', 'mega', 'adf.ly'] // domains we want links to

const conf = {

// Iterator: the element that leads to the next URL to process (on Blogspot, the "older posts" link)

iteratorElement: {

element: 'a',

cssClass: 'blog-pager-older-link' // optional

},

// Data to extract from the DOM, e.g. download links

selector: {

element: 'a',

attrib: 'href',

values: getThisDomains // optional

}

}

// Maximum depth to explore, i.e. the maximum number of blog pages

const maxLevelDepth = 10

const scraper = new Iceberg("http://someblog.blogspot.com")

const results = await scraper.start(maxLevelDepth, conf)

// Or load a predefined filter instead (new variable names, since scraper, conf and results are already declared above):

const blogScraper = new Iceberg("http://someblog.blogspot.com")

const blogConf = blogScraper.service('blogspot')

const blogResults = await blogScraper.start(maxLevelDepth, blogConf)

Some websites allow pagination directly through URL parameters, e.g. http://url?page=1,2,3... In that case, pass only the configuration object and set the maximum page within it:

// Example: get images from unsecured cameras listed on insecam.org

const Iceberg = require('node-iceberg')

const url = 'http://insecam.org'

const conf = {

iteratorElement: {

url: url,

iterator: '?page=',

maxPage: 5 // maximum page to explore

},

selector: { // elements we want to get

element: 'img',

attrib: 'src'

}

}

const scraper = new Iceberg("http://insecam.org")

scraper.start(conf).then((results) => { console.log(results) }).catch((err) => { console.error(err) })

- Crawler mode: Explores ALL links from a URL until the depth threshold is reached and generates a tree from the crawled data. URLs that have already been explored are included only once.
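Conceptually, crawler mode is a depth-limited traversal that keeps a set of visited URLs so each one enters the tree only once. The following is a minimal breadth-first sketch of that idea, not node-iceberg's implementation: it walks a hypothetical in-memory link graph (`links`) instead of fetching pages, and the `crawl` function, its `{ url, children }` node shape and its depth convention (root at depth 0) are assumptions made for this example.

```javascript
// Hypothetical link graph standing in for real pages:
// each key is a URL, each value the links found on that page.
const links = {
  'http://a.test': ['http://a.test/1', 'http://a.test/2'],
  'http://a.test/1': ['http://a.test/2', 'http://a.test/3'],
  'http://a.test/2': ['http://a.test'],
  'http://a.test/3': ['http://a.test/4'],
  'http://a.test/4': []
}

// Breadth-first crawl: every URL appears in the tree at most once, and no
// node deeper than maxDepth is added (the root sits at depth 0).
function crawl(rootUrl, maxDepth, getLinks) {
  const root = { url: rootUrl, children: [] }
  const visited = new Set([rootUrl])
  const queue = [{ node: root, depth: 0 }]
  while (queue.length > 0) {
    const { node, depth } = queue.shift()
    if (depth >= maxDepth) continue // depth threshold reached: do not expand
    for (const link of getLinks(node.url)) {
      if (visited.has(link)) continue // already explored: skip
      visited.add(link)
      const child = { url: link, children: [] }
      node.children.push(child)
      queue.push({ node: child, depth: depth + 1 })
    }
  }
  return root
}

const tree = crawl('http://a.test', 2, (url) => links[url] || [])
console.log(JSON.stringify(tree, null, 2))
```

With a depth of 2, `http://a.test/3` is reached through `/1`, but `/4` is not; the back-link from `/2` to the root is ignored because the root was already visited. Each extra level can multiply the number of requests, which is why a deep crawl of a large site gets expensive quickly.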

// Warning: this can become a very expensive task

const Iceberg = require('node-iceberg')

const crawler = new Iceberg('http://reddit.com')

const conf = crawler.service('crawler')

const maxLevelDepth = 2

crawler.start(maxLevelDepth, conf).then((results) => { console.log(results) }).catch((err) => { console.error(err) })

Test

If you installed this package from npm, it has already been tested. Otherwise, you can run the tests like this:

npm test