mynth-recacheman-mongo

v1.0.5

MongoDB standalone caching library for Node.js, and also a cache engine for cacheman.

This is a fork of recacheman-mongo, which is itself a fork of cacheman-mongo, with the following differences:

- Support for MongoDB v5.6

- Optional expiry for cache data

recacheman-mongo

MongoDB standalone caching library for Node.js, and also a cache engine for recacheman.

Installation

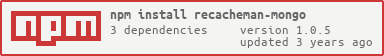

$ npm install recacheman-mongo

Usage

var CachemanMongo = require('recacheman-mongo');
var cache = new CachemanMongo();

// set the value
cache.set('my key', { foo: 'bar' }, function (error) {
  if (error) throw error;

  // get the value
  cache.get('my key', function (error, value) {
    if (error) throw error;
    console.log(value); //-> {foo:"bar"}

    // delete entry
    cache.del('my key', function (error) {
      if (error) throw error;
      console.log('value deleted');
    });
  });
});

API

CachemanMongo([options])

Creates a cacheman-mongo instance. options are valid MongoDB options, including port, host, database and collection.
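The connection-related options correspond roughly to the parts of a MongoDB connection string. If you switch to the connection-string form, credentials must be percent-encoded: a password such as my-p@ssw0rd would otherwise be split at the @. The buildMongoUri helper below is hypothetical and not part of this library, which may assemble its connection differently; it is only a sketch of the mapping, reusing the values from the options example that follows.

```javascript
// Hypothetical helper, not part of recacheman-mongo: builds a MongoDB
// connection string from discrete options. collection and compression are
// cache settings, not connection settings, so they are ignored here.
function buildMongoUri({ host, port, username, password, database }) {
  // Percent-encode credentials so characters such as '@' or ':' in a
  // password are not read as URI delimiters.
  const auth = username
    ? `${encodeURIComponent(username)}:${encodeURIComponent(password || '')}@`
    : '';
  const path = database ? `/${encodeURIComponent(database)}` : '';
  return `mongodb://${auth}${host}:${port}${path}`;
}

const uri = buildMongoUri({
  port: 9999,
  host: '127.0.0.1',
  username: 'beto',
  password: 'my-p@ssw0rd',
  database: 'my-cache-db',
  collection: 'my-collection',
  compression: false
});

console.log(uri); // mongodb://beto:my-p%40ssw0rd@127.0.0.1:9999/my-cache-db
```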

var options = {
  port: 9999,
  host: '127.0.0.1',
  username: 'beto',
  password: 'my-p@ssw0rd',
  database: 'my-cache-db',
  collection: 'my-collection',
  compression: false
};

var cache = new CachemanMongo(options);

You can also pass a valid MongoDB connection string as the first argument, like this:

var options = {
  collection: 'account'
};

var cache = new CachemanMongo('mongodb://127.0.0.1:27017/blog', options);

Or pass a MongoDB db instance directly as the client:

var MongoClient = require('mongodb').MongoClient;

MongoClient.connect('mongodb://127.0.0.1:27017/blog', function (err, db) {
  if (err) throw err;

  var cache = new CachemanMongo(db, { collection: 'account' });

  // or
  cache = new CachemanMongo({ client: db, collection: 'account' });
});

Cache Value Compression

MongoDB has a 16 MB document size limit and currently has no built-in support for compressing document values. You can enable cache value compression for large Buffers by setting the compression option to true; this uses Node's native zlib module to compress with gzip. Only Buffers are compressed, because correctly decompressing and deserializing the variety of other data structures and string encodings is considerably more complex.

Thanks to @Jared314 for adding this feature.

var cache = new CachemanMongo({ compression: true });

cache.set('test1', Buffer.from('something big'), function (err) {
  if (err) throw err;
});
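The Buffer-only rule can be illustrated with Node's zlib module on its own. This is a sketch under assumptions: compressValue, decompressValue and the { compressed, data } record shape are hypothetical and do not describe how recacheman-mongo actually stores compressed values.

```javascript
const zlib = require('zlib');

// Hypothetical helpers mirroring the rule described above: only Buffers
// are gzipped; every other value passes through unchanged.
function compressValue(value) {
  if (Buffer.isBuffer(value)) {
    return { compressed: true, data: zlib.gzipSync(value) };
  }
  return { compressed: false, data: value };
}

function decompressValue(stored) {
  return stored.compressed ? zlib.gunzipSync(stored.data) : stored.data;
}

const big = Buffer.from('something big '.repeat(10000));
const stored = compressValue(big);
console.log(big.length, '->', stored.data.length); // repetitive data shrinks a lot
console.log(decompressValue(stored).equals(big)); // true

const plain = compressValue({ foo: 'bar' });
console.log(plain.compressed); // false
```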

cache.set(key, value, [ttl, [fn]])

Stores or updates a value.
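The ttl argument is optional and given in seconds. When it is present, an entry needs an absolute expiry time; when it is omitted, the entry never expires. The sketch below shows that arithmetic, assuming a hypothetical expireAt field; the library's actual document layout may differ. MongoDB can also delete such documents itself through a TTL index on a Date field, but its background task runs only about once a minute, so a read-time check like the hypothetical isExpired is a common complement.

```javascript
// Hypothetical entry builder: the expireAt field name is an assumption,
// not necessarily what recacheman-mongo stores.
function makeEntry(key, value, ttl, now = Date.now()) {
  const entry = { key, value };
  // TTL is given in seconds; Date arithmetic works in milliseconds.
  if (typeof ttl === 'number' && ttl > 0) {
    entry.expireAt = new Date(now + ttl * 1000);
  }
  return entry;
}

// An entry without expireAt never expires.
function isExpired(entry, now = Date.now()) {
  return entry.expireAt !== undefined && entry.expireAt.getTime() <= now;
}

const t0 = Date.UTC(2024, 0, 1);
const withTtl = makeEntry('foo', { a: 'bar' }, 60, t0);
const noTtl = makeEntry('foo', { a: 'bar' }, undefined, t0);

console.log(withTtl.expireAt.toISOString()); // 2024-01-01T00:01:00.000Z
console.log(isExpired(withTtl, t0 + 59 * 1000)); // false
console.log(isExpired(withTtl, t0 + 60 * 1000)); // true
console.log(isExpired(noTtl, t0 + 365 * 24 * 3600 * 1000)); // false
```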

cache.set('foo', { a: 'bar' }, function (err, value) {
  if (err) throw err;
  console.log(value); //-> {a:'bar'}
});

Or add a TTL (time to live) in seconds, like this:

// key will expire in 60 seconds
cache.set('foo', { a: 'bar' }, 60, function (err, value) {
  if (err) throw err;
  console.log(value); //-> {a:'bar'}
});

cache.get(key, fn)

Retrieves the value for a given key. If there is no value for the key, null is returned.
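Because a missing key yields null, a common way to use get is the cache-aside pattern: read, and on a miss load the value, store it and return it. getOrLoad and the Map-backed stand-in below are hypothetical helpers written for illustration; they follow the callback signatures of cache.get and cache.set documented here, so a real CachemanMongo instance could in principle be passed instead. Note that a value deliberately cached as null cannot be told apart from a miss.

```javascript
// Hypothetical Map-backed stand-in with the documented callback signatures;
// it exists only so this sketch runs without MongoDB.
function createMemoryCache() {
  const store = new Map();
  return {
    get(key, fn) {
      // A missing key yields null, as documented for cache.get.
      setImmediate(() => fn(null, store.has(key) ? store.get(key) : null));
    },
    set(key, value, ttl, fn) {
      // The TTL is optional, so the callback may arrive in its place.
      if (typeof ttl === 'function') {
        fn = ttl;
        ttl = undefined;
      }
      store.set(key, value);
      setImmediate(() => fn && fn(null, value));
    }
  };
}

// Cache-aside read: on a miss (null), load the value, store it, return it.
function getOrLoad(cache, key, ttl, load, fn) {
  cache.get(key, (err, value) => {
    if (err) return fn(err);
    if (value !== null) return fn(null, value);
    load((loadErr, fresh) => {
      if (loadErr) return fn(loadErr);
      cache.set(key, fresh, ttl, (setErr) => fn(setErr || null, fresh));
    });
  });
}

// Usage: the loader runs only on the first call.
const cache = createMemoryCache();
let loads = 0;
const loadUser = (cb) => {
  loads += 1;
  cb(null, { name: 'beto' });
};

getOrLoad(cache, 'user:1', 60, loadUser, (err, first) => {
  if (err) throw err;
  getOrLoad(cache, 'user:1', 60, loadUser, (err2, second) => {
    if (err2) throw err2;
    console.log(first, second, loads); // { name: 'beto' } { name: 'beto' } 1
  });
});
```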

cache.get('foo', function (err, value) {
  if (err) throw err;
  console.log(value);
});

cache.del(key, [fn])

Deletes a key out of the cache.

cache.del('foo', function (err) {
  if (err) throw err;
  // foo was deleted
});

cache.clear([fn])

Clears the cache entirely, throwing away all values.

cache.clear(function (err) {
  if (err) throw err;
  // cache is now clear
});

Run tests

$ make test

TODO

- Modify the library to use async/await instead of raw promise chains, for better readability

- Review OPTIONS_LIST: most of the options it contains are no longer supported when instantiating a MongoDB client, so the list should be updated to match the values that are actually accepted. For our current purposes we don't need any additional options, so we are covered, but for extensibility and code hygiene we should either update it to match the driver version we support or remove it

- Convert to TypeScript so it is consistent with other mynth repos
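Until the async/await item above is done, callers who prefer async/await can wrap the documented callback API themselves with Node's util.promisify. This is a sketch: promisifyCache and the in-memory createFakeCache stand-in are hypothetical, and the stand-in exists only so the example runs without a MongoDB server. The TODO concerns the library's internals; a wrapper like this only changes the calling side.

```javascript
const { promisify } = require('util');

// Hypothetical in-memory stand-in exposing the documented callback API;
// it exists only so this sketch runs without a MongoDB server.
function createFakeCache() {
  const store = new Map();
  const done = (fn, ...args) => setImmediate(() => fn && fn(null, ...args));
  return {
    get(key, fn) { done(fn, store.has(key) ? store.get(key) : null); },
    set(key, value, ttl, fn) {
      if (typeof ttl === 'function') { fn = ttl; } // TTL is optional
      store.set(key, value);
      done(fn, value);
    },
    del(key, fn) { store.delete(key); done(fn); },
    clear(fn) { store.clear(); done(fn); }
  };
}

// Promise-returning wrappers; bind() keeps `this` pointing at the cache.
function promisifyCache(cache) {
  return {
    get: promisify(cache.get.bind(cache)),
    set: promisify(cache.set.bind(cache)),
    del: promisify(cache.del.bind(cache)),
    clear: promisify(cache.clear.bind(cache))
  };
}

async function main() {
  const cache = promisifyCache(createFakeCache());
  await cache.set('my key', { foo: 'bar' }, 60); // TTL of 60 seconds
  console.log(await cache.get('my key')); // { foo: 'bar' }
  await cache.del('my key');
  console.log(await cache.get('my key')); // null
}

main().catch((err) => {
  console.error(err);
  process.exitCode = 1;
});
```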