mongoosastic-fixbug

v1.0.2

A mongoose plugin that indexes models into Elasticsearch (this is a bug-fix version of mongoosastic)

Mongoosastic

Mongoosastic is a mongoose plugin that can automatically index your models into elasticsearch.

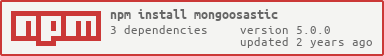

Installation

The latest version of this package will be as close as possible to the latest elasticsearch and mongoose packages.

npm install -S mongoosastic

Setup

Model.plugin(mongoosastic, options)

Options are:

index - the index in Elasticsearch to use. Defaults to the pluralization of the model name.

type - the type this model represents in Elasticsearch. Defaults to the model name.

esClient - an existing Elasticsearch Client instance.

hosts - an array of hosts Elasticsearch is running on.

host - the host Elasticsearch is running on.

port - the port Elasticsearch is running on.

auth - the authentication needed to reach the Elasticsearch server, in the standard format of 'username:password'.

protocol - the protocol the Elasticsearch server uses. Defaults to http.

hydrate - whether or not to look up results in mongodb before returning them.

hydrateOptions - options to pass into the hydrate function.

bulk - size and delay options for bulk indexing.

filter - the function used for filtered indexing.

transform - the function used to transform the serialized document before indexing.

populate - an Array of Mongoose populate options objects.

indexAutomatically - allows indexing after model save to be disabled for when you need finer control over when documents are indexed. Defaults to true.

customProperties - an object detailing additional properties which will be merged onto the type's default mapping when createMapping is called.

saveOnSynchronize - triggers the Mongoose save (and pre-save) method when synchronizing a collection/index. Defaults to true.

To have a model indexed into Elasticsearch simply add the plugin.

var mongoose = require('mongoose')

, mongoosastic = require('mongoosastic')

, Schema = mongoose.Schema

var User = new Schema({

name: String

, email: String

, city: String

})

User.plugin(mongoosastic)

By default, this will use the pluralization of the model name as the index and the model name itself as the type. So if you create a new User object and save it, you can see it by navigating to http://localhost:9200/users/user/_search (this assumes Elasticsearch is running locally on port 9200).
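To make the naming rule concrete, here is a minimal sketch of how the default index and type could be derived from a model name. The helpers defaultIndexAndType and searchUrl are hypothetical names used only for illustration, and the pluralization is deliberately naive (it just appends "s"); it is not the plugin's actual implementation.

```javascript
// Illustration only: hypothetical helpers, not part of mongoosastic.
// Real pluralization handles irregular nouns; this one just appends "s".
function defaultIndexAndType(modelName, options) {
  options = options || {};
  var lower = modelName.toLowerCase();
  return {
    index: options.index || lower + 's', // explicit index option wins
    type: options.type || lower          // explicit type option wins
  };
}

// Build the search URL for a given host and index/type pair.
function searchUrl(host, names) {
  return 'http://' + host + '/' + names.index + '/' + names.type + '/_search';
}

var names = defaultIndexAndType('User');
console.log(searchUrl('localhost:9200', names));
// http://localhost:9200/users/user/_search

var custom = defaultIndexAndType('User', {index: 'people'});
console.log(custom); // { index: 'people', type: 'user' }
```

Passing index or type in the plugin options replaces the corresponding default, as the second call shows.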

By default, all fields get indexed into Elasticsearch. This can be a little wasteful, since the document is then duplicated between mongodb and Elasticsearch, so you should consider indexing only certain fields by specifying es_indexed on the fields you want to store:

var User = new Schema({

name: {type:String, es_indexed:true}

, email: String

, city: String

})

User.plugin(mongoosastic)

In this case only the name field will be indexed for searching.
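The selection rule can be sketched in plain JavaScript. This is an assumption-laden illustration rather than the plugin's code: serializeForIndex is a hypothetical helper, and fields simply mirrors the object passed to new Schema(...).

```javascript
// Illustration only: serializeForIndex is a hypothetical helper.
// `fields` mirrors the definition object passed to new Schema(...).
function serializeForIndex(fields, doc) {
  var names = Object.keys(fields);
  var marked = names.filter(function (name) {
    return fields[name] && fields[name].es_indexed === true;
  });
  // If no field opted in, fall back to indexing every field.
  var selected = marked.length > 0 ? marked : names;
  var out = {};
  selected.forEach(function (name) {
    if (doc[name] !== undefined) out[name] = doc[name];
  });
  return out;
}

var doc = {name: 'John', email: 'john@example.com', city: 'Boston'};

var onlyName = serializeForIndex(
  {name: {type: String, es_indexed: true}, email: String, city: String},
  doc
);
console.log(onlyName); // { name: 'John' }

var everything = serializeForIndex({name: String, email: String, city: String}, doc);
console.log(Object.keys(everything)); // [ 'name', 'email', 'city' ]
```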

Now, by adding the plugin, the model will have a new method called search which can be used to make simple to complex searches. The search method accepts the standard Elasticsearch query DSL:

User.search({

query_string: {

query: "john"

}

}, function(err, results) {

// results here

});

To connect to more than one host, you can use an array of hosts.

MyModel.plugin(mongoosastic, {

hosts: [

'localhost:9200',

'anotherhost:9200'

]

})

Also, you can re-use an existing Elasticsearch Client instance:

var elasticsearch = require('elasticsearch');

var esClient = new elasticsearch.Client({host: 'localhost:9200'});

MyModel.plugin(mongoosastic, {

esClient: esClient

})

Indexing

Saving a document

Indexing takes place after the document is saved in mongodb and is a deferred process. To find out when indexing has finished, listen for the es-indexed event.

doc.save(function(err){

if (err) throw err;

/* Document indexing in progress */

doc.on('es-indexed', function(err, res){

if (err) throw err;

/* Document is indexed */

});

});

Removing a document

Removing a document, or unindexing, takes place when a document is removed by calling .remove() on a mongoose Document instance.

One can check the end of the unindexing by catching the es-removed event.

doc.remove(function(err) {

if (err) throw err;

/* Document unindexing in the background */

doc.on('es-removed', function(err, res) {

if (err) throw err;

/* Document is unindexed */

});

});

Note that Model.remove does not involve mongoose documents, as outlined in the documentation. Therefore, the following will not unindex the document.

MyModel.remove({ _id: doc.id }, function(err) {

/* doc remains in Elasticsearch cluster */

});

Indexing Nested Models

To index nested models, refer to the following example.

var Comment = new Schema({

title: String

, body: String

, author: String

})

var User = new Schema({

name: {type:String, es_indexed:true}

, email: String

, city: String

, comments: {type:[Comment], es_indexed:true}

})

User.plugin(mongoosastic)

Elasticsearch Nested datatype

Since the default in Elasticsearch is to take arrays and flatten them into objects, it can be hard to write queries where you need to maintain the relationships between objects in the array. The way to change this behavior is to change the Elasticsearch type from object (the mongoosastic default) to nested:

var Comment = new Schema({

title: String

, body: String

, author: String

})

var User = new Schema({

name: {type: String, es_indexed: true}

, email: String

, city: String

, comments: {

type:[Comment],

es_indexed: true,

es_type: 'nested',

es_include_in_parent: true

}

})

User.plugin(mongoosastic)

Indexing Mongoose References

To index mongoose references, refer to the following example.

var Comment = new Schema({

title: String

, body: String

, author: String

});

var User = new Schema({

name: {type:String, es_indexed:true}

, email: String

, city: String

, comments: {type: Schema.Types.ObjectId, ref: 'Comment',

es_schema: Comment, es_indexed:true, es_select: 'title body'}

})

User.plugin(mongoosastic, {

populate: [

{path: 'comments', select: 'title body'}

]

})

In the schema you'll need to provide the es_schema field, which holds the referenced schema. By default every field of the referenced schema will be mapped. Use the es_select field to pick just specific fields.

populate is an array of options objects you normally pass to Model.populate.

Indexing An Existing Collection

Already have a mongodb collection that you'd like to index using this plugin? No problem! Simply call the synchronize method on your model to open a mongoose stream and start indexing documents individually.

var BookSchema = new Schema({

title: String

});

BookSchema.plugin(mongoosastic);

var Book = mongoose.model('Book', BookSchema)

, stream = Book.synchronize()

, count = 0;

stream.on('data', function(err, doc){

count++;

});

stream.on('close', function(){

console.log('indexed ' + count + ' documents!');

});

stream.on('error', function(err){

console.log(err);

});

You can also synchronize a subset of documents based on a query:

var stream = Book.synchronize({author: 'Arthur C. Clarke'})

You can also specify synchronization options:

var stream = Book.synchronize({}, {saveOnSynchronize: true})

Options are:

saveOnSynchronize - triggers the Mongoose save (and pre-save) method when synchronizing a collection/index. Defaults to the global saveOnSynchronize option.

Bulk Indexing

You can also specify bulk options in the plugin configuration, which will make mongoosastic use Elasticsearch's bulk indexing API. This will cause the synchronize function to use bulk indexing as well.

Mongoosastic will wait 1 second (or specified delay) until it has 1000 docs (or specified size) and then perform bulk indexing.
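The size-or-delay behavior described above can be sketched as a small buffer. This is a simplified illustration, not mongoosastic's internal code: createBulkBuffer and the flush callback are hypothetical, and flush stands in for whatever would send one bulk request to Elasticsearch.

```javascript
// Illustration only: a hypothetical size-or-delay batching buffer.
// `flush` stands in for the code that would send one bulk request.
function createBulkBuffer(options, flush) {
  var size = options.size || 1000;   // flush once this many docs are queued
  var delay = options.delay || 1000; // or after this many milliseconds
  var queue = [];
  var timer = null;

  function drain() {
    if (timer) {
      clearTimeout(timer);
      timer = null;
    }
    if (queue.length === 0) return;
    var batch = queue;
    queue = [];
    flush(batch);
  }

  return {
    add: function (doc) {
      queue.push(doc);
      if (queue.length >= size) {
        drain();                          // size threshold reached
      } else if (!timer) {
        timer = setTimeout(drain, delay); // start the delay window
      }
    },
    drain: drain
  };
}

var batches = [];
var buffer = createBulkBuffer({size: 3, delay: 100}, function (batch) {
  batches.push(batch);
});

['a', 'b', 'c', 'd'].forEach(function (title) {
  buffer.add({title: title});
});
buffer.drain(); // flush the leftover doc instead of waiting for the timer

console.log(batches.map(function (b) { return b.length; })); // [ 3, 1 ]
```

In mongoosastic itself, both thresholds are set through the bulk plugin option: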

BookSchema.plugin(mongoosastic, {

bulk: {

size: 10, // preferred number of docs to bulk index

delay: 100 //milliseconds to wait for enough docs to meet size constraint

}

});

Filtered Indexing

You can specify a filter function to index a model to Elasticsearch based on some specific conditions.

The filter function must return true for documents that should not be indexed to Elasticsearch.

var MovieSchema = new Schema({

title: {type: String},

genre: {type: String, enum: ['horror', 'action', 'adventure', 'other']}

});

MovieSchema.plugin(mongoosastic, {

filter: function(doc) {

return doc.genre === 'action';

}

});

Instances of the Movie model having 'action' as their genre will not be indexed to Elasticsearch.
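Because the filter returns true for documents to skip (the opposite of Array.prototype.filter), a small sketch may help. The partitionByFilter helper below is hypothetical and only demonstrates the contract on plain objects.

```javascript
// Illustration only: partitionByFilter is a hypothetical helper.
// A truthy result from `filter` means "do not index this document".
function partitionByFilter(docs, filter) {
  var indexed = [];
  var skipped = [];
  docs.forEach(function (doc) {
    (filter(doc) ? skipped : indexed).push(doc);
  });
  return {indexed: indexed, skipped: skipped};
}

var movies = [
  {title: 'Alien', genre: 'horror'},
  {title: 'Die Hard', genre: 'action'},
  {title: 'The Goonies', genre: 'adventure'}
];

var result = partitionByFilter(movies, function (doc) {
  return doc.genre === 'action';
});

console.log(result.indexed.map(function (m) { return m.title; }));
// [ 'Alien', 'The Goonies' ]
console.log(result.skipped.map(function (m) { return m.title; }));
// [ 'Die Hard' ]
```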

Indexing On Demand

You can do on-demand indexes using the index function

Dude.findOne({name:'Jeffrey Lebowski'}, function(err, dude){

dude.awesome = true;

dude.index(function(err, res){

console.log("egads! I've been indexed!");

});

});

The index method takes 2 arguments:

options (optional) - {index, type} - the index and type to publish to. Defaults to the standard index and type that the model was set up with.

callback - callback function to be invoked when the document has been indexed.

Note that indexing a model does not mean it will be persisted to mongodb. Use save for that.

Unindexing on demand

You can remove a document from the Elasticsearch cluster by using the unIndex function.

doc.unIndex(function(err) {

console.log("I've been removed from the cluster :(");

});

The unIndex method takes 2 arguments:

options (optional) - {index, type} - the index and type to publish to. Defaults to the standard index and type that the model was set up with.

callback - callback function to be invoked when the model has been unindexed.

Truncating an index

The static method esTruncate will delete all documents from the associated index. This method combined with synchronize() can be useful in case of integration tests for example when each test case needs a cleaned up index in Elasticsearch.

GarbageModel.esTruncate(function(err){...});

Mapping

Schemas can be configured to have special options per field. These match with the existing field mapping configurations defined by Elasticsearch with the only difference being they are all prefixed by "es_".

For example, if you wanted to index a book model and have the boost for title set to 2.0 (giving it greater priority when searching), you'd define it as follows:

var BookSchema = new Schema({

title: {type:String, es_boost:2.0}

, author: {type:String, es_null_value:"Unknown Author"}

, publicationDate: {type:Date, es_type:'date'}

});

This example uses a few other mapping fields... such as null_value and type (which overrides whatever value the schema type is, useful if you want stronger typing such as float).
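As a rough sketch of the "es_" convention, the snippet below strips the prefix from a field's schema options to produce Elasticsearch mapping properties. It is an illustration under assumptions: fieldMapping is a hypothetical helper, the list of plugin-only keys is taken from the options mentioned in this README, and type inference from the schema type is left out entirely.

```javascript
// Illustration only: fieldMapping is a hypothetical helper.
// Keys that configure the plugin rather than the Elasticsearch mapping
// (an assumption based on the options described in this README).
var PLUGIN_ONLY = ['es_indexed', 'es_cast', 'es_schema', 'es_select'];

function fieldMapping(fieldOptions) {
  var mapping = {};
  Object.keys(fieldOptions).forEach(function (key) {
    if (key.indexOf('es_') === 0 && PLUGIN_ONLY.indexOf(key) === -1) {
      mapping[key.slice(3)] = fieldOptions[key]; // es_boost -> boost
    }
  });
  return mapping;
}

var titleMapping = fieldMapping({type: String, es_boost: 2.0});
var authorMapping = fieldMapping({type: String, es_null_value: 'Unknown Author'});
var dateMapping = fieldMapping({type: Date, es_type: 'date', es_indexed: true});

console.log(titleMapping);  // { boost: 2 }
console.log(authorMapping); // { null_value: 'Unknown Author' }
console.log(dateMapping);   // { type: 'date' }
```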

There are various mapping options that can be defined in Elasticsearch. Check out http://www.elasticsearch.org/guide/reference/mapping/ for more information. Here are examples of the currently possible definitions in mongoosastic:

var ExampleSchema = new Schema({

// String (core type)

string: {type:String, es_boost:2.0},

// Number (core type)

number: {type:Number, es_type:'integer'},

// Date (core type)

date: {type:Date, es_type:'date'},

// Array type

array: {type:Array, es_type:'string'},

// Object type

object: {

field1: {type: String},

field2: {type: String}

},

// Nested type

nested: [SubSchema],

// Multi field type

multi_field: {

type: String,

es_type: 'multi_field',

es_fields: {

multi_field: { type: 'string', index: 'analyzed' },

untouched: { type: 'string', index: 'not_analyzed' }

}

},

// Geo point type

geo: {

type: String,

es_type: 'geo_point'

},

// Geo point type with lat_lon fields

geo_with_lat_lon: {

geo_point: {

type: String,

es_type: 'geo_point',

es_lat_lon: true

},

lat: { type: Number },

lon: { type: Number }

},

geo_shape: {

coordinates : [],

type: {type: String},

geo_shape: {

type:String,

es_type: "geo_shape",

es_tree: "quadtree",

es_precision: "1km"

}

},

// Special feature : specify a cast method to pre-process the field before indexing it

someFieldToCast : {

type: String,

es_cast: function(value){

return value + ' something added';

}

}

});

// Used as the nested schema above; in real code, define it before ExampleSchema.

var SubSchema = new Schema({

field1: {type: String},

field2: {type: String}

});

Geo mapping

Prior to indexing any geo mapped data (or calling synchronize), the mapping must be manually created with createMapping (see Creating Mappings On Demand below).

Note that the name of the field containing the ES geo data must start with 'geo_' to be recognized as such.

Indexing a geo point

var geo = new GeoModel({

/* … */

geo_with_lat_lon: { lat: 1, lon: 2}

/* … */

});

Indexing a geo shape

var geo = new GeoModel({

/* … */

geo_shape: {

type: 'envelope',

coordinates: [[3,4],[1,2]] /* Arrays of coord : [[lon,lat],[lon,lat]] */

}

/* … */

});

A mapping, indexing and searching example for geo shapes can be found in test/geo-test.js

For example, one can retrieve the list of documents whose shape contains a specific point (or polygon...):

var geoQuery = {

"match_all": {}

}

var geoFilter = {

geo_shape: {

geo_shape: {

shape: {

type: "point",

coordinates: [3,1]

}

}

}

}

GeoModel.search(geoQuery, {filter: geoFilter}, function(err, res) { /* ... */ })

Creating Mappings On Demand

Creating the mapping is a one time operation and can be done as follows (using the BookSchema as an example):

var BookSchema = new Schema({

title: {type:String, es_boost:2.0}

, author: {type:String, es_null_value:"Unknown Author"}

, publicationDate: {type:Date, es_type:'date'}

});

BookSchema.plugin(mongoosastic);

var Book = mongoose.model('Book', BookSchema);

Book.createMapping({

"analysis" : {

"analyzer":{

"content":{

"type":"custom",

"tokenizer":"whitespace"

}

}

}

},function(err, mapping){

// do neat things here

});

This feature is still a work in progress. As of this writing you'll have to manage whether or not you need to create the mapping; mongoosastic will make no assumptions and simply attempt to create the mapping. If the mapping already exists, an Exception detailing such will be populated in the err argument.

Queries

The full query DSL of Elasticsearch is exposed through the search method. For example, if you wanted to find all people between ages 21 and 30:

Person.search({

range: {

age:{

from:21

, to: 30

}

}

}, function(err, people){

// all the people who fit the age group are here!

});

See the Elasticsearch Query DSL docs for more information.

You can also specify query options such as sorts:

Person.search({/* ... */}, {sort: "age:asc"}, function(err, people){

//sorted results

});

And also aggregations:

Person.search({/* ... */}, {

aggs: {

'names': {

'terms': {

'field': 'name'

}

}

}

}, function(err, results){

// results.aggregations holds the aggregations

});

Options for queries must adhere to the javascript elasticsearch driver specs.

Raw queries

A full Elasticsearch query object can be provided to mongoosastic through the .esSearch() method. This can be useful when paging results. The raw query wraps the query object provided to the .search() method, and .esSearch() accepts the same options:

var rawQuery = {

from: 60,

size: 20,

query: /* query object as in .search() */

};

Model.esSearch(rawQuery, options, cb);

For example:

Person.esSearch({

from: 60,

size: 20,

query: {

range: {

age:{

from:21,

to: 30

}

}

}

}, function(err, people){

// only the 61st to 80th ranked people who fit the age group are here!

});

Hydration

By default objects returned from performing a search will be the objects as is in Elasticsearch. This is useful in cases where only what was indexed needs to be displayed (think a list of results) while the actual mongoose object contains the full data when viewing one of the results.

However, if you want the results to be actual mongoose objects you can provide {hydrate:true} as the second argument to a search call.

User.search(

{query_string: {query: 'john'}},

{hydrate: true},

function(err, results) {

// results here

});

You can also pass in a hydrateOptions object with information on how to query for the mongoose object.

User.search(

{query_string: {query: 'john'}},

{

hydrate: true,

hydrateOptions: {select: 'name age'}

},

function(err, results) {

// results here

});

Original Elasticsearch result data can be kept with the hydrateWithESResults option. Documents are then enhanced with an _esResult property:

User.search(

{query_string: {query: 'john'}},

{

hydrate: true,

hydrateWithESResults: true,

hydrateOptions: {select: 'name age'}

},

function(err, results) {

// results here

results.hits.hits.forEach(function(result) {

console.log(

'score',

result._id,

result._esResult._score

);

});

});

By default the _esResult._source document is skipped. It can be added with the option hydrateWithESResults: {source: true}.

Note that using hydrate will be somewhat slower, as it performs an Elasticsearch query and then a query against mongodb for all the ids returned from the search result.

You can also default this to always be the case by providing it as a plugin option (as well as setting default hydrate options):

var User = new Schema({

name: {type:String, es_indexed:true}

, email: String

, city: String

})

User.plugin(mongoosastic, {hydrate:true, hydrateOptions: {lean: true}})
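The two-step lookup behind hydration (search first, then fetch the matching documents by id) can be sketched with an in-memory stand-in for mongodb. Everything here is hypothetical and simplified: hydrateHits and findByIds are illustrative names, and dropping hits that are missing from the database is a choice made for this sketch, not a documented behavior of the plugin.

```javascript
// Illustration only: hydrateHits and findByIds are hypothetical names.
// `hits` plays the role of Elasticsearch results; `findByIds` plays mongodb.
function hydrateHits(hits, findByIds, keepEsResult) {
  var ids = hits.map(function (hit) { return hit._id; });
  var byId = {};
  findByIds(ids).forEach(function (doc) { byId[doc._id] = doc; });

  return hits
    .filter(function (hit) { return byId[hit._id]; }) // sketch choice: drop missing docs
    .map(function (hit) {
      var doc = Object.assign({}, byId[hit._id]);     // keep search ranking order
      if (keepEsResult) doc._esResult = {_id: hit._id, _score: hit._score};
      return doc;
    });
}

var db = [
  {_id: '1', name: 'John Smith', age: 30},
  {_id: '2', name: 'John Doe', age: 25}
];

function findByIds(ids) {
  return db.filter(function (doc) { return ids.indexOf(doc._id) !== -1; });
}

var hits = [
  {_id: '2', _score: 1.7},
  {_id: '3', _score: 1.2}, // indexed, but no longer in the database
  {_id: '1', _score: 0.9}
];

var hydrated = hydrateHits(hits, findByIds, true);
console.log(hydrated.map(function (d) { return d.name + ' ' + d._esResult._score; }));
// [ 'John Doe 1.7', 'John Smith 0.9' ]
```

The extra database round trip in the middle of this function is the reason hydrated searches are slower than plain ones.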