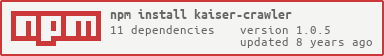

kaiser-crawler

v1.0.5

Node.js module for crawling the web

Kaiser - Node.js web crawler

Basic usage

var Crawler = require('kaiser').Crawler;

new Crawler({
  uri: 'http://www.google.com'
}).start();

Crawler Pipeline

The crawler pipeline is a sequence of steps that kaiser takes when crawling. This overview will provide a high-level description of the steps in the pipeline.

Compose

This step takes the URIs to be crawled and maps each one to a Resource object, which is used throughout the pipeline to store data about the crawled resource.

Fetch

This step takes the Resource objects produced in the previous step and enriches them with content and encoding by fetching them over HTTP.

Discover

This step takes the enriched Resource objects from the previous step and finds more links to be crawled.

Transform

This step takes the Resource objects from the previous step and applies transformations to their content.

Cache

This step stores each Resource using a caching mechanism, such as memory or the filesystem.
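To make the order of the steps concrete, here is a minimal sketch of a five-step pipeline built from plain callback functions. It is not kaiser's implementation: the step functions, the shape of the Resource-like object (`uri`, `content`, `encoding`, `links`), and the canned HTML are all assumptions made for illustration.

```javascript
// Hypothetical stand-ins for the five pipeline steps; none of this is kaiser's code.
function composeStep(uri, callback) {
  // Map a URI to a Resource-like object.
  callback(null, { uri: uri, content: null, encoding: null });
}

function fetchStep(resource, callback) {
  // A real fetcher would issue an HTTP request; this stub uses canned content.
  resource.content = '<a href="http://example.com/about.html">About</a>';
  resource.encoding = 'utf8';
  callback(null, resource);
}

function discoverStep(resource, callback) {
  // Collect href values so they could be fed back into the compose step.
  resource.links = [];
  var pattern = /href="([^"]+)"/g;
  var match;
  while ((match = pattern.exec(resource.content)) !== null) {
    resource.links.push(match[1]);
  }
  callback(null, resource);
}

function transformStep(resource, callback) {
  // Example transformation: make the first absolute link relative.
  resource.content = resource.content.replace('http://example.com/', '');
  callback(null, resource);
}

var store = {};
function cacheStep(resource, callback) {
  store[resource.uri] = resource;
  callback(null, resource);
}

// Run the steps in order, stopping at the first error.
function runPipeline(uri, done) {
  var steps = [fetchStep, discoverStep, transformStep, cacheStep];
  composeStep(uri, function next(err, resource) {
    if (err || steps.length === 0) {
      return done(err, resource);
    }
    steps.shift()(resource, next);
  });
}

runPipeline('http://example.com/', function (err, resource) {
  console.log(resource.links, resource.content);
});
```

Each step receives the Resource from the step before it and hands it on through the callback, which is the same error-first contract the custom component example later in this README uses.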

Basic Pipeline Components

The crawler ships with a basic component for each step of the pipeline. Each component has its own goal and strategy. This overview covers the basic components whose goal or strategy you might have a reason to change.

Basic Discoverer

Goal: Find more links to be crawled.

Strategy: Links are found using regular expressions that match any URL:

- in a src or href attribute

- in the value of a CSS url property (such as in a background property)

- that appears as a valid URL anywhere in the document
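As a rough illustration of regex-based discovery, the sketch below covers the first two cases: src/href values and CSS url values. The patterns and the `discoverLinks` helper are assumptions written for this example; kaiser's actual regular expressions may differ, and the third case (bare URLs anywhere in the document) is left out.

```javascript
// Illustrative patterns only; kaiser's actual regular expressions may differ.
var ATTRIBUTE_LINK = /(?:src|href)\s*=\s*["']([^"']+)["']/gi;
var CSS_URL = /url\(\s*["']?([^"')]+)["']?\s*\)/gi;

// Return the unique links found in a document, in order of discovery.
function discoverLinks(content) {
  var links = [];
  [ATTRIBUTE_LINK, CSS_URL].forEach(function (pattern) {
    pattern.lastIndex = 0; // global regexes keep state between calls
    var match;
    while ((match = pattern.exec(content)) !== null) {
      if (links.indexOf(match[1]) === -1) {
        links.push(match[1]);
      }
    }
  });
  return links;
}

var html = '<img src="/logo.png">' +
  '<a href=\'http://example.com/a.html\'>a</a>' +
  '<div style="background: url(img/bg.gif)"></div>';

console.log(discoverLinks(html));
```

Regular expressions are fast and work on CSS and JavaScript as well as HTML, but they can also match links inside comments or strings, which a real parser would skip.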

Basic Transformer

Goal: Transform the content of a Resource so that every link found becomes a relative path to where the linked resource would be if it were stored on the filesystem.

Strategy: Relative or absolute paths for links are calculated depending on whether the linked resource was fetched. Links are found using regular expressions that match any URL:

- in a src or href attribute

- in the value of a CSS url property (such as in a background property)

- that appears as a valid URL anywhere in the document
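The link rewriting can be sketched as follows. This is an interpretation, not kaiser's code: the `hostname/pathname` on-disk layout, the `index.html` fallback, the helper names, and the rule that links to unfetched resources stay absolute are all assumptions.

```javascript
var path = require('path');

// Map an absolute URL to the path it would have on disk: <hostname>/<pathname>.
// This layout is an assumption made for the sketch.
function toLocalPath(uri) {
  var parsed = new URL(uri);
  var pathname = parsed.pathname;
  if (pathname.slice(-1) === '/') {
    pathname += 'index.html'; // directory-style URLs need a file name
  }
  return path.posix.join(parsed.hostname, pathname);
}

// Rewrite a link found in `resourceUri` so it points at the stored copy of its
// target. Links to resources that were not fetched are kept absolute (assumed).
function rewriteLink(resourceUri, link, wasFetched) {
  var target = new URL(link, resourceUri).href; // resolve relative links first
  if (!wasFetched) {
    return target;
  }
  var fromDir = path.posix.dirname(toLocalPath(resourceUri));
  return path.posix.relative(fromDir, toLocalPath(target));
}

console.log(rewriteLink('http://example.com/blog/post.html',
  'http://example.com/css/site.css', true));
```

Computing the path relative to the directory of the referring file keeps the stored copy browsable from any location on disk.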

Memory Cache

Goal: Store Resource objects in memory.

Strategy: Resource objects are stored in a dictionary keyed by their unique URL, for easy retrieval later.
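A dictionary keyed by URL can be sketched in a few lines. `MemoryStore`, its method names, and the `uri` property on the resource are hypothetical; they only illustrate the strategy and are not kaiser's MemoryCache API.

```javascript
// A stand-in for a memory cache: a dictionary keyed by resource URL.
function MemoryStore() {
  this.resources = Object.create(null); // no inherited keys such as "constructor"
}

// Store (or overwrite) a resource under its URL.
MemoryStore.prototype.save = function (resource) {
  this.resources[resource.uri] = resource;
};

// Look a resource up by URL; returns null when it was never stored.
MemoryStore.prototype.retrieve = function (uri) {
  return this.resources[uri] || null;
};

var memory = new MemoryStore();
memory.save({ uri: 'http://example.com/', content: '<html></html>' });
console.log(memory.retrieve('http://example.com/').content);
```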

Fs Cache

Goal: Store Resource objects in the filesystem.

Strategy: Resource objects are stored in the correct hierarchy in the filesystem.
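One way to picture "the correct hierarchy" is a directory per host with the URL path below it. The sketch below assumes exactly that layout; the helper names, the `index.html` fallback, and the `uri`/`content` properties are assumptions rather than FsCache's real behavior.

```javascript
var fs = require('fs');
var path = require('path');

// Assumed layout for this sketch: <rootDir>/<hostname>/<pathname>.
function toFilePath(rootDir, uri) {
  var parsed = new URL(uri);
  var pathname = parsed.pathname;
  if (pathname.slice(-1) === '/') {
    pathname += 'index.html'; // directory-style URLs need a file name
  }
  return path.join(rootDir, parsed.hostname, pathname);
}

// Write a resource's content to its place in the hierarchy and return the path.
function storeResource(rootDir, resource) {
  var filePath = toFilePath(rootDir, resource.uri);
  fs.mkdirSync(path.dirname(filePath), { recursive: true }); // Node.js 10.12+
  fs.writeFileSync(filePath, resource.content);
  return filePath;
}
```

For example, `storeResource('./site', { uri: 'http://example.com/css/site.css', content: 'body{}' })` would write `./site/example.com/css/site.css`.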

Plugin Custom Components

The crawler is built around the idea of a pipeline of components, so custom-made components can be used in place of the basic ones to achieve different goals.

Create a custom component

Each component is a ResourceWorker, meaning it processes a Resource object in some way.

To create a custom component, create a class that extends one of the component classes.

var util = require('util');
// Transformer (kaiser's base transformer class) must already be in scope here.

function TextInserterTransformer(crawler, options) {
  var self = this;
  TextInserterTransformer.init.call(self, crawler, options);
}

// Transformer already extends ResourceWorker
util.inherits(TextInserterTransformer, Transformer);

TextInserterTransformer.init = function(crawler, options) {
  var self = this;
  Transformer.init.call(self, crawler);
  self.text = options.text;
};

// We must implement the logic function. It is called in each step
// and is the core of our component.
TextInserterTransformer.prototype.logic = function(resource, callback) {
  var self = this;
  if (self.canTransform(resource)) {
    // do logic
    resource.content = self.text + resource.content;
  }
  // logic needs to call the callback with an error (or null) and the processed Resource
  // to let the pipeline continue to the next step
  callback(null, resource);
};

TextInserterTransformer.prototype.canTransform = function(resource) {
  return true;
};

// Export the class so it can be required where the crawler is created
module.exports = TextInserterTransformer;

Classes to extend when creating custom components:

- Composer

- Fetcher

- Discoverer

- Transformer

- Cache

Usage of the created component

To inject the custom component, require it and pass it in the options given to the crawler.

var Crawler = require('kaiser').Crawler;
// Local modules need a relative path
var TextInserterTransformer = require('./TextInserterTransformer');

// Option A - the crawler takes care of injecting itself into the component
new Crawler({
  uri: 'http://www.google.com',
  transformer: new TextInserterTransformer(null, {
    text: 'Hello world'
  })
}).start();

// Option B - we take care of injecting the crawler into the constructor of the component
var crawler = new Crawler({
  uri: 'http://www.google.com'
});

var transformer = new TextInserterTransformer(crawler, {
  text: 'Hello world'
});

crawler.transformer = transformer;
crawler.start();

Events

- crawlstart() - fires when the crawler starts.

- crawlcomplete() - fires when the crawler finishes.

- crawlbulkstart(uris, originator) - fires when a bulk of URIs starts the crawling pipeline. uris are the URIs that are about to start the crawling pipeline, originator is the Resource the URIs were found in.

- crawlbulkcomplete(resources, originator) - fires when a bulk of URIs has finished the crawling pipeline. resources are the Resource objects that made it successfully through the bulk pipeline, originator is the Resource the resources were found in.

- composestart(originator, uris) - fires when the compose component starts. uris are the URIs that are about to be composed into Resource objects, originator is the Resource object those URIs were discovered in.

- composecomplete(resources) - fires when the compose component finishes. resources are the Resource objects that were composed in the process.

- fetchstart(resource) - fires when the fetch component starts. resource is the Resource object that is about to be fetched.

- fetchcomplete(resource) - fires when the fetch component finishes. resource is the Resource object that was fetched in the process.

- fetcherror(resource, error) - fires when the basic fetch component can't fetch a resource. resource is the Resource object that can't be fetched, error is the error object (error specific to the implementation of the component logic).

- discoverstart(resource) - fires when the discover component starts. resource is the Resource object that is about to be searched for more URIs.

- discovercomplete(resource, uris) - fires when the discover component finishes. resource is the Resource object that was searched for more URIs in the process, uris are the found URIs.

- discovererror(resource, uri, error) - fires when the basic discover component can't format a found link. resource is the Resource object that the uri was found in, error is the error object (error specific to the implementation of the component logic).

- transformstart(resource) - fires when the transform component starts. resource is the Resource object that is about to be transformed.

- transformcomplete(resource) - fires when the transform component finishes. resource is the Resource object that was transformed in the process.

- transformerror(resource, uri, error) - fires when the basic transform component can't replace a found link. resource is the Resource object that the uri was found in, error is the error object (error specific to the implementation of the component logic).

- storestart(resource) - fires when the cache component starts. resource is the Resource object that is about to be cached.

- storecomplete(resource) - fires when the cache component finishes. resource is the Resource object that was cached in the process.

- storeerror(resource, error) - fires when the fs cache component can't store a resource in the filesystem. resource is the Resource object that can't be saved, error is the error object (error specific to the implementation of the component logic).
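The handler signatures above can be tried out without running a crawl. The sketch below uses a plain Node.js EventEmitter as a stand-in for the crawler; whether a kaiser Crawler exposes `on` in the same way is an assumption, as is the `uri` property on the resource.

```javascript
var EventEmitter = require('events').EventEmitter;

// Stand-in for a Crawler instance. That kaiser's Crawler exposes `on` like this
// is an assumption; swap in a real crawler if it does.
var crawler = new EventEmitter();

var fetched = [];
var failed = [];

crawler.on('fetchcomplete', function (resource) {
  fetched.push(resource.uri);
});

crawler.on('fetcherror', function (resource, error) {
  failed.push({ uri: resource.uri, message: error.message });
});

crawler.on('crawlcomplete', function () {
  console.log('fetched %d, failed %d', fetched.length, failed.length);
});

// Simulate what a crawl might emit, so the sketch runs on its own.
crawler.emit('fetchcomplete', { uri: 'http://example.com/' });
crawler.emit('fetcherror', { uri: 'http://example.com/missing' }, new Error('ETIMEDOUT'));
crawler.emit('crawlcomplete');
```

Collecting failures in a `fetcherror` handler is a simple way to spot the timeout errors described under Pitfalls.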

Options

- uri - The URI to start crawling from.

- followRobotsTxt - If true, follows robots.txt rules. Defaults to false.

- maxDepth - The maximum depth at which links are still crawled for resources on the same domain as the original resource. Defaults to 1.

- maxExternalDepth - The maximum depth at which links are still crawled for resources on a different domain than the original resource. Defaults to 0.

- maxFileSize - The maximum file size that is allowed to be crawled. Defaults to 1024 * 1024 * 16.

- maxLinkNumber - The maximum number of links to crawl before stopping. Defaults to Number.POSITIVE_INFINITY.

- siteSizeLimit - The maximum total number of bytes to be crawled. This limit can be exceeded, though not by a large margin, because the check is applied only before fetching a new resource and not after. For example, if 10 bytes are allowed, 9 bytes have been fetched so far, and the next resource is 10 bytes, the crawler fetches a total of 19 bytes and then stops. Defaults to maxFileSize * maxLinkNumber.

- maxTimeOverall - The maximum time to crawl. Do not depend on this restriction too much: resources already inside the crawler pipeline continue to be processed, but new links found in the discover step are not. Defaults to Number.POSITIVE_INFINITY.

- allowedProtocols - Array of allowed protocols to be fetched. Defaults to:
  - http
  - https

- allowedFileTypes - Array of allowed file types to be fetched. Defaults to:
  - html
  - htm
  - css
  - js
  - xml
  - gif
  - jpg
  - jpeg
  - png
  - tif
  - bmp
  - eot
  - svg
  - ttf
  - woff
  - txt
  - htc
  - php
  - asp
  - (blank) for files without extensions

- allowedMimeTypes - Regex array of allowed mime types to be fetched. Defaults to:
  - ^text/.+$
  - ^application/(?:x-)?javascript$
  - ^application/(?:rss|xhtml)(?:\+xml)?
  - /xml$
  - ^image/.+$
  - application/octet-stream

- disallowedHostnames - Array of blacklisted hostnames that must not be crawled. Defaults to an empty array.

- allowedLinks - Regex array of allowed links to be crawled. Defaults to:
  - .*

- composer - The composer component to be used. Defaults to BasicComposer.

- fetcher - The fetcher component to be used. Defaults to BasicFetcher. The following are Request options, because Request is used in the basic fetcher:
  - maxAttempts - The number of attempts to fetch a resource that could not be fetched.
  - retryDelay - The delay in milliseconds between each retry.
  - maxConcurrentRequests - The maximum number of concurrent requests.
  - proxy - Proxy the requests through this URI.
  - auth - Authentication, if needed.
  - acceptCookies - If true, use cookies. Defaults to true.
  - userAgent - User agent string. Defaults to Node/kaiser <version> (https://github.com/Kashio/kaiser.git)
  - maxSockets - The maximum number of sockets to be used by the underlying http agent.
  - timeout - The timeout in milliseconds to wait for a server to send response headers before aborting requests.
  - strictSSL - If true, requires SSL certificates to be valid. Defaults to true.

- discoverer - The discoverer component to be used. Defaults to BasicDiscoverer.

- transformer - The transformer component to be used. Defaults to BasicTransformer.
  - rewriteLinksFileTypes - Array of file types; links are rewritten only in resources of these types. Defaults to:
    - html
    - htm
    - css
    - js
    - php
    - asp
    - txt
    - (blank) for files without extensions

- cache - The cache component to be used. Defaults to FsCache.
  - rootDir - Path to the directory in which to save the resources if FsCache is used as the cache component.
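The siteSizeLimit overshoot is easier to see as arithmetic. The following sketch simulates a size check that runs only before each fetch; the function name and the exact comparison are assumptions, not kaiser's code.

```javascript
// Simulates a size check that is applied before each fetch but not after it.
// `sizes` are the byte sizes of resources in the order they would be fetched.
function totalBytesFetched(sizes, siteSizeLimit) {
  var total = 0;
  for (var i = 0; i < sizes.length; i++) {
    if (total >= siteSizeLimit) {
      break; // the limit was reached by an earlier fetch, so stop here
    }
    total += sizes[i]; // the size only counts once the resource is fetched
  }
  return total;
}

console.log(totalBytesFetched([9, 10, 5], 10)); // 19
```

With a limit of 10 bytes, the 9-byte resource passes the check, the 10-byte resource is fetched because only 9 bytes were counted so far, and the third resource is skipped. In the worst case the overshoot is bounded by the size of a single resource, which maxFileSize caps.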

Note that the options passed to the components can also be passed directly to the crawler, which injects them into the components. These options are valid only for the basic components supplied with kaiser.
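To check which content types the default allowedMimeTypes patterns let through, they can be tested in isolation. This sketch writes them as RegExp literals; whether kaiser expects RegExp objects or strings is not stated here, and the `\+` escape in the third pattern is an assumption, since an unescaped `+` directly after `(?:` is not a valid regular expression. `isAllowedMimeType` is a hypothetical helper.

```javascript
// The default allowedMimeTypes patterns, written as RegExp literals.
var allowedMimeTypes = [
  /^text\/.+$/,
  /^application\/(?:x-)?javascript$/,
  /^application\/(?:rss|xhtml)(?:\+xml)?/, // `\+` is assumed, see the note above
  /\/xml$/,
  /^image\/.+$/,
  /application\/octet-stream/
];

// A mime type is allowed when at least one pattern matches it.
function isAllowedMimeType(mimeType) {
  return allowedMimeTypes.some(function (pattern) {
    return pattern.test(mimeType);
  });
}

['text/html', 'application/rss+xml', 'application/pdf'].forEach(function (type) {
  console.log(type, isAllowedMimeType(type));
});
```

To allow another type, such as PDF, add a matching pattern like `/^application\/pdf$/` to the array.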

Pitfalls

ETIMEDOUT and ESOCKETTIMEDOUT errors

This might be due to the server blocking requests because too many came from your IP in a short amount of time.

A plausible solution is to lower the maxConcurrentRequests option of the basic fetcher.

Another cause might be the low number of worker threads Node.js has for resolving DNS queries.

You can try increasing it by setting the thread pool size before the pool is first used, for example at the top of your entry script:

process.env.UV_THREADPOOL_SIZE = 128;

Application is slowed down by the crawler

Because the crawler lets you plug in components as you wish, some of them might slow the application down with their logic and might block the event loop. Even the supplied components are somewhat CPU intensive, so it is recommended to launch the crawler in a new process.

// crawler_process.js
var Crawler = require('kaiser').Crawler;

new Crawler({
  uri: 'http://www.google.com'
}).start();

// app.js
var fork = require('child_process').fork;

fork('./crawler_process');
...

Tests

Run tests with

$ npm run test

License

kaiser is licensed under the GPL V3 License.