frontend-ij--web-app

v1.660.2


InfoJobs Web App Monorepo

This project is a monorepo including the React Web App for Infojobs.net and Infojobs.it, plus some shared dependencies.


Getting started

A few things to take into consideration before running the project and working on a package.

After cloning the repo you'll have to:

Install global and local dependencies

Verify that you are using the minimum required versions of Node and NPM, or download them from the following link:

❕ Installing Node v18 automatically installs the NPM 9 minor version bundled with it

❕ You can verify your current default versions by running the following commands in your terminal:

node -v

# 18.17.0

npm -v

# 9.6.7

Log in to the internal NPM registry

To install the internal dependencies we develop at Adevinta, ask your point of contact for the NPM registry credentials, then open a terminal tab and log in to our internal registry:

npm login

# Username:

# Password:

# Email: (this IS public):

Enter the project

Enter the root folder of the project in a new terminal window and install the npm dependencies:

cd frontend-ij--web-app

Install dependencies

First of all, make sure npm is configured to generate package-lock.json on install:

npm config set package-lock true

Once this is set, install the project dependencies:

npm run deps:install

There are also several scripts you can run to manage dependencies:

- npm run deps:install: installs the dependencies. It may update packages to newer patch versions.

- npm run deps:updatelock: updates package-lock.json with the latest versions of the package.json dependencies. It does not install dependencies.

- npm run deps:fixlock: fixes package-lock.json problems; useful after merges. It does not install dependencies.

- npm run deps:ci: installs the dependencies recorded in package-lock.json.

package-lock in depth

package-lock.json is automatically generated for any operations where npm modifies either the node_modules tree, or package.json. It describes the exact tree that was generated, such that subsequent installs are able to generate identical trees, regardless of intermediate dependency updates.

This file is intended to be committed into source repositories, and serves various purposes:

Describe a single representation of a dependency tree such that teammates, deployments, and continuous integration are guaranteed to install exactly the same dependencies.

Provide a facility for users to "time-travel" to previous states of node_modules without having to commit the directory itself.

Facilitate greater visibility of tree changes through readable source control diffs.

Optimize the installation process by allowing npm to skip repeated metadata resolutions for previously-installed packages.

As of npm v7, lockfiles include enough information to gain a complete picture of the package tree, reducing the need to read package.json files, and allowing for significant performance improvements.

package-lock.json must be committed and treated as a first-class citizen. This includes committing it, resolving its merge conflicts, and handling it with caution.

Packages

The project includes several packages; each one represents a dependency that can work in isolation and is published to the internal npm registry.

Components

This package uses the SUI Studio playground, which allows us to create new components for the IJ studio namespace and publish them automatically to our internal registry. For more information about how SUI Studio works, see its documentation.

Create a new component

To create a new component, use the studio:generate NPM script. It generates a component following the same process described in the SUI Studio documentation.

npm run studio:generate <componentFolder> <componentName>

# e.g.

# npm run studio:generate layout candidate

Launch component playground

To start working on a component and see how it changes in the playground, use the studio:dev NPM script, specifying the component you want to work on. It automatically runs the playground, which you can access at http://localhost:3000.

npm run studio:dev <componentFolder>/<componentName>

# e.g.

# npm run studio:dev layout/candidate

Run component tests

While working on the playground you will already see the test suite for the component.

To check whether all the component tests are still passing, run them all with the test:studio NPM script. It immediately runs all the tests in the terminal.

npm run test:studio

❕ To watch for file changes while running the tests, use the test:studio:watch NPM script:

npm run test:studio:watch

Domain

This package contains the Web App and Widgets business logic, organized following the clean architecture principles adapted for our frontend needs.

You can read more about it in the Adevinta Spain - Frontend Convergence document, in the Domain section (you need to log in with your Okta credentials to see this page).

Create use cases

After reading how we apply clean architecture client-side at Adevinta in the Frontend Convergence document, you should be ready to create your first use case.
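To make the layering concrete, here is a minimal sketch of a use case in this style. It assumes a hypothetical Language entity and LanguageRepository port; all names are illustrative and are not the files the generate script actually produces. The use case depends only on an interface, so an HTTP adapter can be swapped for an in-memory one in tests.

```typescript
// Hypothetical entity: the real domain package may model languages differently.
interface Language {
  id: string;
  name: string;
}

// Port (hypothetical): the use case depends on this interface, not on a concrete client.
interface LanguageRepository {
  getLanguages(): Promise<Language[]>;
}

// Use case: holds the business rule (here, sorting languages by name) and nothing else.
class GetLanguagesUseCase {
  private readonly repository: LanguageRepository;

  constructor(repository: LanguageRepository) {
    this.repository = repository;
  }

  async execute(): Promise<Language[]> {
    const languages = await this.repository.getLanguages();
    // Copy before sorting so the repository's data is not mutated.
    return [...languages].sort((a, b) => a.name.localeCompare(b.name));
  }
}

// Adapter: an in-memory repository, handy for unit tests or mock data.
class InMemoryLanguageRepository implements LanguageRepository {
  private readonly data: Language[];

  constructor(data: Language[]) {
    this.data = data;
  }

  async getLanguages(): Promise<Language[]> {
    return this.data;
  }
}
```

A repository backed by a real endpoint would implement the same LanguageRepository interface, leaving GetLanguagesUseCase untouched.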

Since there is a lot of boilerplate, the domain package folder provides a generate script that automatically creates most of the files you'll need. If you're curious about how it works, check the domain/scripts/generate.js file.

cd ./domain

npm run generate language -N -E Language

Run domain tests

Inside the domain folder you'll be able to use all the scripts defined in the package.json file.

To run the test suites, use the test script to launch the test runner in your terminal:

cd ./domain

npm test

Literals

The literals package folder contains the Web App translations.

You can add a new translation by creating a new KEY (fully upper-case) and associating it with the text you'll later use inside the app.

To be deployed correctly with a new release, the literals must pass some tests, so always respect the following constraints when adding or editing a literal:

- It must be inserted in alphabetical order: don't append the key to the bottom of the file; find its correct spot among the other literals.

- It must be present in both the es and it files. If you don't have a translation, add it as an empty string.
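The constraints above can be checked mechanically. This is a minimal sketch of such a check, assuming each language file can be loaded as a flat object mapping KEY to text. The file format, the validateLiterals name, and the plain code-unit ordering are assumptions, not the actual literals tests.

```typescript
// Hypothetical shape: one flat KEY -> text map per language file (es, it).
type Literals = Record<string, string>;

const hasKey = (literals: Literals, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(literals, key);

// Returns a list of human-readable problems; an empty list means the literals look valid.
function validateLiterals(es: Literals, it: Literals): string[] {
  const errors: string[] = [];
  const files: Array<[string, Literals]> = [["es", es], ["it", it]];

  for (const [lang, literals] of files) {
    const keys = Object.keys(literals); // insertion order, i.e. the order in the file
    keys.forEach((key, i) => {
      if (key !== key.toUpperCase()) errors.push(`${lang}: ${key} is not upper-case`);
      // Plain code-unit comparison; the real test may use a different collation.
      if (i > 0 && keys[i - 1] > key) errors.push(`${lang}: ${key} is out of alphabetical order`);
    });
  }

  // Every key must exist in both files; an empty string still counts as present.
  for (const key of Object.keys(es)) if (!hasKey(it, key)) errors.push(`it: missing ${key}`);
  for (const key of Object.keys(it)) if (!hasKey(es, key)) errors.push(`es: missing ${key}`);

  return errors;
}
```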

Web App

The Web App represents the website used by job candidates.

Most of the app source code is inside the src folder.

Launch the development environment

To work with the app in different environments, package.json defines several NPM scripts that start the development environment in different ways:

- dev: runs the app in development, client-side-only mode. It lets you use the mock server and test features quickly, since it uses HMR (Hot Module Replacement) to refresh the content as soon as the code is saved.

- ssr:dev: runs the app in development, server-side rendering (SSR) mode. It is useful to verify that the app works correctly with SSR and renders SEO-relevant details, but since the app gets built, the development cycle is slower: you need to restart the script every time you apply a change.

- ssr:local: runs the app as the ssr:dev script does, but in the local environment. This is the right script to use when working on a feature of the Monolith Web App that needs to interact with this project.

- ssr:sbx: runs the app as the ssr:dev script does, but in the sandbox (sbx) environment.

- ssr:pre: runs the app as the ssr:dev script does, but in the pre-production (pre) environment.

- ssr:pro: runs the app as the ssr:dev script does, but in the production (pro) environment.

Choose portal (net/it)

While working in development mode (dev, ssr:dev), you can specify whether the Web App should launch the Spanish or the Italian version of the platform.

By default, the Web App starts with the Spanish version. To switch to the Italian portal, run the scripts described above with the PORTAL env variable set:

PORTAL=it npm run dev

Enable Ads for development

The easiest way to enable Ads on a page and correctly render content inside the Roadblock banners is to add the ast_test=true query parameter.

With this query parameter in the URL of the page under development, you will be able to load content inside the placed banners (TopBanner, SideBanner, etc.) and verify that they render at their respective sizes.
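When building links to pages under development (for example in a bookmarklet or an e2e test), the parameter can be added with the standard URL API instead of string concatenation, so any existing query parameters are preserved. withAdsTest is a hypothetical helper name, not part of the codebase.

```typescript
// Hypothetical helper: returns the page URL with ast_test=true, which enables ad content in development.
function withAdsTest(pageUrl: string): string {
  const url = new URL(pageUrl);
  // set() adds the parameter, or overwrites it if the URL already has one.
  url.searchParams.set("ast_test", "true");
  return url.toString();
}
```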

Render a page WITHOUT ads content

http://localhost:3000/candidate/cv/view/index.xhtml

Render a page WITH ads content

http://localhost:3000/candidate/cv/view/index.xhtml?ast_test=true

Run e2e tests

To run the Cypress e2e tests locally, just use the test:e2e:dev NPM script:

npm run test:e2e:dev

This immediately downloads the necessary Cypress dependencies (if missing) and initializes the Cypress playground.

A similar NPM script (test:e2e:ci) runs the same tests during the CI process.

Mock endpoint response

Deploy

This section contains some useful instructions on how to deploy the Web App.

Release links

https://artifactory.mpi-internal.com/artifactory/webapp/#/artifacts/browse/tree/General/docker-local/scmspain/frontend-ij--web-app

Automatic installation

When a PR is merged into master, a Jenkins job runs automatically.

This job runs the acceptance tests; if they finish successfully, you then have to launch the production deploy manually. This step is part of the same Jenkins job and is named "Promote to prod" (you need to be logged in):

https://jenkins.dev.infojobs.net/view/AWS%20PROD/view/REACT/job/frontend-ij--web-app-pipeline/view/tags/

Manual installation directly to PROD

Once Travis finishes successfully, launch this job with the default values and the Environment option set to "Production".

This option skips the acceptance tests and deploys directly to PROD.

https://jenkins.dev.infojobs.net/view/AWS%20PROD/view/REACT/job/frontend-ij--web-app/

Hotfix installation

To restore an old Web App AMI, launch the job for manual installations and fill in the REACT_AMI_NAME parameter as indicated in the job.

Installation in Sandbox (SBX) environment

The Web App can be installed in the SBX environment with the following Jenkins job:

https://jenkins.dev.infojobs.net/view/AWS%20PROD2/job/InstAWS_Sbx_WebApp/

This job needs two parameters:

- WEBAPP_AMI_NAME: By default, this parameter has the value ACTIVE_IN_PROD, which means the pipeline takes the version currently running in the Production environment. To deploy another version, enter the preproduction AMI version, for instance: IJReact-201911180851-preproduction. To deploy the version of a specific Pull Request, you need to uncomment these two stages in .travis.yml:

  - stage: build preproduction container

  - stage: build AMI PR

  A new AMI version will then be created with the PR number and the commit id (for instance: IJReact-pr-744-0d97ba8). Enter this AMI version.

- VPC_LABEL: This parameter shouldn't be modified. It is related to the AWS VPC network where the Web App will be installed.

Each new installation of EMPLEO in the Sandbox environment automatically installs the ACTIVE_IN_PROD version of the Web App.

Installation in the Manual PRE environment

The Web App can be installed in the Manual PRE environment with the following Jenkins job:

https://jenkins.dev.infojobs.net/view/Development/view/Installations%20-%20AWS%20Manual/job/EmpleoPREenvironmentInstall/

Deploy a Pre/Pro image to Manual Environment

This job has been adapted to deploy a Web App version through the WEBAPP_VERSION parameter, whose default value is LAST_VERSION.

The LAST_VERSION value means that Jenkins deploys the preproduction image of the Web App version currently installed in PRODUCTION.

To install another version, fill in the parameter as in the following example: IJReact-201912121635-preproduction
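The image names used by these jobs follow recognizable patterns, so a value can be sanity-checked before pasting it into WEBAPP_VERSION or WEBAPP_AMI_NAME. The patterns below are inferred only from the examples in this README (IJReact-<12-digit timestamp>-preproduction and IJReact-pr-<number>-<commit>), so treat them as assumptions; parseWebAppImage is a hypothetical helper.

```typescript
// Patterns inferred from the examples in this README; the real pipelines may accept others.
type WebAppImage =
  | { kind: "alias"; name: string } // job defaults: ACTIVE_IN_PROD or LAST_VERSION
  | { kind: "preproduction"; builtAt: string } // YYYYMMDDHHmm timestamp
  | { kind: "pr"; prNumber: number; commit: string };

const ALIASES = ["ACTIVE_IN_PROD", "LAST_VERSION"];

// Returns the parsed image name, or null when the value matches no known pattern.
function parseWebAppImage(value: string): WebAppImage | null {
  if (ALIASES.includes(value)) return { kind: "alias", name: value };

  const pre = /^IJReact-(\d{12})-preproduction$/.exec(value);
  if (pre) return { kind: "preproduction", builtAt: pre[1] };

  // Case-insensitive because the README shows both "pr" and "PR".
  const pr = /^IJReact-pr-(\d+)-([0-9a-f]{7,40})$/i.exec(value);
  if (pr) return { kind: "pr", prNumber: Number(pr[1]), commit: pr[2] };

  return null;
}
```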

Deploy a specific PR to a manual environment

The .travis.yml file contains two commented-out steps (L42 to L58). To deploy a particular PR to a manual environment, the PR must have this code uncommented at least once, so that its Docker image is published to Artifactory. Steps to follow:

- Code on a branch.

- Uncomment the steps in the .travis.yml file.

- Push and create a PR.

- Wait until Travis is done.

- Go to the Jenkins job and start the build process with the right parameters (WEBAPP_VERSION IJReact-PR-xxx-xxxx).

- Comment those steps out again and push (keep in mind that once you comment those steps out, no new image will be published to Artifactory for those commits).

Set up proxy for manual env

You need a browser extension that allows you to set a proxy. The idea is to map the requests to the manual environment DNS. Steps:

- Go to the console output of the Jenkins job for the manual deploy.

- Search for "Squid DNS": you will find the DNS for the manual environment and the port (3128).

- Set up your proxy to map "www.infojobsqaaws.net" to that DNS and port.
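If your proxy extension accepts a PAC (Proxy Auto-Config) script, the mapping above can be expressed as one. The sketch below generates such a script so that only www.infojobsqaaws.net goes through the Squid proxy and everything else connects directly. buildPacScript is a hypothetical helper, squid.example.internal in the test is a placeholder for the "Squid DNS" value, and whether PAC scripts are supported depends on the extension you use.

```typescript
// Builds a PAC script routing only the QA host through the manual environment's Squid proxy.
// squidHost is the "Squid DNS" value shown in the Jenkins console output.
function buildPacScript(squidHost: string, port = 3128, targetHost = "www.infojobsqaaws.net"): string {
  return [
    "function FindProxyForURL(url, host) {",
    `  if (host === "${targetHost}") {`,
    `    return "PROXY ${squidHost}:${port}";`,
    "  }",
    '  return "DIRECT";', // every other host bypasses the proxy
    "}",
  ].join("\n");
}
```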

Dashboards

- Client and Server JS errors:

Services accessible from the Candidate's Web App

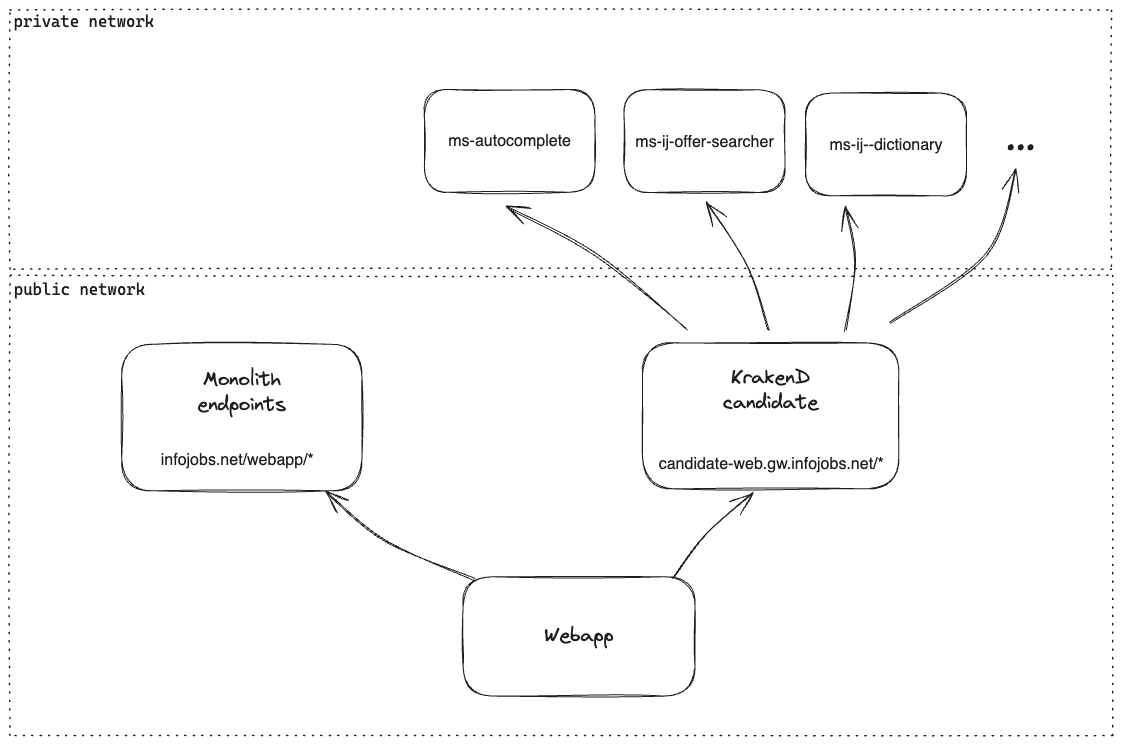

The image above shows the services/endpoints accessible from the candidates' web application:

- Monolith endpoints with cookie-based authentication, starting with infojobs.net/webapp/*

- KrakenD for candidates, which allows access to all defined microservices.

Troubleshooting

Sometimes the Web App may not run correctly, for example because of cached dependencies or a failure resolving another dependency.

In these cases, try to follow this process to restart with a fresh version of the codebase:

- Checkout the master branch and pull the latest code:

git checkout master

git pull

- Clean and reinstall all the existing dependency modules and the dependency tree:

npm run deps:install

This script takes care of removing the node_modules folder and the package-lock.json file, then installs the dependencies again from scratch.

If this approach doesn't work, contact one of the app maintainers for support.

Performance

Objective: Measure the performance of a web page.

Web Vitals and Core Web Vitals

Web Vitals is an initiative by Google to provide unified guidance for quality signals that are essential to delivering a great user experience on the web.

Core Web Vitals are the subset of Web Vitals that apply to all web pages, should be measured by all site owners, and will be surfaced across all Google tools.

Each of the Core Web Vitals represents a distinct facet of the user experience, is measurable in the field, and reflects the real-world experience of a critical user-centric outcome.

The metrics that make up Core Web Vitals will evolve over time. The current set for 2020 focuses on three aspects of the user experience—loading, interactivity, and visual stability—and includes the following metrics (and their respective thresholds):

- Largest Contentful Paint (LCP): measures loading performance. To provide a good user experience, LCP should occur within 2.5 seconds of when the page first starts loading.

- First Input Delay (FID): measures interactivity. To provide a good user experience, pages should have a FID of 100 milliseconds or less.

- Cumulative Layout Shift (CLS): measures visual stability. To provide a good user experience, pages should maintain a CLS of 0.1 or less.
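The thresholds above translate directly into a rating function. The "good" limits come from the list above; the upper "needs improvement" limits (4 s for LCP, 300 ms for FID, 0.25 for CLS) come from Google's published Core Web Vitals guidance rather than from this README. Google assesses each metric at the 75th percentile of page loads. The function names are hypothetical.

```typescript
type Metric = "LCP" | "FID" | "CLS";
type Rating = "good" | "needs-improvement" | "poor";

// [good upper bound, needs-improvement upper bound]; LCP and FID in milliseconds, CLS unitless.
const THRESHOLDS: Record<Metric, [number, number]> = {
  LCP: [2500, 4000],
  FID: [100, 300],
  CLS: [0.1, 0.25],
};

function rate(metric: Metric, value: number): Rating {
  const [good, poor] = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= poor) return "needs-improvement";
  return "poor";
}

// A page passes when every metric, measured at the 75th percentile, is rated "good".
function passesCoreWebVitals(p75: Record<Metric, number>): boolean {
  return (Object.keys(THRESHOLDS) as Metric[]).every((m) => rate(m, p75[m]) === "good");
}
```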

How are metrics measured?

Performance metrics are generally measured in one of two ways:

- In the lab: using tools to simulate a page load in a consistent, controlled environment

- In the field: on real users actually loading and interacting with the page

There are plenty of ways to do this, but we currently use two main ones: the PageSpeed Insights API and Search Console's Core Web Vitals report. Both are based on real-user data, so they fall into the field category.

Read more: User-centric performance metrics

PageSpeed Insights

English version: https://developers.google.com/speed/docs/insights/v5/about#differences

- In the field or in the lab, depending on Google page data.

Real-user experience data in PSI is powered by the Chrome User Experience Report (CrUX) dataset. PSI reports real users' First Contentful Paint (FCP), First Input Delay (FID), Largest Contentful Paint (LCP), and Cumulative Layout Shift (CLS) experiences over the previous 28-day collection period. PSI also reports experiences for experimental metrics Interaction to Next Paint (INP) and Time to First Byte (TTFB).

In order to show user experience data for a given page, there must be sufficient data for it to be included in the CrUX dataset. A page might not have sufficient data if it has been recently published or has too few samples from real users. When this happens, PSI will fall back to origin-level granularity, which encompasses all user experiences on all pages of the website. Sometimes the origin may also have insufficient data, in which case PSI will be unable to show any real-user experience data.

PageSpeed Insights Website

- Get the results directly from the PageSpeed website

- For example: https://pagespeed.web.dev/report?url=https%3A%2F%2Fwww.infojobs.net%2Fjobsearch%2Fsearch-results%2Flist.xhtml&form_factor=desktop

PageSpeed Insights Datadog Integration

How does it work?

- There is one repository that defines the pages and the strategy (mobile or desktop) that we want to measure.

- It uses PageSpeed Insights API to get data.

- It sends the data to Datadog.
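The flow described above (pick a page and a strategy, call the PageSpeed Insights API, read the field data) can be sketched as follows. The endpoint and its url, strategy and key query parameters belong to the public PSI v5 API. The response interfaces are a hand-written subset, the origin_fallback handling reflects our understanding of the response and should be checked against the API reference, and all function names are hypothetical, not taken from the internal repository. Sending the result to Datadog is left out.

```typescript
// Hand-written subset of the PSI v5 response: only the field-data parts used below.
interface FieldMetric {
  percentile: number;
  category: string;
}
interface FieldData {
  metrics?: Record<string, FieldMetric>;
  origin_fallback?: boolean; // assumed to be true when page data was replaced by origin data
}
interface PsiResponse {
  loadingExperience?: FieldData;
  originLoadingExperience?: FieldData;
}
interface FieldSelection {
  level: "page" | "origin";
  metrics: Record<string, FieldMetric>;
}

const PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed";

function buildPsiRequestUrl(pageUrl: string, strategy: "mobile" | "desktop", apiKey?: string): string {
  const url = new URL(PSI_ENDPOINT);
  url.searchParams.set("url", pageUrl);
  url.searchParams.set("strategy", strategy);
  if (apiKey) url.searchParams.set("key", apiKey);
  return url.toString();
}

// Prefers page-level CrUX data and falls back to origin-level data, as described above.
function pickFieldData(res: PsiResponse): FieldSelection | null {
  const page = res.loadingExperience;
  if (page?.metrics && !page.origin_fallback) return { level: "page", metrics: page.metrics };
  const origin = res.originLoadingExperience?.metrics ?? page?.metrics;
  return origin ? { level: "origin", metrics: origin } : null;
}

// Uses the global fetch available in Node 18+ and browsers.
async function fetchFieldData(pageUrl: string, strategy: "mobile" | "desktop", apiKey?: string) {
  const response = await fetch(buildPsiRequestUrl(pageUrl, strategy, apiKey));
  if (!response.ok) throw new Error(`PSI request failed: ${response.status}`);
  return pickFieldData((await response.json()) as PsiResponse);
}
```

Keeping the URL building and the fallback selection as pure functions means they can be unit-tested without calling the real API.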

- Datadog Dashboards with Synthetic Lighthouse Metrics in mobile:

Search Console's Core Web Vitals report

- It is available inside Search Console