dumpster-dive

v5.6.3

get a wikipedia dump parsed into mongodb

dumpster-dive is a node script that puts a highly-queryable wikipedia on your computer in a nice afternoon.

It uses worker-nodes to process pages in parallel, and wtf_wikipedia to turn wikiscript into whatever JSON you need.
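One common way for several workers to share one huge file is to give each a contiguous byte range. The sketch below assumes that approach; `splitIntoRanges` is a hypothetical name, not part of dumpster-dive, and its real partitioning may differ. A worker would seek to its start offset, skip ahead to the next `<page>` tag, and finish whatever page straddles its end offset, so each page is handled exactly once.

```javascript
// Hypothetical helper: divide a file of `fileSize` bytes into at most
// `workers` contiguous, non-overlapping [start, end) byte ranges.
function splitIntoRanges(fileSize, workers) {
  const ranges = [];
  const chunk = Math.ceil(fileSize / workers);
  for (let i = 0; i < workers; i++) {
    const start = i * chunk;
    const end = Math.min(start + chunk, fileSize);
    if (start >= end) break; // more workers than bytes: stop early
    ranges.push({ start, end });
  }
  return ranges;
}

// e.g. a 60GB uncompressed dump shared by 8 workers
const GB = 1024 ** 3;
console.log(splitIntoRanges(60 * GB, 8));
```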

this library writes to a database; if you'd like to simply write files to the filesystem, use dumpster-dip instead.

npm install -g dumpster-dive

😎 API

const dumpster = require('dumpster-dive');

dumpster({ file: './enwiki-latest-pages-articles.xml', db: 'enwiki' }, () => console.log('done!'));

Command-Line:

dumpster /path/to/my-wikipedia-article-dump.xml --citations=false --images=false

then check 'em out in mongo:

$ mongo # enter the mongo shell

use enwiki // grab the database

db.pages.count()

// 4,926,056...

db.pages.find({title:"Toronto"})[0].categories

// [ "Former colonial capitals in Canada",

//   "Populated places established in 1793" ...]

Steps:

1️⃣ you can do this.

you can do this. just a few GB. you can do this.

2️⃣ get ready

Install Node.js (at least v6) and MongoDB (at least v3).

# install this script

npm install -g dumpster-dive # (that gives you the global command `dumpster`)

# start mongo up

mongod --config /mypath/to/mongod.conf

3️⃣ download a wikipedia

The Afrikaans wikipedia (around 93,000 articles) only takes a few minutes to download, and 5 mins to load into mongo on a macbook:

# download an xml dump (38MB, a couple of minutes)

wget https://dumps.wikimedia.org/afwiki/latest/afwiki-latest-pages-articles.xml.bz2

The English dump is 16GB. The download page is confusing, but you'll want the pages-articles file. For example, the English version is:

wget https://dumps.wikimedia.org/enwiki/latest/enwiki-latest-pages-articles.xml.bz2

4️⃣ unzip it

i know, this sucks. but it makes the parser so much faster.

bzip2 -d ./afwiki-latest-pages-articles.xml.bz2

On a macbook, unzipping en-wikipedia takes an hour or so. This is the most boring part. Eat some lunch.

Uncompressed, the English wikipedia is around 60GB.

5️⃣ OK, start it off

# load it into mongo (10-15 minutes)

dumpster ./afwiki-latest-pages-articles.xml

6️⃣ take a bath

just put some epsom salts in there, it feels great.

The en-wiki dump should take a few hours. Maybe 8. Maybe 4. Have a snack prepared.

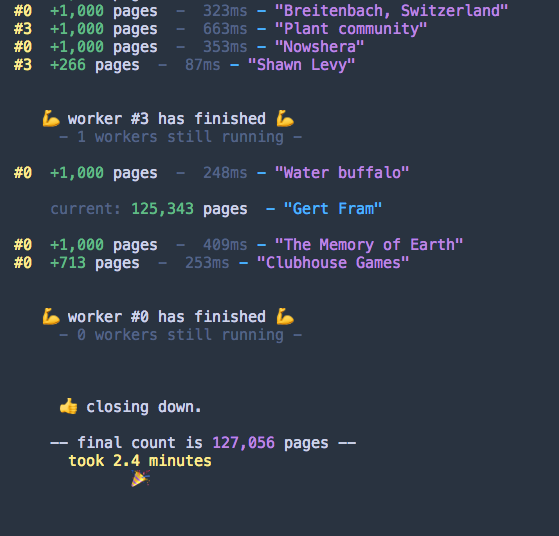

The console will update you every couple seconds to let you know where it's at.

7️⃣ done!

hey, go check out your data; hit up the mongo console:

$ mongo

use afwiki //your db name

//show a couple of pages (skip the first 200, return 2)

db.pages.find().skip(200).limit(2)

//find a specific page

db.pages.findOne({title:"Toronto"}).categories

//find the last page

db.pages.find().sort({$natural:-1}).limit(1)

// all the governors of Kentucky

db.pages.count({ categories : { $eq : "Governors of Kentucky" }})

//pages without images

db.pages.count({ images: {$size: 0} })

alternatively, you can run dumpster-report afwiki to see a quick spot-check of the records it has created across the database.
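If the array queries above look surprising: when a field holds an array, mongo's `$eq` matches a document if any element equals the value, and `$size` matches on the array's length. The plain-JavaScript sketch below mimics just those two cases over a small in-memory array so the counts are easier to reason about; it is an illustration, not how mongo evaluates queries, and the sample records are made up.

```javascript
// Made-up sample records shaped roughly like the `pages` collection
const pages = [
  { _id: 'A', categories: ['Governors of Kentucky', 'People from Kentucky'], images: [] },
  { _id: 'B', categories: ['Populated places established in 1793'], images: [{ file: 'b.jpg' }] },
  { _id: 'C', categories: ['Governors of Kentucky'], images: [{ file: 'c.jpg' }] },
];

// Like { categories: { $eq: value } }: an array field matches if ANY element equals value
const countInCategory = (docs, value) =>
  docs.filter((d) => Array.isArray(d.categories) && d.categories.includes(value)).length;

// Like { images: { $size: n } }: matches arrays with exactly n elements
const countWithImageCount = (docs, n) =>
  docs.filter((d) => Array.isArray(d.images) && d.images.length === n).length;

console.log(countInCategory(pages, 'Governors of Kentucky')); // 2
console.log(countWithImageCount(pages, 0)); // 1
```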

Same for the English wikipedia:

the English wikipedia works the same way, but the download will take an afternoon, and the loading/parsing a couple of hours. The en-wiki dump is 13GB (for enwiki-20170901-pages-articles.xml.bz2) and becomes a pretty legit mongo collection uncompressed: something like 51GB, but mongo can do it 💪.

Options:

dumpster follows all the conventions of wtf_wikipedia, and you can pass in any fields for it to include in its json.
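The command-line flags and the JavaScript options appear to be two spellings of the same settings (for example `--citations=false` on the command line and `citations: false` in code). As a rough sketch of that correspondence, here is a hypothetical `flagsToOptions` helper that turns `--key=value` arguments into an options object. It is not dumpster's actual argument parser, and it assumes a bare flag like `--plaintext` means `true`.

```javascript
// Hypothetical: convert CLI-style args into a dumpster-style options object.
// Not dumpster's real parser; it only illustrates the naming convention.
function flagsToOptions(args) {
  const opts = {};
  for (const arg of args) {
    if (!arg.startsWith('--')) {
      opts.file = arg; // assume the positional argument is the dump path
      continue;
    }
    const body = arg.slice(2);
    const eq = body.indexOf('='); // split on the FIRST '=' only (urls may contain '=')
    const key = eq === -1 ? body : body.slice(0, eq);
    const raw = eq === -1 ? undefined : body.slice(eq + 1);
    if (raw === undefined) opts[key] = true; // bare flag, e.g. --plaintext
    else if (raw === 'true' || raw === 'false') opts[key] = raw === 'true';
    else if (raw !== '' && !Number.isNaN(Number(raw))) opts[key] = Number(raw);
    else opts[key] = raw;
  }
  return opts;
}

console.log(flagsToOptions(['./my-wiki-dump.xml', '--citations=false', '--plaintext', '--batch_size=100']));
```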

- human-readable plaintext --plaintext

dumpster({ file: './myfile.xml.bz2', db: 'enwiki', plaintext: true, categories: false });

/*

[{

_id:'Toronto',

title:'Toronto',

plaintext:'Toronto is the most populous city in Canada and the provincial capital...'

}]

*/

- disambiguation pages / redirects: --skip_disambig, --skip_redirects

by default, dumpster skips entries in the dump that aren't full-on articles; you can keep them by setting these to false:

let obj = {

file: './path/enwiki-latest-pages-articles.xml.bz2',

db: 'enwiki',

skip_redirects: false,

skip_disambig: false

};

dumpster(obj, () => console.log('done!'));

- reducing file-size: you can tell wtf_wikipedia what you want it to parse, and which data you don't need:

dumpster ./my-wiki-dump.xml --infoboxes=false --citations=false --categories=false --links=false

- custom json formatting

you can grab whatever data you want by passing in a custom function. It takes a wtf_wikipedia Doc object, and you can return your cool data:

let obj = {

file: path,

db: dbName,

custom: function (doc) {

return {

_id: doc.title(), //for duplicate-detection

title: doc.title(), //for the logger..

sections: doc.sections().map((i) => i.json({ encode: true })),

categories: doc.categories() //whatever you want!

};

}

};

dumpster(obj, () => console.log('custom wikipedia!'));

if you're using any .json() methods, pass {encode:true} to avoid mongo complaints about key names.
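The key-name trouble comes from characters like `$` and `.`, which mongo treats specially in field names. Below is a minimal sketch of the escaping scheme listed under "encoding special characters" (`\` to `\\`, `$` to `\u0024`, `.` to `\u002e`), applied recursively to object keys. The function names are hypothetical and this is not wtf_wikipedia's actual implementation. The backslash has to be escaped first; otherwise the backslashes introduced by the other two replacements would get escaped again.

```javascript
// Hypothetical helpers sketching the escaping described in the Addendum.
// Backslashes go first so the escapes added for `$` and `.` aren't re-escaped.
function encodeKey(key) {
  return key
    .replace(/\\/g, '\\\\') // \ -> \\
    .replace(/\$/g, '\\u0024') // $ -> \u0024
    .replace(/\./g, '\\u002e'); // . -> \u002e
}

// Walk arrays and plain objects, encoding every key (values are left alone)
function encodeKeys(value) {
  if (Array.isArray(value)) return value.map(encodeKeys);
  if (value !== null && typeof value === 'object' && Object.getPrototypeOf(value) === Object.prototype) {
    const out = {};
    for (const [k, v] of Object.entries(value)) out[encodeKey(k)] = encodeKeys(v);
    return out;
  }
  return value;
}

console.log(encodeKeys({ 'pop.2016': 2731571, infobox: { $ref: 'x' } }));
```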

non-main namespaces: do you want to parse all the navboxes? Change namespace in ./config.js to another number (navboxes are templates, which live in namespace 10).

remote db: if your database is non-local, or requires authentication, set it like this:

dumpster({ file: './my-wiki-dump.xml', db: 'enwiki', db_url: 'mongodb://username:password@localhost:27017/' }, () => console.log('done!'));

how it works:

this library uses:

- sunday-driver to stream the gnarly xml file

- wtf_wikipedia to brute-parse the article wikiscript contents into JSON.
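Streaming matters because a dump this size won't fit in memory: the file arrives in chunks, and a `<page>` element can be cut in half at a chunk boundary. The sketch below shows that buffering idea with a hypothetical `PageSplitter` class. sunday-driver's real interface is different, and this sketch only cuts out raw `<page>…</page>` strings; it does not parse the XML inside them.

```javascript
// Hypothetical sketch: collect complete <page>...</page> blocks from a
// stream of text chunks, keeping any partial page buffered for the next chunk.
class PageSplitter {
  constructor() {
    this.buffer = '';
  }

  // Feed one chunk; returns the complete page strings it finished.
  push(chunk) {
    this.buffer += chunk;
    const pages = [];
    for (;;) {
      const start = this.buffer.indexOf('<page>');
      if (start === -1) {
        // No opening tag yet: keep a short tail in case '<page>' was split.
        this.buffer = this.buffer.slice(-5);
        break;
      }
      const end = this.buffer.indexOf('</page>', start);
      if (end === -1) {
        this.buffer = this.buffer.slice(start); // wait for the rest of this page
        break;
      }
      const stop = end + '</page>'.length;
      pages.push(this.buffer.slice(start, stop));
      this.buffer = this.buffer.slice(stop);
    }
    return pages;
  }
}

// Chunks deliberately split in the middle of tags
const splitter = new PageSplitter();
const chunks = ['<mediawiki><pa', 'ge><title>A</title></pa', 'ge><page><title>B</ti', 'tle></page></mediawiki>'];
for (const chunk of chunks) {
  for (const page of splitter.push(chunk)) console.log(page);
}
// prints <page><title>A</title></page> then <page><title>B</title></page>
```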

Addendum:

_ids

since MediaWiki requires every page title within a wiki to be unique, we also use titles for the mongo _id fields.

The benefit is that if it crashes halfway through, or if you want to run it again, re-running the script will not multiply your data: we do an 'upsert' on each record.
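To see why re-running is safe, here is a sketch of what a batch of title-keyed upserts could look like. It builds operations in the shape the official Node.js MongoDB driver's `collection.bulkWrite()` accepts (`replaceOne` with `upsert: true`), then replays them against an in-memory `Map` standing in for the collection. This is an assumption about how such writes can be done, not a copy of dumpster-dive's code; `toUpsertOps` and `applyToMap` are hypothetical names.

```javascript
// Hypothetical sketch: key each page by its title and upsert it.
function toUpsertOps(pages) {
  return pages.map((page) => {
    const doc = { ...page, _id: page.title }; // title doubles as the unique _id
    return { replaceOne: { filter: { _id: doc._id }, replacement: doc, upsert: true } };
  });
}

// Stand-in for the database: apply the ops to a Map keyed by _id.
function applyToMap(store, ops) {
  for (const { replaceOne } of ops) {
    store.set(replaceOne.filter._id, replaceOne.replacement); // insert or overwrite
  }
  return store;
}

const batch = toUpsertOps([{ title: 'Toronto' }, { title: 'Ottawa' }]);
const store = new Map();
applyToMap(store, batch); // first run
applyToMap(store, batch); // run it again, e.g. after a crash
console.log(store.size); // 2, not 4
```

With a real connection, the same `batch` array could be handed to `bulkWrite`; because every operation is filtered on `_id`, a second pass overwrites documents instead of duplicating them.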

encoding special characters

mongo has some opinions on special characters in key names. It is weird, but we're using this standard(ish) form of encoding them:

\ --> \\

$ --> \u0024

. --> \u002e

Non-wikipedias

This library should also work on other wikis with standard xml dumps from MediaWiki (except wikidata!). I haven't tested them, but wtf_wikipedia supports all sorts of non-standard wiktionary/wikivoyage templates, and if you can get a bzip2-compressed xml dump from your wiki, this should work fine. Open an issue if you find something weird.

did it break?

if the script trips at a specific spot, it helps to know which article it breaks on. Set verbose:true:

dumpster({

file: '/path/to/file.xml',

verbose: true

});

this prints out every page's title as it's processed.

16MB limit?

To go faster, this library writes a ton of articles at a time (default 800). Mongo has a 16MB limit on writes, so if you're adding a lot of data, like LaTeX or HTML, it may make sense to turn this down:

dumpster ./my-wiki-dump.xml --batch_size=100

that should do the trick.
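If you'd rather not guess a number, one option is to cap each batch by approximate size as well as by count. The sketch below is a hypothetical `batchDocs` helper (not a dumpster option) that starts a new batch whenever adding a document would exceed either limit. It uses JSON byte length as a rough stand-in for BSON size, which is close but not identical, so leave some headroom under 16MB in practice.

```javascript
// Hypothetical helper: group docs into batches limited by count AND by an
// approximate byte size (JSON length as a rough proxy for BSON size).
function batchDocs(docs, maxCount = 800, maxBytes = 16 * 1024 * 1024) {
  const batches = [];
  let current = [];
  let currentBytes = 0;
  for (const doc of docs) {
    const bytes = Buffer.byteLength(JSON.stringify(doc), 'utf8');
    const full = current.length >= maxCount || currentBytes + bytes > maxBytes;
    if (current.length > 0 && full) {
      batches.push(current);
      current = [];
      currentBytes = 0;
    }
    // A single doc larger than maxBytes still gets its own batch here;
    // mongo would reject a document over 16MB anyway.
    current.push(doc);
    currentBytes += bytes;
  }
  if (current.length > 0) batches.push(current);
  return batches;
}

// Three docs of roughly 6MB each: only two fit under the 16MB cap
const big = 'x'.repeat(6 * 1024 * 1024);
const docs = [{ _id: 'a', html: big }, { _id: 'b', html: big }, { _id: 'c', html: big }];
console.log(batchDocs(docs).map((b) => b.length)); // [ 2, 1 ]
```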

PRs welcome!

This is an important project, come help us out.