@stable-canvas/comfyui-client

v1.4.1

API client for ComfyUI that supports both NodeJS and Browser environments. It provides full support for all RESTful and WebSocket APIs.

@stable-canvas/comfyui-client

JavaScript API client for ComfyUI that supports both NodeJS and Browser environments.

This client provides comprehensive support for all available RESTful and WebSocket APIs, with built-in TypeScript typings for enhanced development experience. Additionally, it introduces a programmable workflow interface, making it easy to create and manage workflows in a human-readable format.

documentations:

examples:

Features

- Environment Compatibility: Seamlessly functions in both NodeJS and Browser environments.

- Comprehensive API Support: Provides full support for all available RESTful and WebSocket APIs.

- TypeScript Typings: Comes with built-in TypeScript support for type safety and better development experience.

- Programmable Workflows: Introduces a human-readable and highly customizable workflow interface inspired by this issue and this library.

- Ease of Use: Both implementation and usage are designed to be intuitive and user-friendly.

- Zero Dependencies: This library is designed to minimize the introduction of external dependencies and is currently dependency-free.

Installation

Use npm, yarn, or pnpm to install the @stable-canvas/comfyui-client package.

pnpm add @stable-canvas/comfyui-client

Dependencies (optional)

To fully utilize the features of this library, your ComfyUI installation needs to support custom nodes for external extensions.

These nodes are used to accept base64 encoded input and forward WebSocket requests.

Note: If you don't need functionalities like returning images via WebSocket, image to image, or inpainting, you don't need to install these nodes.

- comfyui-tooling-nodes: A crucial extension that provides the ability to handle base64 API transmissions; image to image and inpainting functionalities depend on it.

- efficiency-nodes-comfyui: This library provides a convenient LoRA manager, which is required in the src/pipeline/efficient module.

Browser Polyfill (optional)

If you plan to use the pipeline feature in a browser environment, you'll need to install the following dependencies and convert File/Image types to Buffer types.

- Buffer: buffer polyfill for browser

- blob-to-buffer: convert blob to buffer

Client Usage

First, import the Client class from the package.

import { Client } from "@stable-canvas/comfyui-client";

Client instance, in Browser

const client = new Client({

api_host: "127.0.0.1:8188",

})

// connect ws client

client.connect();

Client instance, in NodeJs

import WebSocket from "ws";

import fetch from "node-fetch";

const client = new Client({

//...

WebSocket,

fetch,

});

// connect ws client

client.connect();

Advanced functions

In addition to the standard API interfaces provided by ComfyUI, this library wraps them to provide higher-level calls.

enqueue

const result = await client.enqueue(

{ /* workflow prompt */ },

{

progress: ({ max, value }) => console.log(`progress: ${value}/${max}`),

}

);

It's very simple: it covers the entire prompt interface life cycle, waits for the request to finish, and returns the collected results.

If you don't want to use WebSocket, you can use enqueue_polling instead. It behaves similarly to enqueue but polls the task status over REST HTTP.
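The polling idea can be sketched independently of the library. The helper below is a minimal illustration, not the library's implementation: `pollUntilDone`, `TaskStatus`, and `fakeStatus` are hypothetical names, and the stub stands in for a real REST request.

```typescript
// Hypothetical status shape; a real ComfyUI response is richer.
type TaskStatus = { done: boolean; outputs?: string[] };

const sleep = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Call `fetchStatus` repeatedly until it reports completion
// or the attempt budget is exhausted.
async function pollUntilDone(
  fetchStatus: () => Promise<TaskStatus>,
  intervalMs = 1000,
  maxAttempts = 60
): Promise<TaskStatus> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const current = await fetchStatus();
    if (current.done) return current;
    await sleep(intervalMs);
  }
  throw new Error("task did not finish within the polling budget");
}

// Stub standing in for a REST call: reports completion on the third poll.
let calls = 0;
const fakeStatus = async (): Promise<TaskStatus> => {
  calls += 1;
  return calls < 3 ? { done: false } : { done: true, outputs: ["image.png"] };
};
```

Compared with WebSocket, polling trades latency and extra requests for simpler connectivity, which can help behind proxies that block WebSocket upgrades.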

Get XXX

Sometimes you may need to check the configuration of ComfyUI, such as whether a deployment contains a needed model or LoRA. These interfaces are useful for that:

- getSamplers

- getSchedulers

- getSDModels

- getCNetModels

- getUpscaleModels

- getHyperNetworks

- getLoRAs

- getVAEs
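As a rough sketch of how such a check might look, the snippet below compares a list of required model names against a list of available ones. It assumes the getters resolve to plain lists of names; the actual return shape is not specified here and may differ. `findMissing` and the sample values are hypothetical.

```typescript
// Return the required names that are absent from the available list.
function findMissing(available: string[], required: string[]): string[] {
  const known = new Set(available);
  return required.filter((modelName) => !known.has(modelName));
}

// Hypothetical values; in practice `availableModels` would come from a call
// such as getSDModels() or getLoRAs() on a connected client.
const availableModels = ["sdxl.safetensors", "sd15.safetensors"];
const requiredModels = ["sdxl.safetensors", "lofi_v5.baked.fp16.safetensors"];

const missingModels = findMissing(availableModels, requiredModels);
if (missingModels.length > 0) {
  console.warn(`Missing models: ${missingModels.join(", ")}`);
}
```

Running a check like this before enqueueing a workflow lets you fail early with a clear message instead of waiting for the server to reject the prompt.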

Pipeline Usage

The pipeline is a simple DSL implementation in this library that allows for easy creation of simple workflows and immediate results.

Text to Image

import { BasePipe } from "@stable-canvas/comfyui-client";

new BasePipe()

.with(/* client instance */)

.model("sdxl.safetensors")

.prompt("A beautiful sunset over the mountains")

.negative("Low quality, blurry")

.size(1024, 768)

.steps(35)

.cfg(5)

.save()

.wait() // call wait() to start the promise chain

.then(({ images }) => {

// process images array

})

.finally(() => client.close());

Image to Image with Task Status Monitoring

import { BasePipe } from "@stable-canvas/comfyui-client";

import fs from 'fs';

new BasePipe()

.with(/* client instance */)

.model("sdxl.safetensors")

.image(

fs.readFileSync("path-to-your-input-image.jpg")

)

// .mask(maskImage) // call mask() to add a mask

.prompt("A husky with pearls, oil painting style")

.negative("Low quality, blurry")

.size(640, 960)

.steps(35)

.cfg(7)

.denoise(0.5)

.save()

.on("progress", console.log)

.wait()

.then(({ images }) => {

// Process generated images

})

.finally(() => client.close());

LoRAs and CNets with Efficient

EfficientPipe is based on the Efficient series nodes provided by efficiency-nodes-comfyui to expand lora/cnet features.

import { EfficientPipe } from "@stable-canvas/comfyui-client";

const input_image = /* image data Buffer() */;

const { images } = await new EfficientPipe()

.with(client)

.model("sd15.safetensors")

.seed()

.image(input_image)

.prompt("furry, A husky girl with pearls, oil painting style")

.negative("low quality, blurry")

.size(640, 960)

.steps(35)

.cfg(5)

.denoise(0.6)

.cnet("control_v11p_sd15_openpose.pth", input_image)

.lora("LowRA.safetensors")

.lora("add_detail.safetensors")

.save()

.wait();

more examples

For complete example code, refer to the files ./examples/nodejs/src/main-pipe-*.ts in the project repository.
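The chained style used by the pipeline examples above is a fluent builder: each setter records a value and returns the same object. The sketch below illustrates only that pattern; `TinyPipe`, `PipeSettings`, and `build` are hypothetical and do not reflect how BasePipe is implemented.

```typescript
// Hypothetical settings a text-to-image pipeline might collect.
interface PipeSettings {
  model?: string;
  prompt?: string;
  negative?: string;
  width?: number;
  height?: number;
  steps?: number;
}

// Each setter stores a value and returns `this`, which enables chaining.
class TinyPipe {
  private settings: PipeSettings = {};

  model(model: string): this {
    this.settings.model = model;
    return this;
  }
  prompt(prompt: string): this {
    this.settings.prompt = prompt;
    return this;
  }
  negative(negative: string): this {
    this.settings.negative = negative;
    return this;
  }
  size(width: number, height: number): this {
    this.settings.width = width;
    this.settings.height = height;
    return this;
  }
  steps(steps: number): this {
    this.settings.steps = steps;
    return this;
  }
  // Return a copy of what has been collected so far.
  build(): PipeSettings {
    return { ...this.settings };
  }
}

const collected = new TinyPipe()
  .model("sdxl.safetensors")
  .prompt("A beautiful sunset over the mountains")
  .size(1024, 768)
  .steps(35)
  .build();
console.log(collected);
```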

Workflow Usage

Programmable/Human-readable pattern

Inspired by this issue and this library, this library provides a programmable workflow interface.

It has the following use cases:

- Interactive GUI Integration: Offers support for seamless integration with ComfyUI's new GUI, enhancing user interaction possibilities.

- LLMs for Workflow Generation: Leverages the ability of large language models to understand JavaScript for creating workflows.

- Cross-Project Workflow Reuse: Enables the sharing and repurposing of workflow components across different projects using ComfyUI.

- Custom Node Creation: Assists in developing and integrating custom nodes into existing workflows for expanded functionality.

- Workflow Visualization: Facilitates a clearer understanding of workflows by translating them into a visual format suitable for ComfyUI's GUI.

- Model Research and Development: Provides a framework for leveraging ComfyUI nodes in machine learning research without execution capabilities.

- Script-Driven Workflow Templates: Enables the generation of templated workflows through scripting for consistent and efficient project setups.

- Web UI-independent Workflow Deployment: Enables the creation and deployment of workflows without reliance on a web-based user interface.

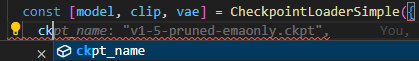

Minimal case

Here is a minimal example demonstrating how to create and execute a simple workflow using this library.

import { Workflow } from "@stable-canvas/comfyui-client";

const workflow = new Workflow();

const cls = workflow.classes;

const [model, clip, vae] = cls.CheckpointLoaderSimple({

ckpt_name: "sdxl.safetensors",

});

const enc = (text: string) => cls.CLIPTextEncode({ text, clip })[0];

const [samples] = cls.KSampler({

seed: Math.floor(Math.random() * 2 ** 32),

steps: 35,

cfg: 4,

sampler_name: "dpmpp_2m_sde_gpu",

scheduler: "karras",

denoise: 1,

model,

positive: enc("best quality, 1girl"),

negative: enc(

"worst quality, bad anatomy, embedding:NG_DeepNegative_V1_75T"

),

latent_image: cls.EmptyLatentImage({

width: 512,

height: 512,

batch_size: 1,

})[0],

});

cls.SaveImage({

filename_prefix: "from-sc-comfy-ui-client",

images: cls.VAEDecode({ samples, vae })[0],

});

console.log(

// This call will return a JSON object that can be used for ComfyUI API calls, primarily for demonstration or debugging purposes

workflow.workflow()

)

Programmable real-world case

Both implementation and usage are extremely simple and human-readable. This programmable approach allows for dynamic workflow creation, enabling loops, conditionals, and reusable functions. It's particularly useful for batch processing, experimenting with different models or prompts, and creating more complex, flexible workflows.

This specific workflow demonstrates the generation of multiple images using two different AI models ("lofi_v5" and "case-h-beta") and four different dress styles. It showcases how to create a reusable image generation pipeline and apply it across various prompts and models efficiently.

Below is a simple example of creating a workflow:

const createWorkflow = () => {

const workflow = new Workflow();

const {

KSampler,

CheckpointLoaderSimple,

EmptyLatentImage,

CLIPTextEncode,

VAEDecode,

SaveImage,

NODE1,

} = workflow.classes;

const width = 640;

const height = 960;

const batch_size = 1;

const seed = Math.floor(Math.random() * 2 ** 32);

const pos = "best quality, 1girl";

const neg = "worst quality, bad anatomy, embedding:NG_DeepNegative_V1_75T";

const model1_name = "lofi_v5.baked.fp16.safetensors";

const model2_name = "case-h-beta.baked.fp16.safetensors";

const sampler_settings = {

seed,

steps: 35,

cfg: 4,

sampler_name: "dpmpp_2m_sde_gpu",

scheduler: "karras",

denoise: 1,

};

const [model1, clip1, vae1] = CheckpointLoaderSimple({

ckpt_name: model1_name,

});

const [model2, clip2, vae2] = CheckpointLoaderSimple({

ckpt_name: model2_name,

});

const dress_case = [

"white yoga",

"black office",

"pink sportswear",

"cosplay",

];

const generate = (model, clip, vae, pos, neg) => {

const [latent_image] = EmptyLatentImage({

width,

height,

batch_size,

});

const [positive] = CLIPTextEncode({ text: pos, clip });

const [negative] = CLIPTextEncode({ text: neg, clip });

const [samples] = KSampler({

...sampler_settings,

model,

positive,

negative,

latent_image,

});

const [image] = VAEDecode({ samples, vae });

return image;

};

const save = (image, filename_prefix) =>

SaveImage({

images: image,

filename_prefix,

});

for (const cloth of dress_case) {

const input_pos1 = `${pos}, ${cloth} dress`;

const image1 = generate(model1, clip1, vae1, input_pos1, neg);

const input_pos2 = `${pos}, ${cloth} dress`;

const image2 = generate(model2, clip2, vae2, input_pos2, neg);

save(image1, `${cloth}-lofi-v5`);

save(image2, `${cloth}-case-h-beta`);

}

return workflow;

};

Yes, you read that right: we repeatedly create some nodes. This might seem counterintuitive, but it's perfectly fine because the workflow is not rendered within the ComfyUI server. Instead, the server executes each node sequentially, so even a workflow with thousands of nodes is executable and correct.
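To see why repeated node creation is harmless, it helps to picture the workflow as a flat JSON graph in which every call appends one entry. The toy builder below is a sketch under that assumption, not the library's implementation: `TinyGraph` and `add` are hypothetical names, and the output mimics the ComfyUI API prompt layout (node ids as keys, `class_type`, `inputs`, and links written as `[node id, output index]`).

```typescript
// A link to another node's output: [node id, output index].
type Link = [string, number];
type InputValue = string | number | Link;
type PromptNode = { class_type: string; inputs: Record<string, InputValue> };

class TinyGraph {
  private nodes: Record<string, PromptNode> = {};
  private nextId = 1;

  // Register a node and return links to its first `outputs` outputs.
  add(classType: string, inputs: Record<string, InputValue>, outputs = 1): Link[] {
    const id = String(this.nextId++);
    this.nodes[id] = { class_type: classType, inputs };
    return Array.from({ length: outputs }, (_, index): Link => [id, index]);
  }

  toJSON(): Record<string, PromptNode> {
    return this.nodes;
  }
}

const graph = new TinyGraph();
const loaderOutputs = graph.add(
  "CheckpointLoaderSimple",
  { ckpt_name: "sdxl.safetensors" },
  3
);
const clip: Link = loaderOutputs[1]!; // second output of the loader

// Calling the same node type in a loop simply yields distinct node ids.
for (const promptText of ["white yoga dress", "black office dress"]) {
  graph.add("CLIPTextEncode", { text: promptText, clip });
}

console.log(JSON.stringify(graph.toJSON(), null, 2));
```

Each loop iteration produces its own `CLIPTextEncode` entry that points back at the shared loader, which is the same shape the real-world example above relies on.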

Notably, you can also leverage all your JavaScript programming knowledge, such as recursion, functional programming, currying, etc. For example, you can do something like this:

const generator = model => clip => vae => pos => neg => {

const [latent_image] = EmptyLatentImage({

width,

height,

batch_size,

});

const [positive] = CLIPTextEncode({ text: pos, clip });

const [negative] = CLIPTextEncode({ text: neg, clip });

const [samples] = KSampler({

...sampler_settings,

model,

positive,

negative,

latent_image,

});

const [image] = VAEDecode({ samples, vae });

return image;

};

const model1_gen = generator(model1)(clip1)(vae1);

Invoke workflow

const wf1 = createWorkflow();

const result = await wf1.invoke(client);

Q: What is the relationship between workflow and client?

A: If you need to refer to complete example code, you can check out the ./examples/nodejs/main*.ts entry files. These contain fully executable code examples ranging from the simplest to slightly more complex workflows.

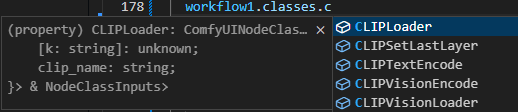

type support

- builtin node types

- builtin node params

- any other node

InvokedWorkflow

If you need to manage the life cycle of your request, then this class can be very convenient

instance

// You can instantiate manually

const invoked = new InvokedWorkflow({ /* workflow payload */ }, client);

// or use the workflow api to instantiate

const invoked = your_workflow.instance();

running

// job enqueue

await invoked.enqueue();

// job result promise

const job_promise = invoked.wait();

// if you want to interrupt it

invoked.interrupt();

// query job status

invoked.query();

Client Plugin

We provide Plugin to expand Client capabilities.
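Conceptually, a function hook like the one in the simple example receives the original function followed by the call arguments, and decides when to delegate. The sketch below shows only that wrapping idea; `withHook` and `Hook` are hypothetical helpers and not part of the library's Plugin API.

```typescript
// A hook receives the original function plus the call arguments.
type Hook<A extends unknown[], R> = (
  original: (...args: A) => R,
  ...args: A
) => R;

// Wrap `original` so every call is routed through `hook`.
function withHook<A extends unknown[], R>(
  original: (...args: A) => R,
  hook: Hook<A, R>
): (...args: A) => R {
  return (...args: A) => hook(original, ...args);
}

const seenCalls: string[] = [];
const addNumbers = (a: number, b: number): number => a + b;

// A logging hook: record the arguments, then delegate to the original.
const loggedAdd = withHook(addNumbers, (original, a, b) => {
  seenCalls.push(`add(${a}, ${b})`);
  return original(a, b);
});

console.log(loggedAdd(2, 3)); // prints: 5
```

Because the hook controls the call to `original`, the same shape can add logging, headers, retries, or short-circuit the call entirely.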

simple example

import { Plugin } from "@stable-canvas/comfyui-client";

export class LoggingPlugin extends Plugin {

constructor() {

super();

this.addHook({

type: "function",

name: "fetch",

fn: async (original, ...args) => {

console.log("fetch args", args);

const resp = await original(...args);

console.log("fetch resp", resp);

return resp;

},

});

}

}

ComfyUI Login Auth

Sometimes you need to add authentication to your ComfyUI node, and there are many ways to implement permission management for ComfyUI.

If you use the ComfyUI-Login extension, you can use the built-in plugins.LoginAuthPlugin to configure the Client to support authentication

import { Client, plugins } from "@stable-canvas/comfyui-client";

const client = /* client instance */;

client.use(

new plugins.LoginAuthPlugin({

token: "MY_TOP_SECRET"

})

);

Events

Subscribing to Custom Events

If your ComfyUI instance emits custom WebSocket events, you can subscribe to them as follows:

client.events.on('your_custom_event_type', (data) => {

// 'data' contains the event payload object

console.log('Received custom event:', data);

});

Handling Unsubscribed Events

To capture and process events that haven't been explicitly subscribed to, use the unhandled event listener:

client.events.on('unhandled', ({ type, data }) => {

// 'type' is the event type

// 'data' is the event payload object

console.log(`Received unhandled event of type '${type}':`, data);

});

Handling All Event Messages

Register the message event to subscribe to all WebSocket messages pushed from ComfyUI

client.events.on('message', (event) => {

if (typeof event.data !== 'string') return;

const { type, data } = JSON.parse(event.data);

// ...

});

Handling Workflow Events

If you've created a workflow instance, you can also subscribe to events emitted by that instance:

const invoked_wk = workflow.instance(client);

const invoked_wk = /* or new InvokedWorkflow() */;

invoked_wk.on("execution_interrupted", () => {

console.log("Workflow execution interrupted");

});

Custom Resolver

Sometimes, you might use ComfyUI to generate non-image outputs, such as music, text, object detection results, etc. In these cases, you'll need a custom resolver, as the default behavior of this library primarily focuses on handling image generation.

Here's how to define a custom resolver:

Suppose your final output node is a custom non-image node whose output might be { "result": "hi, I'm phi3" }.

const resolver = (resp, node_output, ctx) => {

// const { client, prompt_id, node_id } = ctx;

return {

...resp,

data: resp.data ?? node_output.result,

};

};

You can then use this resolver with client.enqueue:

const prompt = { ... };

const resp = await client.enqueue<string>(prompt, { resolver });

console.log(resp.data); // "hi, I'm phi3"

You can also apply the same resolver to a workflow using workflow.invoke:

const workflow = /* class Workflow */;

const result = await workflow.invoke(client, { resolver });

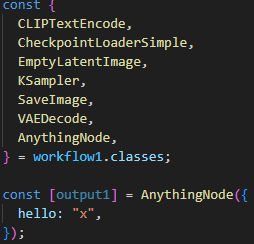

console.log(result.data); // "hi, I'm phi3"Handling Non-Standard Node Names

ComfyUI does not enforce strict naming conventions for nodes, which can lead to custom nodes with names containing spaces or special characters. These names, such as Efficient Loader, DSINE-NormalMapPreprocessor, or Robust Video Matting, are challenging to use directly as variable names in code.
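A quick way to see which node names are affected is to test them against a simplified identifier pattern. This is only an illustration: the ASCII-only regular expression below ignores Unicode identifiers and reserved words, and `needsStringName` is a hypothetical helper.

```typescript
// Rough ASCII-only check for a plain JavaScript identifier.
// Real identifier rules also allow many Unicode letters and exclude
// reserved words; this simplified pattern is enough for illustration.
const IDENTIFIER = /^[A-Za-z_$][A-Za-z0-9_$]*$/;

const needsStringName = (nodeName: string): boolean =>
  !IDENTIFIER.test(nodeName);

const nodeNames = [
  "KSampler",
  "Efficient Loader",
  "DSINE-NormalMapPreprocessor",
  "Robust Video Matting",
];

for (const nodeName of nodeNames) {
  const kind = needsStringName(nodeName) ? "string name" : "identifier";
  console.log(`${nodeName}: ${kind}`);
}
```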

To address this issue, we provide the workflow.node interface. This interface allows you to create nodes using string-based names, regardless of whether they conform to standard variable naming rules.

const workflow = new Workflow();

// ❌Incorrect: Cannot destructure directly

// const { Efficient Loader } = workflow.classes;

// ✅Correct: Use the workflow.node method to create nodes

const [output1, output2] = workflow.node("Efficient Loader", {

// Node parameters

});

CLI

install

npm install @stable-canvas/comfyui-client-cli

Workflow to code

This tool converts the input workflow into executable code that uses this library.

Usage: nodejs-comfy-ui-client-code-gen [options]

Use this tool to generate the corresponding calling code using workflow

Options:

-V, --version output the version number

-t, --template [template] Specify the template for generating code, builtin tpl: [esm,cjs,web,none]

(default: "esm")

-o, --out [output] Specify the output file for the generated code. default to stdout

-i, --in <input> Specify the input file, support .json/.png file

-h, --help display help for command

example: api.json to code

cuc-w2c -i ./tests/test-inputs/workflow_api.json -t none

input file: ./cli/tests/test-inputs/workflow_api.json

const [LATENT_2] = cls.EmptyLatentImage({

width: 512,

height: 512,

batch_size: 1,

});

const [MODEL_1, CLIP_1, VAE_1] = cls.CheckpointLoaderSimple({

ckpt_name: "EPIC-la-v1.ckpt",

});

const [CONDITIONING_2] = cls.CLIPTextEncode({

text: "text, watermark",

clip: CLIP_1,

});

const [CONDITIONING_1] = cls.CLIPTextEncode({

text: "beautiful scenery nature glass bottle landscape, , purple galaxy bottle,",

clip: CLIP_1,

});

const [LATENT_1] = cls.KSampler({

seed: 156680208700286,

steps: 20,

cfg: 8,

sampler_name: "euler",

scheduler: "normal",

denoise: 1,

model: MODEL_1,

positive: CONDITIONING_1,

negative: CONDITIONING_2,

latent_image: LATENT_2,

});

const [IMAGE_1] = cls.VAEDecode({

samples: LATENT_1,

vae: VAE_1,

});

const [] = cls.SaveImage({

filename_prefix: "ComfyUI",

images: IMAGE_1,

});

example: export-png to code

cuc-w2c -i ./tests/test-inputs/workflow-min.png -t none

input file: ./cli/tests/test-inputs/workflow-min.png

const [MODEL_1, CLIP_1, VAE_1] = cls.CheckpointLoaderSimple({

ckpt_name: "EPIC-la-v1.ckpt",

});

const [CONDITIONING_2] = cls.CLIPTextEncode({

text: "beautiful scenery nature glass bottle landscape, , purple galaxy bottle,",

clip: CLIP_1,

});

const [CONDITIONING_1] = cls.CLIPTextEncode({

text: "text, watermark",

clip: CLIP_1,

});

const [LATENT_1] = cls.EmptyLatentImage({

width: 512,

height: 512,

batch_size: 1,

});

const [LATENT_2] = cls.KSampler({

seed: 156680208700286,

control_after_generate: "randomize",

steps: 20,

cfg: 8,

sampler_name: "euler",

scheduler: "normal",

denoise: 1,

model: MODEL_1,

positive: CONDITIONING_2,

negative: CONDITIONING_1,

latent_image: LATENT_1,

});

const [IMAGE_1] = cls.VAEDecode({

samples: LATENT_2,

vae: VAE_1,

});

const [] = cls.SaveImage({

filename_prefix: "ComfyUI",

images: IMAGE_1,

});

Since the order of widgets may change at any time, converting .png to code may be unstable. Using .json to code is recommended.
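The sketch below illustrates why positional widget values are fragile. It assumes that the workflow embedded in an exported PNG stores widget values as an ordered list, while the API-format JSON stores them by name; `nameWidgets` and both name lists are hypothetical and only serve the illustration.

```typescript
// Pair positional widget values with an assumed list of widget names.
function nameWidgets(
  names: string[],
  widgetValues: Array<string | number>
): Record<string, string | number> {
  const named: Record<string, string | number> = {};
  widgetValues.forEach((value, index) => {
    named[names[index] ?? `unknown_${index}`] = value;
  });
  return named;
}

// Positional values as they might appear for a KSampler node.
const samplerValues = [156680208700286, "randomize", 20, 8, "euler", "normal", 1];

// Hypothetical widget orders: the second one lacks the extra widget.
const currentOrder = [
  "seed",
  "control_after_generate",
  "steps",
  "cfg",
  "sampler_name",
  "scheduler",
  "denoise",
];
const staleOrder = ["seed", "steps", "cfg", "sampler_name", "scheduler", "denoise"];

console.log(nameWidgets(currentOrder, samplerValues)["steps"]); // prints: 20
console.log(nameWidgets(staleOrder, samplerValues)["steps"]); // prints: randomize
```

With the stale order every value after the seed shifts by one position, so the generated code would silently carry wrong parameters. Named inputs in the .json export avoid this class of error.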

Roadmap

- [x] workflow to code: Transpile workflow to code

  - [x] .json => code

  - [x] .png => code

- [ ] code to workflow: Output a json file that can be imported into the web front end

- [ ] Output type hints

Contributing

Contributions are welcome! Please feel free to submit a pull request.

Building

# Prior to the first build: `npm run build-types` to generate types for Comfy nodes.

npm run build-types

npm run build

License

MIT