@directus-labs/ai-image-moderation-operation

v1.0.1

Published

Use Clarifai's Moderation Recognition Model to analyze image safety.



AI Image Moderation Operation

Analyze images for drugs, gore, and suggestive or explicit material, powered by Clarifai.

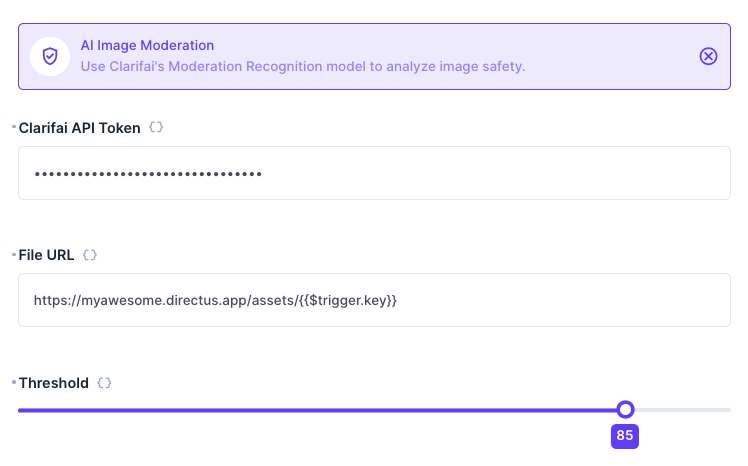

This operation has three configuration options: a Clarifai API Key, a link to a file, and a threshold percentage above which a concept is 'flagged'. It returns a JSON object containing a score for each concept and an array of the concepts that exceed the threshold.
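The thresholding described above can be sketched roughly as follows. This is an illustration only, not the extension's actual source: the function name `flagConcepts` is hypothetical, and it assumes the raw scores arrive as numbers between 0 and 1 that are converted to percentage strings with two decimals and compared strictly against the threshold.

```typescript
// Shape of the operation's result, as documented in the Output section.
interface Concept {
  name: string;
  value: string; // percentage formatted with two decimals, e.g. "0.00"
}

interface ModerationResult {
  concepts: Concept[];
  flags: string[];
}

// Hypothetical helper: turns raw scores (assumed to be in the range 0..1)
// into the documented structure and flags every concept whose percentage
// is strictly over the threshold.
function flagConcepts(
  scores: Record<string, number>,
  thresholdPercent: number
): ModerationResult {
  const concepts: Concept[] = [];
  const flags: string[] = [];

  for (const [name, score] of Object.entries(scores)) {
    const percent = score * 100;
    concepts.push({ name, value: percent.toFixed(2) });
    if (percent > thresholdPercent) {
      flags.push(name);
    }
  }

  return { concepts, flags };
}

// Example: with a threshold of 80, only "drug" is flagged.
const result = flagConcepts(
  { drug: 0.9999, suggestive: 0, gore: 0, explicit: 0 },
  80
);
console.log(result.flags);
```

A lower threshold flags more images and a higher one flags fewer, so the right value depends on how strict the moderation needs to be.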

Output

This operation outputs a JSON object with the following structure:

    {
      "concepts": [
        {
          "name": "drug",
          "value": "99.99"
        },
        {
          "name": "suggestive",
          "value": "0.00"
        },
        {
          "name": "gore",
          "value": "0.00"
        },
        {
          "name": "explicit",
          "value": "0.00"
        }
      ],
      "flags": [
        "drug"
      ]
    }

Flow Setup

Automatically Moderate New Files

Create a Flow with an Event Hook action trigger and a scope of files.upload. Add the AI Image Moderation operation and set the File URL to https://your-directus-project-url/assets/{{ $trigger.key }}, replacing your-directus-project-url with your own Directus Project URL.

This will work if your file is public, but if it isn't, you can append ?access_token=token to the File URL, replacing the value with a valid user token that has access to the file.
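As a rough illustration of the URL shape described above, the following sketch assembles the File URL and optionally appends the access token. The function name `buildFileUrl` and the example values are hypothetical; within a Flow you would normally type the URL straight into the operation's File URL field.

```typescript
// Hypothetical helper: builds the asset URL for a file and, for
// non-public files, appends an access token as a query parameter.
function buildFileUrl(
  projectUrl: string,
  fileId: string,
  accessToken?: string
): string {
  // Drop trailing slashes so the path is not doubled up.
  const base = projectUrl.replace(/\/+$/, "");
  const url = new URL(`${base}/assets/${encodeURIComponent(fileId)}`);

  if (accessToken) {
    url.searchParams.set("access_token", accessToken);
  }

  return url.toString();
}

// Public file: no token needed.
console.log(buildFileUrl("https://example.com/", "abc-123"));

// Private file: the token must belong to a user who can read the file.
console.log(buildFileUrl("https://example.com", "abc-123", "my-token"));
```

Because the token ends up in the URL, it is worth using a token whose permissions are limited to reading files.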

This operation will trigger on every new file upload, regardless of location or filetype. You may wish to add a conditional step between the trigger and moderation operation. The following condition rule will check that the file is an image:

    {
      "$trigger": {
        "payload": {
          "type": {
            "_contains": "image"
          }
        }
      }
    }
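To make the effect of this rule concrete, here is a small sketch of an equivalent check written by hand. It is not how Directus evaluates filter rules internally; the `UploadPayload` type and `isImageUpload` function are hypothetical, and the sketch assumes `_contains` behaves as a case-sensitive substring match on the payload's `type` field.

```typescript
// Minimal shape of the trigger payload relevant to the condition.
interface UploadPayload {
  type?: string | null; // MIME type of the uploaded file, e.g. "image/png"
}

// Hypothetical equivalent of the condition rule: passes only when the
// MIME type is a string that contains "image".
function isImageUpload(payload: UploadPayload): boolean {
  return typeof payload.type === "string" && payload.type.includes("image");
}

console.log(isImageUpload({ type: "image/png" }));
console.log(isImageUpload({ type: "application/pdf" }));
```

Uploads that fail the condition skip the moderation step, which avoids sending non-image files to Clarifai.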