@aicore/analytics-parser

v1.0.1

parse analytics dumps to json/csv/other formats

analyticsParser

A module that can transform raw analytics dump files.

Getting Started

Install this library

npm install @aicore/analytics-parser

Import the library

import {parseGZIP} from '@aicore/analytics-parser';

Get the analytics logs

The analytics logs will be structured in the storage bucket as follows:

- Each analytics app will have a root folder under which the analytics data is collected (e.g. brackets-prod).

- Within each app folder, the raw analytics dump files can be located easily by date. E.g. brackets-prod/2022/10/11/* will have all analytics data for that day.

- Download the analytics gzip files for the dates that you desire. https://cyberduck.io/ is a good utility for this on Windows and macOS.
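The folder layout above can be sketched as a small helper that builds the prefix for one day of data. This is only an illustration: dayPrefix is a hypothetical name, not part of this library, and the zero-padding of the month and day folders is an assumption, since the example date does not show how single-digit values are written.

```javascript
// Hypothetical helper (not part of @aicore/analytics-parser):
// builds the bucket prefix that holds one day of analytics dumps.
// Assumption: month and day folders are zero-padded to two digits.
function dayPrefix(appName, date) {
    const year = date.getUTCFullYear();
    const month = String(date.getUTCMonth() + 1).padStart(2, '0');
    const day = String(date.getUTCDate()).padStart(2, '0');
    return `${appName}/${year}/${month}/${day}/`;
}

// JavaScript months are zero-based, so 9 is October.
console.log(dayPrefix('brackets-prod', new Date(Date.UTC(2022, 9, 11))));
// prints "brackets-prod/2022/10/11/"
```

If the bucket turns out to use unpadded folders, dropping the padStart calls is the only change needed.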

Parse the extracted zip file

To parse the GZipped analytics dump file using the parseGZIP API:

// Give the gzip input file path. Note that the file name should be
// exactly of the form `brackets-prod.2022-11-30-9-13-17-656.v1.json.tar.gz` containing a single file
// `brackets-prod.2022-11-30-9-13-17-656.v1.json`.
let expandedJSON = await parseGZIP('path/to/brackets-prod.2022-11-30-9-13-17-656.v1.json.tar.gz');

Understanding return data type of parseGZIP API

The returned expandedJSON object is an array of event point objects as below.

Each event point object has the following fields:

- (type, category, subCategory): These three strings identify the event and are guaranteed to be present. E.g. (type: platform, category: startup, subCategory: time), (type: platform, category: language, subCategory: en-us), (type: UI, category: click, subCategory: closeBtn).

- uuid: Unique user ID that persists across sessions.

- sessionID: A session ID that gets reset on every session. E.g. closing the browser tab resets sessionID.

- clientTimeUTC: A Unix timestamp giving the time (accurate to 3 seconds) at which the event occurred according to the client's clock. This is the preferred time to use for the event. Note that the client clock may be wrong or misleading, as this is client-specified data, so check that it is within 30 minutes of serverTimeUTC.

- serverTimeUTC: A Unix timestamp giving the time (accurate to within 10 minutes) at which the event occurred according to the server's clock. Use this only to cross-reference with clientTimeUTC.

- count: The number of times the event occurred at that time. Guaranteed to be present.

- value: An optional string, usually representing a number. If present, count specifies the number of times the value happened. This is only present in events that track values. E.g. if we are tracking JS file open latencies, (value: 250, count: 2) means that we got 2 JS file open events, each with a latency of 250 units.

- geoLocation: The location of the user raising the event.
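The cross-referencing advice for clientTimeUTC and serverTimeUTC can be sketched as follows. pickEventTime is a hypothetical helper, not something this library exports, and falling back to serverTimeUTC when the two clocks disagree is this sketch's assumption; you may prefer to discard such events instead. The timestamps are assumed to be in milliseconds.

```javascript
// Hypothetical helper (not part of @aicore/analytics-parser).
// Assumption: both timestamps are Unix times in milliseconds.
const MAX_CLOCK_SKEW_MS = 30 * 60 * 1000; // the 30-minute tolerance

// Returns clientTimeUTC when it is within 30 minutes of serverTimeUTC.
// Otherwise falls back to serverTimeUTC (an assumption of this sketch).
function pickEventTime(event) {
    const { clientTimeUTC, serverTimeUTC } = event;
    const skew = Math.abs(clientTimeUTC - serverTimeUTC);
    return skew <= MAX_CLOCK_SKEW_MS ? clientTimeUTC : serverTimeUTC;
}

const sample = { clientTimeUTC: 1669799589768, serverTimeUTC: 1669799580000 };
console.log(pickEventTime(sample)); // prints 1669799589768
```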

[{
    "type": "usage",
    "category": "languageServerProtocol",
    "subCategory": "codeHintsphp",
    "count": 1,
    "value": "250", // value is optional, if present, the count specified the number of times the value happened.
    "geoLocation": {
        "city": "Gurugram (Sector 44)",
        "continent": "Asia",
        "country": "India",
        "isInEuropeanUnion": false
    },
    "sessionID": "cmn92zuk0i",
    "clientTimeUTC": 1669799589768,
    "serverTimeUTC": 1669799580000,
    "uuid": "208c5676-746f-4493-80ed-d919775a2f1d"
},...]

The Analytics Zip file

The analytics zip file name is of the format brackets-prod.YYYY-MM-DD-H-M-S-ms.v1.json.tar.gz. When extracted, it contains a single JSON file with a name of the form brackets-prod.YYYY-MM-DD-H-M-S-ms.v1.json (referred to from here on as the extracted JSON). The first part of the name is the app name (e.g. brackets-prod) to which the dump corresponds, and the second part is the timestamp (accurate to milliseconds) at which the dump was collected at the server.
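As an illustration of this naming scheme, the sketch below splits a dump file name into its app name and collection time. parseDumpFileName is a hypothetical helper and not part of this library. It assumes the timestamp in the name is in UTC and that the app name itself contains no date-like segment.

```javascript
// Hypothetical helper (not part of @aicore/analytics-parser).
// Matches <app>.YYYY-MM-DD-H-M-S-ms.v1.json with an optional .tar.gz suffix.
const DUMP_NAME =
    /^(.+)\.(\d{4})-(\d{1,2})-(\d{1,2})-(\d{1,2})-(\d{1,2})-(\d{1,2})-(\d{1,3})\.v1\.json(\.tar\.gz)?$/;

function parseDumpFileName(fileName) {
    const match = DUMP_NAME.exec(fileName);
    if (!match) {
        return null; // name does not follow the documented format
    }
    const [, appName, ...parts] = match;
    // The first seven captured parts are the date and time fields.
    const [year, month, day, hour, minute, second, ms] = parts.slice(0, 7).map(Number);
    return {
        appName,
        // Assumption: the timestamp in the file name is in UTC.
        collectedAt: new Date(Date.UTC(year, month - 1, day, hour, minute, second, ms))
    };
}

const info = parseDumpFileName('brackets-prod.2022-11-30-9-13-17-656.v1.json.tar.gz');
console.log(info.appName); // prints "brackets-prod"
console.log(info.collectedAt.toISOString()); // prints "2022-11-30T09:13:17.656Z"
```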

To learn more about the raw extracted JSON format, see this wiki.

But knowing the raw format is not necessary for this library. The purpose of this library is to convert this raw JSON to a much more human-readable JSON format via the parseGZIP API outlined above.
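Once parseGZIP has produced the event array described above, typical follow-up work is plain array processing. The sketch below is one possible approach, using hand-written sample events instead of a real dump. countByEvent and weightedMeanValue are hypothetical helpers, not part of this library.

```javascript
// Hypothetical helper: totals `count` per (type, category, subCategory).
function countByEvent(events) {
    const totals = new Map();
    for (const { type, category, subCategory, count } of events) {
        const key = `${type}/${category}/${subCategory}`;
        totals.set(key, (totals.get(key) || 0) + count);
    }
    return totals;
}

// Hypothetical helper: count-weighted mean of `value`,
// skipping events that carry no value.
function weightedMeanValue(events) {
    let sum = 0;
    let n = 0;
    for (const { value, count } of events) {
        if (value === undefined) {
            continue;
        }
        sum += Number(value) * count; // value is a string in the parsed output
        n += count;
    }
    return n === 0 ? null : sum / n;
}

// Sample events in the shape documented above (made-up data).
const events = [
    { type: 'usage', category: 'languageServerProtocol', subCategory: 'codeHintsphp', count: 2, value: '250' },
    { type: 'usage', category: 'languageServerProtocol', subCategory: 'codeHintsphp', count: 1, value: '100' },
    { type: 'UI', category: 'click', subCategory: 'closeBtn', count: 3 }
];

console.log(countByEvent(events).get('usage/languageServerProtocol/codeHintsphp')); // prints 3
console.log(weightedMeanValue(events)); // prints 200
```

Weighting by count matters because each event point can stand for several occurrences of the same value.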

Detailed API docs

See this link for detailed API docs.

Commands available

Building

Since this is a pure JS template project, the build command just runs the tests with coverage.

> npm install // do this only once.
> npm run build

Linting

To lint the files in the project, run the following command:

> npm run lint

To automatically fix lint errors:

> npm run lint:fix

Testing

To run all tests:

> npm run test
  Hello world Tests
    ✔ should return Hello World
  #indexOf()
    ✔ should return -1 when the value is not present

Additionally, to run unit/integration tests only, use the commands:

> npm run test:unit
> npm run test:integ

Coverage Reports

To run all tests with coverage:

> npm run cover
  Hello world Tests
    ✔ should return Hello World
  #indexOf()
    ✔ should return -1 when the value is not present

  2 passing (6ms)

----------|---------|----------|---------|---------|-------------------
File      | % Stmts | % Branch | % Funcs | % Lines | Uncovered Line #s
----------|---------|----------|---------|---------|-------------------
All files |     100 |      100 |     100 |     100 |
 index.js |     100 |      100 |     100 |     100 |
----------|---------|----------|---------|---------|-------------------

=============================== Coverage summary ===============================
Statements   : 100% ( 5/5 )
Branches     : 100% ( 2/2 )
Functions    : 100% ( 1/1 )
Lines        : 100% ( 5/5 )
================================================================================
Detailed unit test coverage report: file:///template-nodejs/coverage-unit/index.html
Detailed integration test coverage report: file:///template-nodejs/coverage-integration/index.html

After running coverage, detailed reports can be found in the coverage folders listed in the output of the coverage command. Open the file in a browser to view the detailed report.

To run unit/integration tests only with coverage:

> npm run cover:unit

> npm run cover:integ

Unit and Integration coverage configs

Unit and integration test coverage settings can be updated in the config files .nycrc.unit.json and .nycrc.integration.json.

See https://github.com/istanbuljs/nyc for config options.

Publishing packages to NPM

Preparing for release

Run npm run release on the main branch and push the changes to main. The release command will bump the npm version.

NB: npm publish will fail if there is another release with the same version.

Publishing

To publish a package to npm, push contents to the npm branch in this repository.

Publishing @aicore/package*

If you are looking to publish a package owned by core.ai, you will need access to the GitHub Organization secret NPM_TOKEN.

For repos managed by the aicore org in GitHub, please contact your admin to get access to core.ai's NPM tokens.

Publishing to your own npm account

Alternatively, if you want to publish the package to your own npm account, please follow these docs:

- Create an automation access token by following this link.

- Add NPM_TOKEN to your repository secret by following this link

To edit the publishing workflow, please see file: .github/workflows/npm-publish.yml

Dependency updates

We use Renovate for dependency updates: https://blog.logrocket.com/renovate-dependency-updates-on-steroids/

- By default, dependency updates happen every Sunday.

- The status of dependency updates can be viewed here if you have permissions for this repo in GitHub: https://app.renovatebot.com/dashboard#github/aicore/template-nodejs

- To edit Renovate options, edit the renovate.json file in the root. See https://docs.renovatebot.com/configuration-options/

Code Guardian

Several automated workflows that check code integrity are integrated into this template. These include:

- GitHub Actions that run build/test/coverage flows when a contributor raises a pull request

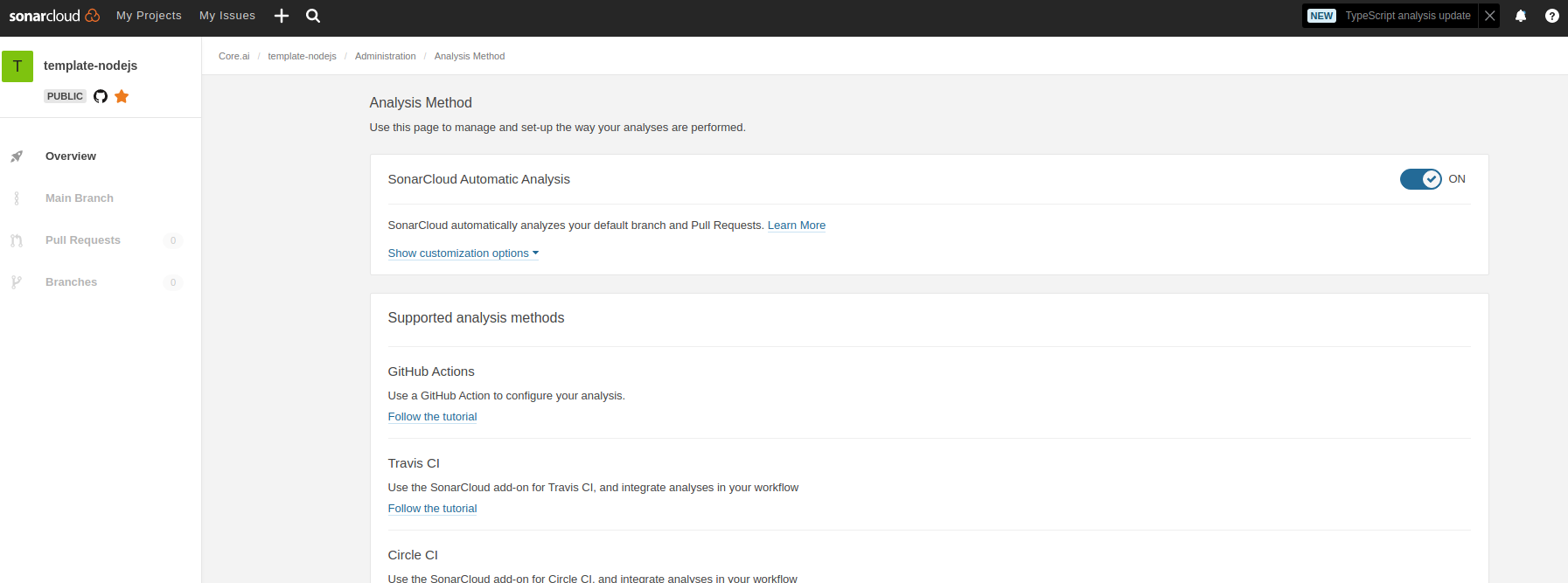

- Sonar cloud integration using .sonarcloud.properties

  - In Sonar cloud, enable Automatic analysis from Administration > Analysis Method for the first time

IDE setup

SonarLint is currently available as a free plugin for JetBrains, Eclipse, VS Code and Visual Studio IDEs. Use the SonarLint plugin for WebStorm or any of the available IDEs from this link before raising a pull request: https://www.sonarlint.org/ .

SonarLint static code analysis checker is not yet available as a Brackets extension.

Internals

Testing framework: Mocha, assertion style: Chai

See https://mochajs.org/#getting-started on how to write tests. Use Chai for BDD style assertions (expect, should etc.). See more here: https://www.chaijs.com/guide/styles/#expect

Mocks and spies:

Since it is not that straightforward to mock ES6 module imports, use the following pull request as a reference for mocking imported libs:

- sample pull request: https://github.com/aicore/libcache/pull/6/files

- setting up mocks

- using the mocks

Make sure to import setup-mocks.js as the first import of all files in tests.

Using the sinon lib if the above method doesn't fit your case

If you want to mock/spy on fn() for unit tests, use sinon. Refer to the docs: https://sinonjs.org/

Note on coverage suite used here:

We use c8 for coverage: https://github.com/bcoe/c8. Its reporting is based on nyc, so detailed docs can be found here: https://github.com/istanbuljs/nyc . We didn't use nyc as it does not yet have ES module support, see: https://github.com/digitalbazaar/bedrock-test/issues/16 . c8 is a drop-in replacement for the nyc coverage reporting tool.